I have a set of low-contrast image snippets that I'd like to binarize using Python.

I tried various thresholding methods, such as Otsu and Huang, but none of them works for all of my image snippets.

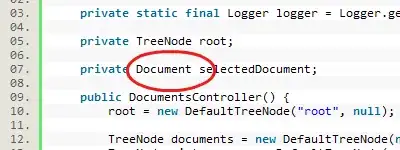

Following instructions like this one, I pieced together the code below:

```python
import glob
import math
import os

import cv2
import numpy as np


def permissions(targetfile):
    os.chmod(targetfile, mode=0o755)
    os.chown(targetfile, 1000, 1000)


# Resize snippet (12x upscaling in both directions)
def resize(image):
    return cv2.resize(image, None, fx=12, fy=12)


# Apply CLAHE (contrast-limited adaptive histogram equalization)
def clahe(image):
    # CLAHE parameters
    clip_limit = 6.0
    tile_rows, tile_cols = 9, 9
    image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    clahe_op = cv2.createCLAHE(clipLimit=clip_limit, tileGridSize=(tile_rows, tile_cols))
    return clahe_op.apply(image_gray)


# Binarize image using Huang's method (https://github.com/dnhkng/Huang-Thresholding)
def binarize(image):
    # image must be a 2-D uint8 numpy.ndarray
    histogram, _ = np.histogram(image, bins=range(257))
    huang_threshold = Huang(histogram)
    return np.where(image > huang_threshold, 1, 0).astype(np.uint8)


def Huang(data):
    """Implements Huang's fuzzy thresholding method.

    Uses Shannon's entropy function (one can also use Yager's entropy function).
    Huang L.-K. and Wang M.-J.J. (1995) "Image Thresholding by Minimizing
    the Measures of Fuzziness", Pattern Recognition, 28(1): 41-51
    """
    n_bins = len(data)  # 256 for an 8-bit histogram (the port used 254)
    threshold = -1

    # First and last non-empty histogram bins
    first_bin = 0
    for ih in range(n_bins):
        if data[ih] != 0:
            first_bin = ih
            break
    last_bin = n_bins - 1
    for ih in range(n_bins - 1, -1, -1):
        if data[ih] != 0:
            last_bin = ih
            break
    if last_bin == first_bin:
        return first_bin  # constant image: nothing to separate, avoid division by zero
    term = 1.0 / (last_bin - first_bin)

    # mu_0[t]: mean grey level of bins <= t
    mu_0 = np.zeros(n_bins)
    num_pix = 0.0
    sum_pix = 0.0
    for ih in range(first_bin, n_bins):
        sum_pix += ih * data[ih]
        num_pix += data[ih]
        mu_0[ih] = sum_pix / num_pix  # num_pix > 0 because data[first_bin] > 0

    # mu_1[t]: mean grey level of bins > t
    mu_1 = np.zeros(n_bins)
    num_pix = 0.0
    sum_pix = 0.0
    for ih in range(last_bin, 0, -1):
        sum_pix += ih * data[ih]
        num_pix += data[ih]
        mu_1[ih - 1] = sum_pix / num_pix  # num_pix > 0 because data[last_bin] > 0

    min_ent = float("inf")
    for it in range(n_bins):
        ent = 0.0
        for ih in range(it + 1):  # bins 0..it inclusive
            # Equation (4) in Reference
            mu_x = 1.0 / (1.0 + term * math.fabs(ih - mu_0[it]))
            if not (mu_x < 1e-06 or mu_x > 0.999999):
                # Equations (6) & (8) in Reference
                ent += data[ih] * (-mu_x * math.log(mu_x) - (1.0 - mu_x) * math.log(1.0 - mu_x))
        for ih in range(it + 1, n_bins):
            # Equation (4) in Reference
            mu_x = 1.0 / (1.0 + term * math.fabs(ih - mu_1[it]))
            if not (mu_x < 1e-06 or mu_x > 0.999999):
                # Equations (6) & (8) in Reference
                ent += data[ih] * (-mu_x * math.log(mu_x) - (1.0 - mu_x) * math.log(1.0 - mu_x))
        if ent < min_ent:
            min_ent = ent
            threshold = it
    return threshold


# Input files (set() avoids duplicates on case-insensitive file systems)
paths = sorted(set(glob.glob("./*.JPG") + glob.glob("./*.jpg")))

# Output directory
targetdir = "./output/"
os.makedirs(targetdir, exist_ok=True)
permissions(targetdir)

for img in paths:
    poststring = ""  # optional suffix for the output filename
    stem, ext = os.path.splitext(os.path.basename(img))
    targetfile = os.path.join(targetdir, stem + poststring + ext)

    if not os.path.exists(targetfile):
        print("Processing targetfile: ", targetfile)

        # Read image and resize
        image = cv2.imread(img)
        if image is None:
            print("Could not read: ", img)
            continue
        resized_image = resize(image)

        # CLAHE, then non-local-means denoising
        clahe_image = clahe(resized_image)
        denoised_image = cv2.fastNlMeansDenoising(clahe_image, h=21, templateWindowSize=9, searchWindowSize=21)

        # Huang thresholding (0/1 mask scaled to 0/255)
        binarized_image = binarize(denoised_image)
        binarized_image *= 255

        # Dilate
        kernel = np.ones((12, 12), np.uint8)
        dilate = cv2.dilate(binarized_image, kernel, iterations=3)

        # Flood fill from the borders to remove blobs touching the edge.
        # Use the size of the resized mask, not of the original image.
        h, w = dilate.shape[:2]
        for row in range(h):
            if dilate[row, 0] == 255:
                cv2.floodFill(dilate, None, (0, row), 0)
            if dilate[row, w - 1] == 255:
                cv2.floodFill(dilate, None, (w - 1, row), 0)
        for col in range(w):
            if dilate[0, col] == 255:
                cv2.floodFill(dilate, None, (col, 0), 0)
            if dilate[h - 1, col] == 255:
                cv2.floodFill(dilate, None, (col, h - 1), 0)

        # Opening removes small specks, closing fills small holes
        kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (7, 7))
        foreground = cv2.morphologyEx(dilate, cv2.MORPH_OPEN, kernel)
        foreground = cv2.morphologyEx(foreground, cv2.MORPH_CLOSE, kernel)

        # Creating background
        kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (17, 17))
        background = cv2.dilate(foreground, kernel, iterations=3)

        cv2.imwrite(targetfile, background)
        permissions(targetfile)
    else:
        print("Skipping, because already existing: ", targetfile)
        permissions(targetfile)

    print('')
```

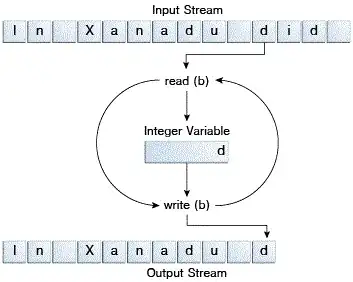

The result is still not satisfying:

Could you please advise on how to loose the noise, maintain the desired features and receive straight/ellipse-like contourlines?

The original snippets are available here for testing: download snippets