I'm working on a UI that should display the correct 'NFT Class' based on the 'Floor Price'. This is the code I'm using:

```javascript
function NFTclass() {
  try {
    if ((EthValue * DollarValue).toPrecision(2) >= '40.00') {
      setnftclasses('Class A NFT')
    } else if ((EthValue * DollarValue).toPrecision(2) >= '30.00') {
      setnftclasses('Class B NFT')
    } else if ((EthValue * DollarValue).toPrecision(2) >= '20.00') {
      setnftclasses('Class C NFT')
    } else if ((EthValue * DollarValue).toPrecision(2) >= '10.00') {
      setnftclasses('Class D NFT')
    } else if ((EthValue * DollarValue).toPrecision(2) < '10.00') {
      setnftclasses('No Class Determined')
    }
  } catch (e) {
    console.log(e)
  }
}
```

`(EthValue * DollarValue).toPrecision(2)` is the Floor Price.

As you can tell, I want the UI to display which class an NFT belongs to based on its Floor Price, but I'm having trouble. The code works perfectly when `(EthValue * DollarValue).toPrecision(2)` returns a value with two digits before the decimal point.

But when it returns a value with a single digit before the decimal point, the code doesn't set the correct class.

I think it's determining the class by reading only the first digit, not the first two.
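A quick check in a Node.js or browser console suggests the comparison is not literally "reading the first digit": `toPrecision` returns a string, and `>=` between two strings compares them character by character, so `'9.5' >= '40.00'` is decided by `'9'` versus `'4'`. The value `9.5` below is only an illustrative sample. Note also that `toPrecision(2)` means two *significant digits*, not two decimal places, so larger values switch to exponential notation.

```javascript
// toPrecision always returns a string, not a number.
const floor = (9.5).toPrecision(2); // "9.5"
console.log(typeof floor); // logs: string

// String vs string: compared character by character, so '9' > '4' decides it.
console.log(floor >= '40.00'); // logs: true

// Converting back to a number restores numeric comparison.
console.log(Number(floor) >= 40); // logs: false

// Two significant digits: a three-digit value becomes exponential notation.
console.log((123.4).toPrecision(2)); // logs: 1.2e+2
```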

My question is: what can I do to make the code read both digits before the decimal point before choosing the class?
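One way to avoid the string comparison, sketched under the assumption that `EthValue` and `DollarValue` hold numbers (or numeric strings) in the component: keep the floor price numeric for the comparisons and format it only for display. `getNftClass` is a hypothetical helper name; the thresholds and labels are copied from the code above, and treating non-finite input as "No Class Determined" is an added assumption.

```javascript
// Hypothetical helper: maps a numeric floor price to a class label.
// Thresholds and labels are the ones from the question; all comparisons are numeric.
function getNftClass(floorPrice) {
  // Assumption: anything that is not a finite number gets no class.
  if (!Number.isFinite(floorPrice)) return 'No Class Determined';
  if (floorPrice >= 40) return 'Class A NFT';
  if (floorPrice >= 30) return 'Class B NFT';
  if (floorPrice >= 20) return 'Class C NFT';
  if (floorPrice >= 10) return 'Class D NFT';
  return 'No Class Determined';
}

console.log(getNftClass(9.5)); // logs: No Class Determined
console.log(getNftClass(25));  // logs: Class C NFT
console.log(getNftClass(45));  // logs: Class A NFT
```

Inside the component this could be called as `setnftclasses(getNftClass(Number(EthValue) * Number(DollarValue)))`, while the display can still use `(EthValue * DollarValue).toFixed(2)` if two decimal places are wanted. This sketch classifies the unrounded product; if a value like 9.996 should count as 10.00, round it first, for example with `Math.round(x * 100) / 100`.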

There's also another problem. While testing one example where the Floor Price is 2+ ETH, it shows the wrong class.

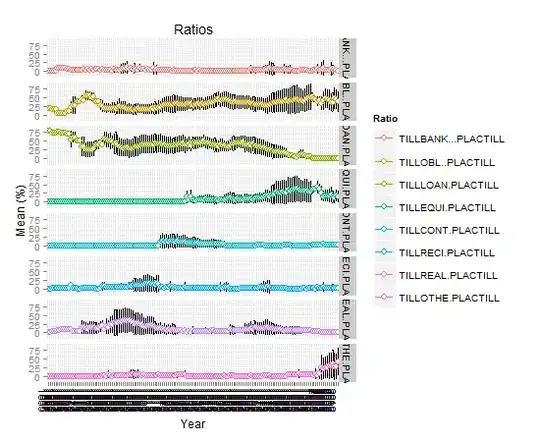

Here's the screenshot

If it really is determining the class from only the first digit, the code should have assigned Class C to the NFT in this screenshot, not Class D. Again, I'm not sure what I'm doing wrong.
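To see what the original chain does with a single-digit price, here is a hypothetical pure-function copy of it (`classFromOriginalChain` is an illustrative name, and `setnftclasses` is replaced by a return value). Assuming the floor price in the screenshot is a single-digit value starting with 2, such as 2.5 (an assumed sample, not the actual screenshot value), the string `"2.5"` fails `>= '20.00'` because its second character `'.'` sorts before `'0'`, and then passes `>= '10.00'` because `'2'` sorts after `'1'`, which lands on Class D.

```javascript
// Hypothetical copy of the question's if/else chain that returns the label
// instead of calling setnftclasses. Every comparison is string vs string.
function classFromOriginalChain(price) {
  const p = price.toPrecision(2);
  if (p >= '40.00') return 'Class A NFT';
  if (p >= '30.00') return 'Class B NFT';
  if (p >= '20.00') return 'Class C NFT';
  if (p >= '10.00') return 'Class D NFT';
  // The original's `< '10.00'` branch: strings are totally ordered,
  // so any string that failed `>= '10.00'` ends up here.
  return 'No Class Determined';
}

console.log(classFromOriginalChain(2.5)); // "2.5" -> logs: Class D NFT
console.log(classFromOriginalChain(25));  // "25"  -> logs: Class C NFT
console.log(classFromOriginalChain(5));   // "5.0" -> logs: Class A NFT
```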