One potential solution is to turn your for-loop into a function and apply it to each `disease_state` with `future_lapply()` from the future.apply package, e.g.:

```r
#install.packages("eulerr")
library(eulerr)
#BiocManager::install("microbiome")
library(microbiome)
#devtools::install_github("microsud/microbiomeutilities")
library(microbiomeutilities)
library(future.apply)
#> Loading required package: future
library(profvis)

data("zackular2014")
pseq <- zackular2014
table(meta(pseq)$DiseaseState, useNA = "always")
#> 
#>    CRC      H nonCRC   <NA> 
#>     30     30     28      0
pseq.rel <- microbiome::transform(pseq, "compositional")
disease_states <- unique(as.character(meta(pseq.rel)$DiseaseState))
```

```r
# Original for loop method:
list_core <- list() # an empty list to store the core taxa of each DiseaseState
for (n in disease_states) { # for each value n of DiseaseState
  #print(paste0("Identifying Core Taxa for ", n))
  ps.sub <- subset_samples(pseq.rel, DiseaseState == n) # keep only the samples whose DiseaseState is n
  core_m <- core_members(ps.sub, # ps.sub holds only the samples from n
                         detection = 0.001, # above 0.1% relative abundance...
                         prevalence = 0.75) # ...in 75% of samples
  print(paste0("No. of core taxa in ", n, " : ", length(core_m))) # print the number of core taxa in each DiseaseState
  list_core[[n]] <- core_m
}
#> [1] "No. of core taxa in nonCRC : 11"
#> [1] "No. of core taxa in CRC : 8"
#> [1] "No. of core taxa in H : 14"
mycols <- c(nonCRC="#d6e2e9", CRC="#cbf3f0", H="#fcf5c7")
plot(venn(list_core),
     fills = mycols)
```

```r
## multicore future_lapply method:
# make your loop into a function
run_each_disease_state <- function(disease_state) {
  # workaround: subset_samples() cannot see variables local to this function,
  # so copy disease_state into the global environment first
  assign("disease_state", disease_state, envir = globalenv())
  ps.sub <- subset_samples(pseq.rel,
                           DiseaseState == disease_state)
  core_m <- core_members(ps.sub,
                         detection = 0.001,
                         prevalence = 0.75)
  print(paste0("No. of core taxa in ", disease_state,
               " : ", length(core_m)))
  return(core_m)
}
```
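As far as I can tell, the `assign(..., envir = globalenv())` line is needed because `subset_samples()` hands its condition on to base `subset()`, which looks variables up in the sample data and then in `subset_samples()`'s own environment chain (which reaches the global environment), not in the function that called it. The base-R sketch below reproduces that pattern with a hypothetical wrapper, `subset_wrapper()`, and made-up metadata in `meta_df`, and shows that computing the logical index inside the function avoids the problem without writing to the global environment. The phyloseq analogue would presumably be `prune_samples(sample_data(pseq.rel)$DiseaseState == disease_state, pseq.rel)`, which I haven't tested on this data.

```r
# Hypothetical wrapper that forwards its condition to subset(),
# mimicking how phyloseq::subset_samples() passes `...` on to subset()
subset_wrapper <- function(df, ...) subset(df, ...)

# Made-up metadata standing in for meta(pseq.rel)
meta_df <- data.frame(sample = paste0("s", 1:6),
                      DiseaseState = rep(c("CRC", "H", "nonCRC"), each = 2))

# Fails: `wanted_state` is local to this function, but subset() searches
# the data and then subset_wrapper()'s environment chain, not this frame
count_via_wrapper <- function(wanted_state) {
  nrow(subset_wrapper(meta_df, DiseaseState == wanted_state))
}

# Works: build the logical index where `wanted_state` is in scope
count_via_index <- function(wanted_state) {
  keep <- meta_df$DiseaseState == wanted_state
  nrow(meta_df[keep, , drop = FALSE])
}

res <- try(count_via_wrapper("CRC"), silent = TRUE)
inherits(res, "try-error")  # TRUE
count_via_index("CRC")      # 2
```

Both helpers are illustrative only; with the real data you would keep `run_each_disease_state()` and change just the subsetting step.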

```r
#plan(multisession) # uncomment to run in parallel; under the default (sequential) plan, future_lapply() runs one element at a time
list_core <- future_lapply(disease_states, run_each_disease_state)
#> [1] "No. of core taxa in nonCRC : 11"
#> [1] "No. of core taxa in CRC : 8"
#> [1] "No. of core taxa in H : 14"
names(list_core) <- disease_states
mycols <- c(nonCRC="#d6e2e9", CRC="#cbf3f0", H="#fcf5c7")
plot(venn(list_core),
     fills = mycols)
```
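Keep in mind that future.apply only runs in parallel once a parallel plan has been set; under the default sequential plan, `future_lapply()` evaluates the elements one after another in the current session. Below is a minimal sketch of setting a plan for a block of work and restoring the previous one afterwards, using a toy function that only reports the process ID of the R session that evaluated it (two workers is an arbitrary choice).

```r
library(future.apply)

# Switch to two background R sessions; plan() returns the previous plan
old_plan <- plan(multisession, workers = 2)

# Each element reports the process ID of the session that evaluated it
worker_pids <- future_lapply(1:4, function(i) Sys.getpid())

# Restore whatever plan was active before
plan(old_plan)

# Every element ran in a worker process, not in this session
all(unlist(worker_pids) != Sys.getpid())  # TRUE
```

Inside a function, `on.exit(plan(old_plan), add = TRUE)` is the usual way to make sure the previous plan is restored even if an error occurs.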

```r
## profiling both methods
p1 <- profvis({
  list_core <- list() # an empty list to store the core taxa of each DiseaseState
  for (n in disease_states) { # for each value n of DiseaseState
    #print(paste0("Identifying Core Taxa for ", n))
    ps.sub <- subset_samples(pseq.rel, DiseaseState == n) # keep only the samples whose DiseaseState is n
    core_m <- core_members(ps.sub, # ps.sub holds only the samples from n
                           detection = 0.001, # above 0.1% relative abundance...
                           prevalence = 0.75) # ...in 75% of samples
    print(paste0("No. of core taxa in ", n, " : ", length(core_m))) # print the number of core taxa in each DiseaseState
    list_core[[n]] <- core_m
  }
  mycols <- c(nonCRC="#d6e2e9", CRC="#cbf3f0", H="#fcf5c7")
  plot(venn(list_core),
       fills = mycols)
})
#> [1] "No. of core taxa in nonCRC : 11"
#> [1] "No. of core taxa in CRC : 8"
#> [1] "No. of core taxa in H : 14"
```

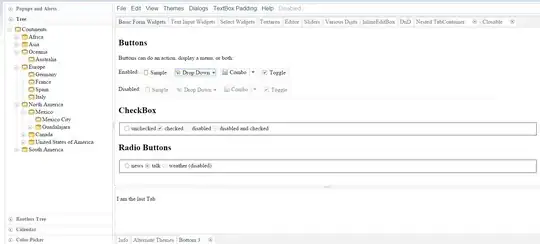

```r
htmlwidgets::saveWidget(p1, "singlecore.html")
browseURL("singlecore.html")
```

```r
p2 <- profvis({
  # make your loop into a function
  run_each_disease_state <- function(disease_state) {
    assign("disease_state", disease_state, envir = globalenv())
    ps.sub <- subset_samples(pseq.rel,
                             DiseaseState == disease_state)
    core_m <- core_members(ps.sub,
                           detection = 0.001,
                           prevalence = 0.75)
    print(paste0("No. of core taxa in ", disease_state,
                 " : ", length(core_m)))
    return(core_m)
  }
  list_core <- future_lapply(disease_states, run_each_disease_state)
  names(list_core) <- disease_states
  mycols <- c(nonCRC="#d6e2e9", CRC="#cbf3f0", H="#fcf5c7")
  plot(venn(list_core),
       fills = mycols)
})
#> [1] "No. of core taxa in nonCRC : 11"
#> [1] "No. of core taxa in CRC : 8"
#> [1] "No. of core taxa in H : 14"
```

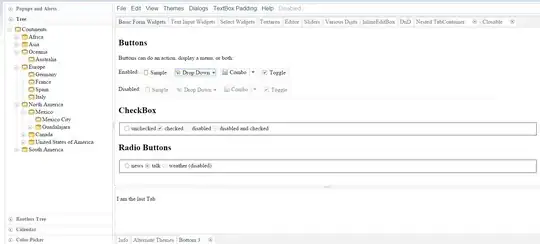

```r
htmlwidgets::saveWidget(p2, "multicore.html")
browseURL("multicore.html")
```

Created on 2022-10-25 by the reprex package (v2.0.1)

For this example, this cuts the execution time from ~130 ms to ~60 ms (compare singlecore.html with multicore.html), but how much time you save depends on the code you're actually running and on how long it takes to serialise and unserialise the chunks of data sent to each worker.
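To check whether parallelising pays off for your real data, you can time the same `future_lapply()` call under both plans. The sketch below uses a hypothetical `slow_task()` that just sleeps, as a stand-in for an expensive per-group computation such as `core_members()` on a large object. With work this artificial the parallel run should come out ahead, whereas for tasks that take only milliseconds, starting workers and shipping data to them can cancel out the gain. Timings vary by machine, so no numbers are given here.

```r
library(future.apply)

# Hypothetical stand-in for an expensive per-group computation
slow_task <- function(i) {
  Sys.sleep(0.5)  # simulate half a second of work
  i^2
}

# Sequential baseline (remember the plan that was active before)
old_plan <- plan(sequential)
t_seq <- system.time(res_seq <- future_lapply(1:4, slow_task))[["elapsed"]]

# Four background R sessions
plan(multisession, workers = 4)
t_par <- system.time(res_par <- future_lapply(1:4, slow_task))[["elapsed"]]

# Restore the original plan
plan(old_plan)

# Same results either way; compare the elapsed times yourself
identical(res_seq, res_par)
c(sequential = t_seq, multisession = t_par)
```

If the per-element work is small relative to the data copied to each worker (here `pseq.rel` would be exported along with the function), the `future.chunk.size` and `future.scheduling` arguments of `future_lapply()` control how elements are grouped into futures, and therefore how often that data is transferred.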