I am calculating the duration of data acquisition from several sensors. Although the data is collected at a higher rate, I would like to sample it at 10 Hz. To do this, I created a dataframe with a column called 'Time_diff', which I expect to go [0.0, 0.1, 0.2, 0.3, ...]. However, it goes something like [0.0, 0.1, 0.2, 0.30000004, ...]. I am rounding the dataframe, but I still get these stray decimals. Are there any suggestions on how to fix this?
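For reference, the stray digits can be reproduced without any sensor data. This is a minimal sketch (not the original code): 0.1 has no exact binary floating-point representation, so values such as `3 * 0.1` produced by `np.arange` are not exactly the double that the literal `0.3` denotes.

```python
import numpy as np

# 0.1 cannot be stored exactly in binary floating point, so multiples of it
# can pick up tiny representation errors.
t = np.arange(0, 1, 0.1)

print(float(t[3]))              # 0.30000000000000004
print(float(t[3]) == 0.3)       # False: not the double nearest to 0.3
print(float(round(t[3], 1)) == 0.3)  # True: rounding to 1 decimal recovers it
```

Only some indices are affected (0.1, 0.2 and 0.4 happen to come out exact here), which is why the problem looks sporadic.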

The code:

    import numpy as np

    for i in range(self.n_of_trials):
        # Collect the first and last time stamp of every sensor dataframe
        start = np.zeros(0)
        stop = np.zeros(0)
        for df in self.trials[i].df_list:
            start = np.append(start, df['Time'].iloc[0])
            stop = np.append(stop, df['Time'].iloc[-1])

        # Acquisition window shared by all sensors
        t_start = start.min()
        t_stop = stop.max()
        self.trials[i].duration = t_stop - t_start

        # Time axis starting at 0 with step dt (0.1 s for 10 Hz)
        t = np.arange(0, self.trials[i].duration + self.trials[i].dt, self.trials[i].dt)
        self.trials[i].df_merged['Time_diff'] = t
        self.trials[i].df_merged.round(1)
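One way to build the time axis so that it never carries the extra digits is to fix the number of samples as an integer first and round the result once. This is a sketch under the assumption that `duration` and `dt` are plain floats as in the code above; `make_time_axis` is a hypothetical helper, not part of NumPy or pandas. The NumPy documentation also notes that `np.arange` with a non-integer step can give an inconsistent number of elements, which the integer sample count avoids.

```python
import numpy as np

def make_time_axis(duration, dt, decimals=1):
    """Hypothetical helper: time stamps 0, dt, 2*dt, ... up to duration.

    The number of samples is computed once as an integer, so float error in
    the step cannot add or drop a sample, and every value is rounded to the
    requested number of decimals.
    """
    n_samples = int(round(duration / dt)) + 1
    return np.round(np.arange(n_samples) * dt, decimals)

t = make_time_axis(73.6, 0.1)
print(len(t))        # 737
print(t[3] == 0.3)   # True
```

With this, `df_merged['Time_diff'] = t` would already hold values that print and export as 0.3, 73.6, and so on.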

When I print the data, it looks like this:

    0      0.0
    1      0.1
    2      0.2
    3      0.3
    4      0.4
          ...
    732    73.2
    733    73.3
    734    73.4
    735    73.5
    736    73.6
    Name: Time_diff, Length: 737, dtype: float64
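The printed Series and the CSV file can disagree because pandas shows floats with limited precision (the `display.precision` option, 6 by default), while `to_csv` writes every significant digit. Separately, `DataFrame.round` returns a new DataFrame instead of modifying the existing one. A small sketch with a made-up frame illustrates both points:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Time_diff": np.arange(0, 1, 0.1)})

# The display is rounded to 6 digits, so the stored 0.30000000000000004
# is shown as 0.3 even though the value itself is unchanged.
print(df.head())

# DataFrame.round returns a NEW DataFrame; calling it without assigning
# the result leaves df unchanged.
df.round(1)
print(df["Time_diff"].iloc[3] == 0.3)   # False

df = df.round(1)                        # keep the rounded copy
print(df["Time_diff"].iloc[3] == 0.3)   # True

# Alternatively (or additionally), format floats only when writing the file.
csv_text = df.to_csv(float_format="%.1f")
```

Assigning the result, or passing `float_format` to `to_csv`, are independent options; only the first changes the values used later as merge keys.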

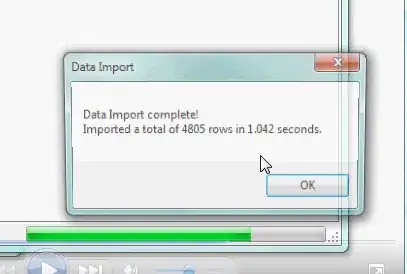

However, when I open it as a CSV file, it looks like this:

Addition

I think the problem is not the CSV conversion but how the float values are converted or rounded. Here is the next part of the code, where I merge more dataframes on the 10 Hz time stamps:

for j in range(len(self.trials[i].df_list)):

df = self.trials[i].df_list[j]

df.insert(0, 'Time_diff', round(df['Time']-t_start, 1))

df.round({'Time_diff': 1})

df.drop_duplicates(subset=['Time_diff'], keep='first', inplace=True)

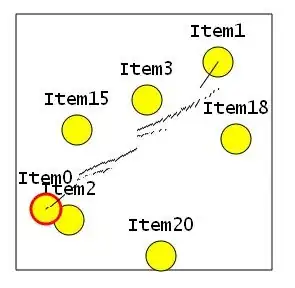

self.trials[i].df_merged = pd.merge(self.trials[i].df_merged, df, how="outer", on="Time_diff", suffixes=(None, '_'+self.trials[i].df_list_names[j]))

#Test csv

self.trials[2].df_merged.to_csv(path_or_buf='merged.csv')
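The round-then-`drop_duplicates` step above can also be done on an integer slot index, which compares exactly, and the 10 Hz time stamp can then be derived from that integer. This is a sketch assuming a `Time` column in seconds and a known `t_start`; `downsample_first`, `tick` and the sample data are hypothetical. `np.rint` rounds to the nearest slot much like `round(..., 1)` does for 10 Hz.

```python
import numpy as np
import pandas as pd

def downsample_first(df, t_start, rate_hz=10):
    """Hypothetical helper: keep the first row in each 1/rate_hz time slot."""
    # Integer slot index relative to the common start time
    ticks = np.rint((df["Time"].to_numpy() - t_start) * rate_hz).astype(np.int64)
    out = df.assign(tick=ticks)
    # Same effect as drop_duplicates(subset=[...], keep='first'), but on integers
    out = out[~out["tick"].duplicated(keep="first")]
    # tick / 10 yields the same double as the literal 0.3, 1.2, ...,
    # so frames built this way share identical Time_diff keys.
    return out.assign(Time_diff=out["tick"] / rate_hz)

sensor = pd.DataFrame({"Time": [100.00, 100.04, 100.11, 100.19, 100.31],
                       "value": [1, 2, 3, 4, 5]})
res = downsample_first(sensor, t_start=100.0)
print(res[["tick", "Time_diff", "value"]])
```

The integer `tick` column could also serve directly as the merge key instead of the float `Time_diff`.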

Since the inserted dataframes have exactly the expected decimals, their 'Time_diff' values do not match those in df_merged, so the rows are not merged properly and the merge creates a separate row with a new index instead.
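The effect on the merge can be reproduced in isolation. A minimal sketch with made-up values: the column name `Time_diff` comes from the question, while `left`, `right` and `value` are hypothetical. An outer merge matches float keys by exact equality, so `0.30000000000000004` and `0.3` end up on separate rows.

```python
import numpy as np
import pandas as pd

# Left: time axis built with float arithmetic and never rounded
# (as when the result of df_merged.round(1) is discarded).
left = pd.DataFrame({"Time_diff": np.arange(5) * 0.1})
# Right: sensor data whose Time_diff was rounded to 1 decimal.
right = pd.DataFrame({"Time_diff": np.round(np.arange(5) * 0.1, 1),
                      "value": [10, 11, 12, 13, 14]})

# 0.30000000000000004 != 0.3, so each side contributes an unmatched row.
bad = pd.merge(left, right, how="outer", on="Time_diff")
print(len(bad))   # 6

# Rounding the left key too (and assigning the result) makes the keys equal.
left["Time_diff"] = left["Time_diff"].round(1)
good = pd.merge(left, right, how="outer", on="Time_diff")
print(len(good))  # 5
```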