I am trying to import an unstructured CSV file from Data Lake Storage into Databricks, and I want to read the entire content of this file:

EdgeMaster

Name Value Unit Status Nom. Lower Upper Description

Type A A

Date 1/1/2022 B

Time 0:00:00 A

X 1 m OK 1 2 3 B

Y - A

EdgeMaster

Name Value Unit Status Nom. Lower Upper Description

Type B C

Date 1/1/2022 D

Time 0:00:00 C

X 1 m OK 1 2 3 D

Y - C

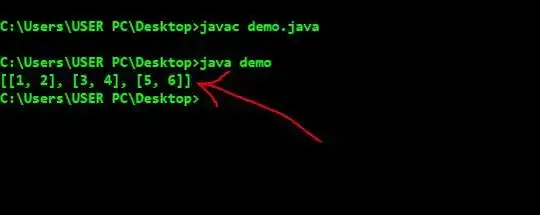

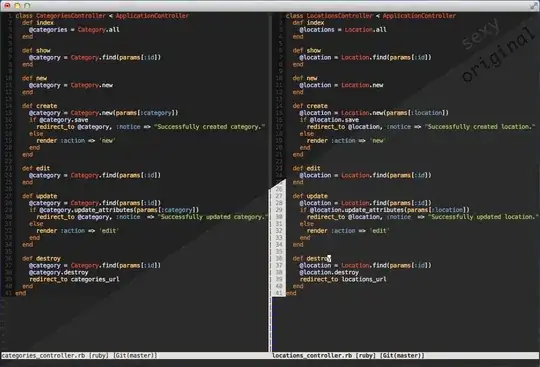
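The file is a sequence of repeated blocks (an `EdgeMaster` line, a header row, then data rows) rather than one table. One option is to read it as plain lines and split it yourself. In Databricks, `spark.read.text(path)` returns a DataFrame with a single string column, `value`, containing one row per line. The pure-Python sketch below covers only the splitting step. It assumes the file is comma-delimited (the delimiters were lost in the sample above) and that each block begins with an `EdgeMaster` line. `parse_blocks` is a hypothetical helper, not a library function.

```python
import csv
from typing import Dict, List


def parse_blocks(lines: List[str], marker: str = "EdgeMaster") -> List[List[Dict[str, str]]]:
    """Group lines into sections that start with `marker`, then parse each
    section as CSV, using its first non-blank line as the header row."""
    sections: List[List[str]] = []
    for line in lines:
        stripped = line.strip()
        if not stripped:
            continue  # skip blank lines between rows
        if stripped.strip(",") == marker:
            sections.append([])  # matches "EdgeMaster" or "EdgeMaster,,,,,,,"
        elif sections:
            sections[-1].append(line)
        # lines before the first marker are ignored
    # restval="" fills in columns missing from short rows
    return [list(csv.DictReader(section, restval="")) for section in sections]

# In a Databricks notebook, for example:
# lines = [r.value for r in spark.read.text("abfss://xyz/sample.csv").collect()]
# blocks = parse_blocks(lines)
```

Collecting every line to the driver like this is only practical for small files. For large files, the same logic would need to run on the DataFrame itself.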

1. Method 1: I tried reading the first line as a header:

   ```python
   # "csv" is Spark's built-in CSV source; "com.databricks.spark.csv" is the legacy alias for it
   df = spark.read.format("csv").option("header", "true").load("abfss://xyz/sample.csv")
   ```

2. Method 2: I read the file without a header.

3. Method 3: I defined a custom schema.
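With a custom schema and no header, the `EdgeMaster` marker lines and the repeated header rows would still be read as data rows, so they have to be filtered out afterwards. Below is a minimal sketch of that filtering in plain Python. It assumes comma-delimited lines and the eight columns from the header row. `tag_rows` and the `Block` column are hypothetical: the extra column records which `EdgeMaster` section each data row came from.

```python
import csv
from typing import List, Tuple

# Hypothetical column names taken from the header row; "Nom." is renamed to "Nom"
# because Spark treats a dot in a column name as struct-field access unless quoted.
COLUMNS = ["Name", "Value", "Unit", "Status", "Nom", "Lower", "Upper", "Description"]


def tag_rows(lines: List[str], marker: str = "EdgeMaster") -> List[Tuple[object, ...]]:
    """Drop marker, header, and blank rows; prefix each data row with its block index."""
    rows: List[Tuple[object, ...]] = []
    block = -1  # no marker line seen yet
    for fields in csv.reader(lines):
        if not any(f.strip() for f in fields):
            continue  # blank line
        first = fields[0].strip()
        if first == marker:
            block += 1  # a new section starts
            continue
        if first == "Name" or block < 0:
            continue  # repeated header row, or data before the first marker
        # Pad short rows and truncate long ones to exactly len(COLUMNS) fields
        padded = (fields + [""] * len(COLUMNS))[: len(COLUMNS)]
        rows.append((block, *padded))
    return rows

# In a Databricks notebook, for example:
# lines = [r.value for r in spark.read.text("abfss://xyz/sample.csv").collect()]
# df = spark.createDataFrame(tag_rows(lines), ["Block"] + COLUMNS)
```

Keeping all sections in one table with a `Block` column, rather than one table per section, means the sections can later be compared or pivoted with ordinary DataFrame operations.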