What we are trying to do:

We are trying to run a Cloud Run Job that does some computation and uses one of our custom packages to do it. The Cloud Run Job uses google-cloud-logging and Python's standard logging package as described here. The custom Python package also logs its data (only a logger is defined, as suggested here).
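For context, here is a minimal sketch of what "only a logger is defined" typically looks like inside a library. The module name `our_package.compute` and the body of `do_something` are hypothetical stand-ins, not our actual package code; the point is only that the library attaches no real handler and leaves configuration to the calling application.

```python
import logging

# Hypothetical module inside our_package: it only defines a logger.
# NullHandler keeps the library silent unless the application configures logging.
logger = logging.getLogger("our_package.compute")
logger.addHandler(logging.NullHandler())


def do_something():
    """Placeholder computation that logs at two different levels."""
    logger.info("starting computation")
    result = sum(range(10))
    logger.debug("intermediate result: %s", result)
    return result
```

With this layout, every record from the package propagates to whatever handlers the Cloud Run Job attaches to the root logger.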

Simple illustration:

    from google.cloud import logging as gcp_logging
    import logging
    import os
    import google.auth
    from our_package import do_something


    def log_test_function():
        SCOPES = ["https://www.googleapis.com/auth/cloud-platform"]
        credentials, project_id = google.auth.default(scopes=SCOPES)

        try:
            function_logger_name = os.getenv("FUNCTION_LOGGER_NAME")

            logging_client = gcp_logging.Client(credentials=credentials, project=project_id)
            logging.basicConfig()
            logger = logging.getLogger(function_logger_name)
            logger.setLevel(logging.INFO)
            logging_client.setup_logging(log_level=logging.INFO)

            logger.critical("Critical Log TEST")
            logger.error("Error Log TEST")
            logger.info("Info Log TEST")
            logger.debug("Debug Log TEST")

            result = do_something()
            logger.info(result)
        except Exception as e:
            print(e)  # just to test how print works

        return "Returned"


    if __name__ == "__main__":
        result = log_test_function()
        print(result)

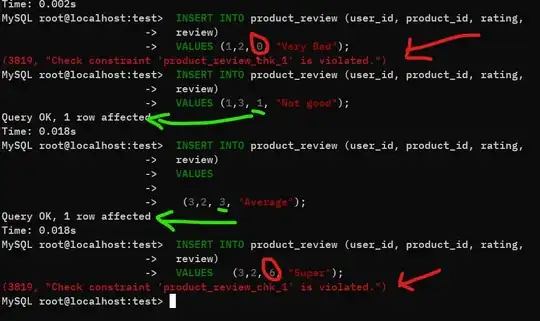

The blue box indicates logs from the custom package.

The black box indicates logs from the Cloud Run Job.

Cloud Logging is not able to identify the severity of the logs. It parses every log entry at the default level.

But if I run the same code in a Cloud Function, it works as expected (i.e. the severity level of logs from the Cloud Function and the custom package is respected), as shown in the image below.

Both are serverless architectures, so why does it work in Cloud Functions but not in Cloud Run?

What we want to do:

We want to log every message from Cloud Run Job and custom package to Cloud Logging with correct severity.
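To make the goal concrete: Cloud Run treats a JSON line written to stdout or stderr as a structured entry and reads its `severity` field. Below is a minimal sketch of a formatter that produces such lines using only the standard library. The names `JsonSeverityFormatter`, `build_json_handler` and `severity_demo` are made up for illustration, and the demo writes to an in-memory buffer; in a real job the stream would be `sys.stdout`. This is one possible approach, not necessarily what google-cloud-logging does internally.

```python
import io
import json
import logging


class JsonSeverityFormatter(logging.Formatter):
    """Render each record as a single JSON line carrying a `severity` field."""

    def format(self, record):
        payload = {
            "severity": record.levelname,  # CRITICAL, ERROR, WARNING, INFO, DEBUG
            "message": record.getMessage(),
            "logger": record.name,
        }
        return json.dumps(payload)


def build_json_handler(stream):
    """Return a StreamHandler that writes JSON lines to the given stream."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonSeverityFormatter())
    return handler


# Demo against an in-memory buffer (assumption: replace with sys.stdout in the job).
buffer = io.StringIO()
demo_logger = logging.getLogger("severity_demo")
demo_logger.setLevel(logging.INFO)
demo_logger.propagate = False  # keep the demo isolated from the root logger
demo_logger.addHandler(build_json_handler(buffer))

demo_logger.error("Error Log TEST")
demo_logger.debug("Debug Log TEST")  # below INFO, so it is dropped

lines = buffer.getvalue().splitlines()
```

Attaching such a handler to the root logger would also cover records coming from the custom package, since those propagate upward.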

We would appreciate your help guys!

Edit 1

Following the solution from the Google Cloud Python library committers almost solved the problem. The modified code is below.

    from google.cloud import logging as gcp_logging
    import logging
    import os
    import google.auth
    from our_package import do_something
    from google.cloud.logging.handlers import CloudLoggingHandler
    from google.cloud.logging_v2.handlers import setup_logging
    from google.cloud.logging_v2.resource import Resource
    from google.cloud.logging_v2.handlers._monitored_resources import retrieve_metadata_server, _REGION_ID, _PROJECT_NAME


    def log_test_function():
        SCOPES = ["https://www.googleapis.com/auth/cloud-platform"]
        region = retrieve_metadata_server(_REGION_ID)
        project = retrieve_metadata_server(_PROJECT_NAME)

        try:
            function_logger_name = os.getenv("FUNCTION_LOGGER_NAME")

            # build a manual resource object
            cr_job_resource = Resource(
                type="cloud_run_job",
                labels={
                    "job_name": os.environ.get('CLOUD_RUN_JOB', 'unknownJobId'),
                    "location": region.split("/")[-1] if region else "",
                    "project_id": project
                }
            )

            logging_client = gcp_logging.Client()
            gcloud_logging_handler = CloudLoggingHandler(logging_client, resource=cr_job_resource)
            setup_logging(gcloud_logging_handler, log_level=logging.INFO)

            logging.basicConfig()
            logger = logging.getLogger(function_logger_name)
            logger.setLevel(logging.INFO)

            logger.critical("Critical Log TEST")
            logger.error("Error Log TEST")
            logger.warning("Warning Log TEST")
            logger.info("Info Log TEST")
            logger.debug("Debug Log TEST")

            result = do_something()
            logger.info(result)
        except Exception as e:
            print(e)  # just to test how print works

        return "Returned"


    if __name__ == "__main__":
        result = log_test_function()
        print(result)

Now every log is logged twice: once as a severity-sensitive entry and once as a severity-insensitive entry at the "default" level, as shown below.
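One way to investigate the duplicates is to list which handlers a record passes through on its way up the logger hierarchy. The helper below is a diagnostic sketch using only the standard library; `describe_handlers` and the `dup_demo` logger names are hypothetical, and the sketch does not by itself establish what causes the second entry in our job.

```python
import logging


def describe_handlers(logger_name=None):
    """Return (logger name, handler class name) pairs from the named logger up to the root."""
    found = []
    current = logging.getLogger(logger_name)
    while current is not None:
        for handler in current.handlers:
            found.append((current.name, type(handler).__name__))
        if not current.propagate:
            break  # records stop here, so handlers further up never see them
        current = current.parent
    return found


# Isolated demo: a parent with a stream handler and a child with a NullHandler.
parent = logging.getLogger("dup_demo")
parent.propagate = False
parent.addHandler(logging.StreamHandler())
child = logging.getLogger("dup_demo.job")
child.addHandler(logging.NullHandler())

pairs = describe_handlers("dup_demo.job")
```

Calling `describe_handlers(os.getenv("FUNCTION_LOGGER_NAME"))` inside the job, right before the first log call, would show every handler that receives each record.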