I'm new to the OCR world, and I have documents containing numbers to analyse with Python, OpenCV and pytesseract. The files I received are PDFs, and the numbers are not stored as text. So I converted them to JPG with this:

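    from pdf2image import convert_from_path

    # Render only the first page of the PDF at 600 DPI and save it as a JPEG
    first_page = convert_from_path(path__to_pdf, dpi=600, first_page=1, last_page=1)
    first_page[0].save(TEMP_FOLDER+'temp.jpg', 'JPEG')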

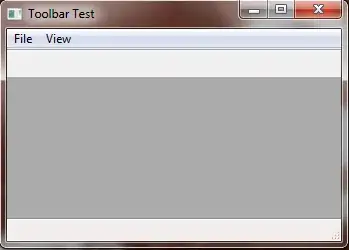

Then , the images look like this : I still have some noise around the digits.

I tried to keep only the black pixels with this:

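    import cv2
    import numpy as np

    # img_raw is assumed to be a BGR image (e.g. loaded with cv2.imread)
    img_hsv = cv2.cvtColor(img_raw, cv2.COLOR_BGR2HSV)
    img_changing = cv2.cvtColor(img_raw, cv2.COLOR_BGR2GRAY)

    # Accept any hue and saturation, but only very dark pixels (V <= 30)
    low_color = np.array([0, 0, 0])
    high_color = np.array([180, 255, 30])
    blackColorMask = cv2.inRange(img_hsv, low_color, high_color)

    # Invert, keep only the masked pixels, then invert back:
    # everything outside the mask ends up white
    img_inversion = cv2.bitwise_not(img_changing)
    img_black_filtered = cv2.bitwise_and(img_inversion, img_inversion, mask=blackColorMask)
    img_final_inversion = cv2.bitwise_not(img_black_filtered)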

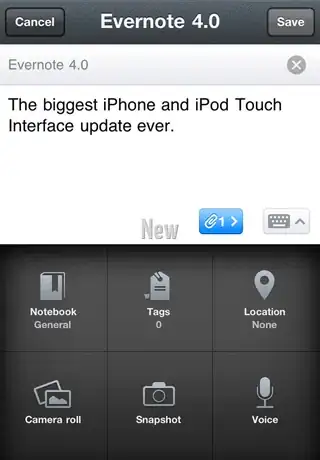

So, with this code, my image looks like this :
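
To make the effect of the black filter concrete, here is a minimal NumPy-only sketch of what the masking steps should compute, as far as I understand them. It relies on the fact that, for 8-bit images, OpenCV's HSV value channel V is the maximum of the B, G and R channels, so a range that accepts any hue and saturation only tests brightness. The function name `keep_dark_pixels`, the `v_max` parameter and the four-pixel test image are made up for illustration and are not part of my real pipeline.

```python
import numpy as np

def keep_dark_pixels(img_bgr, v_max=30):
    """Return a grayscale image in which every pixel brighter than v_max is white."""
    # V in 8-bit HSV is max(B, G, R), so "V <= v_max, any H and S" reduces to this.
    dark = img_bgr.max(axis=2) <= v_max
    # Grayscale with the usual BT.601 weights (channel order is B, G, R).
    gray = np.rint(img_bgr @ np.array([0.114, 0.587, 0.299])).astype(np.uint8)
    # invert -> mask -> invert is the same as: keep gray where dark, white elsewhere.
    return np.where(dark, gray, 255).astype(np.uint8)

# Synthetic 1x4 image: black ink, dark gray, mid gray, white paper.
demo = np.array(
    [[[0, 0, 0], [25, 25, 25], [90, 90, 90], [255, 255, 255]]],
    dtype=np.uint8,
)
result = keep_dark_pixels(demo)
print(result.tolist())
```

With a threshold of 30, the mid-gray pixel is turned white along with the paper, while the dark-gray pixel is kept unchanged.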

Even with cv2.blur, fewer than 75% of the images are analysed completely: for at least 25% of them, pytesseract misses one or more digits. Is that normal? Do you have any ideas for what I can do to maximize the success rate?

Thanks