I have been experimenting with performance of operation in Numpy and it turns out that performing element-wise operation (for example np.multiply) on matrices with inconsistent memory layout (one with order 'C' and second 'F') is around 2 times slower when compared to analogous operation on matrices with matching memory layout. This is only to be expected since memory local referencing has to be taken into account.

What surprises me is that when those matrices have dimensions being multiplication of big power of two the penalty of operation performed on memory inconsistent data is much larger (being 4 and more times slower than its memory matching counterpart).

To emphasize the phenomena element-wise multiplication on memory inconsistent matrices of sizes 1024x1024 is about as costly as matrices with sizes 1800x1800.

My setup (result of running lshw -short):

H/W path Device Class Description

==============================================================

system 20KN001QPB (LENOVO_MT_20KN_BU_Think_FM_ThinkPad E480)

/0 bus 20KN001QPB

/0/3 memory 16GiB System Memory

/0/3/0 memory 8GiB SODIMM DDR4 Synchronous Unbuffered (Unregistered) 2400 MHz (0,4 ns)

/0/3/1 memory 8GiB SODIMM DDR4 Synchronous Unbuffered (Unregistered) 2400 MHz (0,4 ns)

/0/7 memory 256KiB L1 cache

/0/8 memory 1MiB L2 cache

/0/9 memory 6MiB L3 cache

/0/a processor Intel(R) Core(TM) i5-8250U CPU @ 1.60GHz

/0/b memory 128KiB BIOS

/0/100 bridge Xeon E3-1200 v6/7th Gen Core Processor Host Bridge/DRAM Registers

/0/100/2 display UHD Graphics 620

/0/100/8 generic Xeon E3-1200 v5/v6 / E3-1500 v5 / 6th/7th/8th ...

Environment:

- Python 3.9.2

- numpy 1.23.4

Code to reproduce experiment:

import numpy as np

from matplotlib import pyplot as plt

from timeit import default_timer as timer

def measure_time(data, op, rep=10):

start = timer()

for i in range(rep):

op(data)

return (timer() - start) / rep

def prep_matrix(n, order='C'):

return np.ones((n, n), order=order)

def mul(data):

a, b = data

np.multiply(a, b)

times = [measure_time((prep_matrix(i), prep_matrix(i)), mul, 3) for i in range(0, 3100, 1)]

times2 = [measure_time((prep_matrix(i), prep_matrix(i, order='F')), mul, 3) for i in range(0, 3100, 1)]

plt.plot(times)

plt.plot(times2)

plt.show()

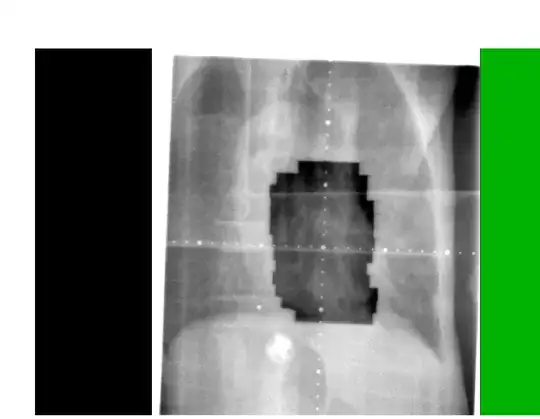

Result (In case stack won't let me publish image appropriate plot can be found here: plot):

Where orange line shows execution time in second of matrices multiplication with different memory layout (and blue one with matching layout). X-axis corresponds to size of matrices.

Where orange line shows execution time in second of matrices multiplication with different memory layout (and blue one with matching layout). X-axis corresponds to size of matrices.

One can clearly see that something suspicious is going on. But why?