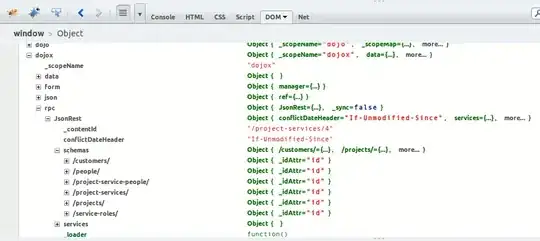

Here are the stages of my Spark job:

It has 260,000 tasks because the job relies on more than 200,000 small HDFS files, each about 50 MB and stored in gzip format.

I tried the following settings to reduce the number of tasks, but they had no effect.

...

--conf spark.sql.mergeSmallFileSize=10485760 \

--conf spark.hadoopRDD.targetBytesInPartition=134217728 \

--conf spark.hadoopRDD.targetBytesInPartitionInMerge=134217728 \

...
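For scale, here is a rough back-of-the-envelope estimate of what a 128 MB target could achieve at best. It is only a sketch under two assumptions that I have not verified: that each of the current 260,000 tasks reads exactly one file, and that merging packs whole files into a partition until the target size is reached. The function name `estimate_tasks` is made up for illustration.

```python
def estimate_tasks(num_files: int, file_size_bytes: int, target_bytes: int) -> int:
    """Estimate the task count if whole files are packed up to target_bytes.

    Assumption: a file is never split across partitions, so a partition
    holds floor(target / file_size) files, and at least one.
    """
    files_per_partition = max(1, target_bytes // file_size_bytes)
    # Ceiling division: the last partition may be only partly full.
    return -(-num_files // files_per_partition)


NUM_FILES = 260_000               # assumption: one file per current task
FILE_SIZE_BYTES = 50 * 1024 * 1024  # about 50 MB per file
TARGET_BYTES = 134_217_728        # the 128 MB value used in the settings above

print(estimate_tasks(NUM_FILES, FILE_SIZE_BYTES, TARGET_BYTES))
```

Under these assumptions only two 50 MB files fit into a 128 MB partition, so even a working merge would roughly halve the task count rather than shrink it dramatically.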

Is it because the files are in gzip format that they cannot be merged?

What can I do to reduce the number of tasks in this job?