My use case is the following:

Once a day, I upload 1,000 single-page PDFs to Azure Storage and process them with Form Recognizer using the latest version of the Python client library (`azure-ai-formrecognizer`).

So far, I've been using the async version of the client and sending all 1,000 coroutines concurrently.

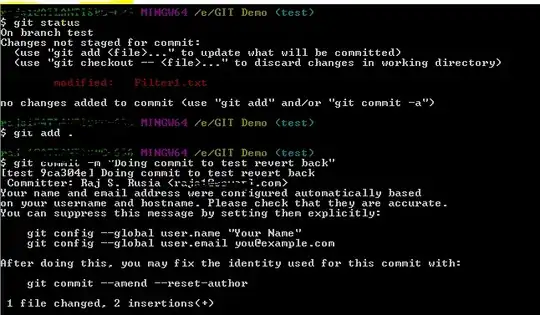

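```python
import asyncio

# Runs inside an async function; analyze_async(doc) is my coroutine that analyzes one document
tasks = {asyncio.create_task(analyze_async(doc)): doc for doc in documents}
pending = set(tasks)

# Handle retries
while pending:
    # Back off in case of 429 (asyncio.sleep, unlike time.sleep, does not block the event loop)
    await asyncio.sleep(1)

    # Run the pending calls concurrently and wait until every one has completed
    finished, pending = await asyncio.wait(
        pending, return_when=asyncio.ALL_COMPLETED
    )

    # If a task raised an exception, schedule a new attempt for the same document
    for task in finished:
        doc = tasks[task]
        if task.exception():
            new_task = asyncio.create_task(analyze_async(doc))
            tasks[new_task] = doc
            pending.add(new_task)
```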
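One alternative to retrying the whole batch after a fixed 1-second pause is to retry each document on its own, with exponential backoff and random jitter, while an `asyncio.Semaphore` caps how many requests are in flight at once (15 here, the default TPS limit; note that concurrent requests are not exactly the same thing as requests per second). This is a minimal sketch, assuming the analyze coroutine raises a hypothetical `ThrottledError` on a 429; with the real SDK that would most likely be `azure.core.exceptions.HttpResponseError` with `status_code == 429`. The names `analyze_with_retry`, `analyze_all`, and the fake service in `demo` are illustrative only.

```python
import asyncio
import random


class ThrottledError(Exception):
    """Hypothetical stand-in for a 429 (Too Many Requests) response."""


async def analyze_with_retry(analyze, doc, semaphore, max_attempts=6,
                             base_delay=1.0, max_delay=30.0):
    """Retry one document with exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        async with semaphore:  # cap the number of in-flight requests
            try:
                return await analyze(doc)
            except ThrottledError:
                if attempt == max_attempts - 1:
                    raise  # give up after the last attempt
        # Sleep outside the semaphore so other documents can use the slot
        delay = min(max_delay, base_delay * 2 ** attempt)
        await asyncio.sleep(random.uniform(0, delay))


async def analyze_all(analyze, documents, max_concurrency=15, **retry_options):
    """Analyze every document concurrently, at most max_concurrency at a time."""
    semaphore = asyncio.Semaphore(max_concurrency)
    return await asyncio.gather(
        *(analyze_with_retry(analyze, doc, semaphore, **retry_options)
          for doc in documents)
    )


async def demo():
    calls = {}

    async def fake_analyze(doc):
        # Hypothetical fake service: throttles each document once, then succeeds
        calls[doc] = calls.get(doc, 0) + 1
        if calls[doc] == 1:
            raise ThrottledError(doc)
        return f"result-{doc}"

    results = await analyze_all(fake_analyze, range(5), max_concurrency=2,
                                base_delay=0.01)
    return results, calls


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Sleeping outside the semaphore lets other documents proceed while a throttled one waits, and errors other than throttling propagate immediately instead of being retried indefinitely, as they would be in the loop above.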

I'm not really comfortable with this setup, mainly because the service's behavior within a single iteration is unpredictable: it can accept requests, then return 429 (Too Many Requests), then accept requests again. That isn't deterministic enough for me. Is there a better approach? Should I instead increase the request rate progressively, starting at 15 TPS (the default limit), then 50 … 100, until the queue is empty? Or is there another option? Thanks.
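The progressive ramp-up idea can be sketched as AIMD (additive increase, multiplicative decrease), the scheme TCP uses for congestion control: grow the batch size by a fixed step after every round with no 429, halve it after any round with a 429, and requeue only the throttled documents. This is a swapped-in technique, not something Form Recognizer provides. `ThrottledError`, `process_with_ramp_up`, `make_fake_service`, and all parameter values are hypothetical, and the batch size controls concurrent requests per round rather than a strict transactions-per-second rate.

```python
import asyncio
from collections import deque


class ThrottledError(Exception):
    """Hypothetical stand-in for a 429 (Too Many Requests) response."""


async def process_with_ramp_up(analyze, documents, start=15, step=15,
                               max_limit=100, pause=1.0):
    """Drain a queue in rounds, adapting the batch size AIMD-style."""
    queue = deque(documents)
    results = {}
    limit = start
    history = []  # batch size used in each round, for inspection
    while queue:
        batch = [queue.popleft() for _ in range(min(limit, len(queue)))]
        history.append(len(batch))
        outcomes = await asyncio.gather(
            *(analyze(doc) for doc in batch), return_exceptions=True
        )
        throttled = False
        for doc, outcome in zip(batch, outcomes):
            if isinstance(outcome, ThrottledError):
                throttled = True
                queue.append(doc)  # retry this document in a later round
            elif isinstance(outcome, BaseException):
                raise outcome  # other errors are not retried in this sketch
            else:
                results[doc] = outcome
        if throttled:
            limit = max(1, limit // 2)  # multiplicative decrease
            await asyncio.sleep(pause)  # back off before the next round
        else:
            limit = min(max_limit, limit + step)  # additive increase
    return results, history


def make_fake_service(capacity):
    """Hypothetical fake service that throttles when too many calls overlap."""
    in_flight = 0

    async def analyze(doc):
        nonlocal in_flight
        in_flight += 1
        try:
            await asyncio.sleep(0)  # let the other calls in the batch start
            if in_flight > capacity:
                raise ThrottledError(doc)
            return f"ok-{doc}"
        finally:
            in_flight -= 1

    return analyze


async def demo():
    analyze = make_fake_service(capacity=20)
    return await process_with_ramp_up(analyze, range(40), pause=0)


if __name__ == "__main__":
    results, history = asyncio.run(demo())
    print(len(results), history)
```

With the fake service capped at 20 overlapping calls, the first round of 15 succeeds, the second round of 25 is partly throttled, and the limit drops back to 15 before the throttled documents are retried. Compared with a fixed schedule (15, then 50, then 100), the feedback loop lets the observed 429s decide how fast to go.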