I am working on PySpark code that reads CSV files and saves them to an Azure storage container using Databricks. Reading and writing the CSV files is straightforward with the reader and writer APIs. Some of our CSV files have column headers that contain spaces, so we decided to use the column mapping feature that Databricks provides. To keep the code generic for all CSV files, whether or not their column headers contain spaces or special characters, we decided to create all of our Delta tables with that feature enabled.
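
To make the use case concrete, here is a minimal sketch of a check for which CSV headers would need column mapping. The helper name `headers_needing_mapping` and the sample header are hypothetical, and the character set is an assumption based on the characters Delta reports as invalid in column names when column mapping is off; it is not taken from my actual code.

```python
import csv
import io
from typing import List

# Assumption: characters rejected in Delta/Parquet column names
# when column mapping is not enabled.
INVALID_CHARS = set(" ,;{}()\n\t=")


def headers_needing_mapping(header_line: str) -> List[str]:
    """Return the header names that contain at least one invalid character."""
    # Parse the header row the way a CSV reader would, so quoted names are handled.
    names = next(csv.reader(io.StringIO(header_line)))
    return [name for name in names if any(ch in INVALID_CHARS for ch in name)]


# Hypothetical header row for illustration only.
sample = "order id,customer,unit price (USD)"
print(headers_needing_mapping(sample))  # ['order id', 'unit price (USD)']
```

Because any file may contain such headers, enabling column mapping on every table avoids having to run a check like this per file.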

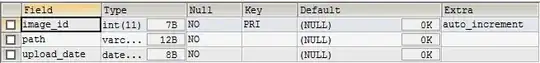

I see an odd folder structure in the Azure storage container where the actual Parquet files are created.

Image of the Delta table's physical Parquet files without column mapping:

Image of the Delta table's physical Parquet files with column mapping:

I am not partitioning the table in either case.

I would like to know why those randomly named folders get created and what purpose they serve when someone uses the column mapping feature of Databricks.

I searched for detailed documentation on this but could not find anything useful.

Update:

I am using a simple CREATE TABLE script with the three additional TBLPROPERTIES mentioned in the column mapping documentation.

    CREATE TABLE delta_table
    USING DELTA
    TBLPROPERTIES ('delta.minReaderVersion' = '2',
                   'delta.minWriterVersion' = '5',
                   'delta.columnMapping.mode' = 'name')

Ref Doc: https://learn.microsoft.com/en-us/azure/databricks/delta/delta-column-mapping
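
For reference, a minimal sketch of how the same script could be generated for an arbitrary table name, since the goal is to create every table this way. The helper name `column_mapping_ddl` is hypothetical and only builds the SQL string; it assumes the table name is a trusted identifier.

```python
def column_mapping_ddl(table_name: str) -> str:
    """Build the CREATE TABLE statement with the three column mapping properties."""
    # Assumption: table_name is a trusted identifier, so no escaping is done here.
    return (
        f"CREATE TABLE {table_name}\n"
        "USING DELTA\n"
        "TBLPROPERTIES ('delta.minReaderVersion' = '2',\n"
        "               'delta.minWriterVersion' = '5',\n"
        "               'delta.columnMapping.mode' = 'name')"
    )


print(column_mapping_ddl("delta_table"))
```

On Databricks the resulting string would be passed to `spark.sql(...)`; the sketch stops at building the statement so it can be run without a cluster.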