Question: Shouldn't threejs set the w value of vertices equal to their depth?

When projecting objects onto a screen, objects that are far away from the focal point get projected further towards the center of the screen. In projective coordinates this effect is achieved by dividing a point's (x, y, z)-coordinates by its distance from the focal point, w. I've been playing around with threejs's projection matrix and it seems to me that threejs doesn't do that.
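To make that concrete, here is a minimal sketch in plain TypeScript (no three.js) of what I believe is going on. It assumes `PerspectiveCamera` builds the usual OpenGL-style perspective matrix; `perspective` and `transform` are helper names I made up for illustration, not three.js functions. With that matrix, the clip-space w of a point comes out as its depth along the view axis rather than its straight-line distance from the eye.

```typescript
// Row-major 4x4 perspective matrix in the usual OpenGL convention.
// Assumption: this mirrors what PerspectiveCamera produces; it is not three.js code.
function perspective(fovDeg: number, aspect: number, near: number, far: number): number[][] {
  const f = 1 / Math.tan((fovDeg * Math.PI) / 360);
  return [
    [f / aspect, 0, 0, 0],
    [0, f, 0, 0],
    [0, 0, (far + near) / (near - far), (2 * far * near) / (near - far)],
    [0, 0, -1, 0],
  ];
}

// Multiply a 4x4 matrix by a homogeneous column vector.
function transform(m: number[][], v: number[]): number[] {
  return m.map(row => row.reduce((sum, value, i) => sum + value * v[i], 0));
}

// A view-space point up and to the right, 9.5 units in front of the camera.
const viewPos = [3.92, 3.92, -9.5, 1];
const clip = transform(perspective(45, 1, 0.01, 100), viewPos);

const clipW = clip[3];                            // depth along the view axis
const eyeDistance = Math.hypot(3.92, 3.92, 9.5);  // straight-line distance from the eye
console.log({ clipW, eyeDistance });
```

If my assumption about the matrix holds, `clipW` is 9.5 while `eyeDistance` is roughly 11, so the divide uses the smaller of the two numbers.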

Consider the following scene:

```typescript
// src/main.ts
import { AmbientLight, BoxGeometry, DirectionalLight, Mesh,
    MeshPhongMaterial, PerspectiveCamera, Scene, WebGLRenderer } from "three";

const canvas = document.getElementById('canvas') as HTMLCanvasElement;
canvas.width = canvas.clientWidth;
canvas.height = canvas.clientHeight;

const renderer = new WebGLRenderer({
    alpha: false,
    antialias: false,
    canvas: canvas,
    depth: true
});

const scene = new Scene();

const camera = new PerspectiveCamera(45, canvas.width / canvas.height, 0.01, 100);
camera.position.set(0, 0, 10);

const light = new DirectionalLight();
light.position.set(-1, 0, 3);
scene.add(light);

const light2 = new AmbientLight();
scene.add(light2);

const cube = new Mesh(new BoxGeometry(1, 1, 1, 1), new MeshPhongMaterial({ color: `rgb(0, 125, 125)` }));
scene.add(cube);
cube.position.set(3.42, 3.42, 0);

renderer.render(scene, camera);
```

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
  </head>
  <body>
    <div id="app" style="width: 600px; height: 600px;">
      <canvas id="canvas" style="width: 100%; height: 100%;"></canvas>
    </div>
    <script type="module" src="/src/main.ts"></script>
  </body>
</html>
```

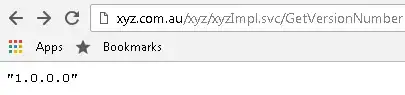

This code yields the following image:

Note how the edges of the turquoise box appear exactly parallel to the edges of the canvas. But the front-top-right vertex is further away from my eye than the front-bottom-left vertex. Shouldn't the top-right vertex be slightly distorted towards the center?

I understand that WebGL automatically divides vertices by their w coordinate in the vertex post-processing phase. Shouldn't threejs have used the depth to set w so that this distortion effect is achieved?
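To put numbers on the difference, here is a rough sketch in plain TypeScript (again no three.js) that projects the two ends of the cube's front-right vertical edge in two ways: dividing by view-space depth, which is what I assume the standard perspective matrix amounts to, and dividing by the Euclidean distance from the eye, which is what I had in mind. `divideByDepth` and `divideByDistance` are hypothetical helper names, and the vertex positions are the cube's corners expressed relative to the camera at z = 10.

```typescript
type Vec3 = [number, number, number];

const f = 1 / Math.tan((45 * Math.PI) / 360); // focal factor for a 45 degree vertical FOV
const aspect = 1;                              // 600 x 600 canvas

// NDC x/y when dividing by view-space depth (-z).
function divideByDepth([x, y, z]: Vec3): [number, number] {
  const w = -z;
  return [((f / aspect) * x) / w, (f * y) / w];
}

// NDC x/y when dividing by Euclidean distance from the eye (the alternative I imagine).
function divideByDistance([x, y, z]: Vec3): [number, number] {
  const w = Math.hypot(x, y, z);
  return [((f / aspect) * x) / w, (f * y) / w];
}

// Two ends of the cube's front-right vertical edge, in view space.
const topRight: Vec3 = [3.92, 3.92, -9.5];
const bottomRight: Vec3 = [3.92, 2.92, -9.5];

// Horizontal offset between the two ends of the edge under each divide.
const depthDx = divideByDepth(topRight)[0] - divideByDepth(bottomRight)[0];
const distanceDx = divideByDistance(topRight)[0] - divideByDistance(bottomRight)[0];
console.log({ depthDx, distanceDx });
```

Under the depth divide both ends of the edge share the same w, so the edge stays vertical (`depthDx` is zero), which matches the render. Under the distance divide the top corner lands closer to the center than the bottom one (`distanceDx` is negative), which is the distortion I was expecting to see.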

What I imagine is something like this:

```typescript
import { AmbientLight, BoxGeometry, Mesh, MeshBasicMaterial, PerspectiveCamera,
    Scene, ShaderMaterial, SpotLight, TextureLoader, Vector3, WebGLRenderer } from "three";

const canvas = document.getElementById('canvas') as HTMLCanvasElement;
canvas.width = canvas.clientWidth;
canvas.height = canvas.clientHeight;

const loader = new TextureLoader();
const texture = await loader.loadAsync('bricks.jpg');

const renderer = new WebGLRenderer({
    alpha: false,
    antialias: true,
    canvas: canvas,
    depth: true
});

const scene = new Scene();

const camera = new PerspectiveCamera(45, canvas.width / canvas.height, 0.01, 100);
camera.position.set(0, 0, -1);
camera.lookAt(new Vector3(0, 0, 0));

const light = new SpotLight();
light.position.set(-1, 0, -1);
scene.add(light);

const light2 = new AmbientLight();
scene.add(light2);

const box = new Mesh(new BoxGeometry(1, 1, 1, 50, 50, 50), new MeshBasicMaterial({
    map: texture
}));
scene.add(box);
box.position.set(-0.6, 0, 1);

const box2 = new Mesh(new BoxGeometry(1, 1, 1, 50, 50, 50), new ShaderMaterial({
    uniforms: {
        tTexture: { value: texture }
    },
    vertexShader: `
        varying vec2 vUv;

        void main() {
            vUv = uv;
            vec4 clipSpacePos = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
            clipSpacePos.w = length(clipSpacePos.xyz);
            gl_Position = clipSpacePos;
        }
    `,
    fragmentShader: `
        varying vec2 vUv;
        uniform sampler2D tTexture;

        void main() {
            gl_FragColor = texture2D(tTexture, vUv);
        }
    `,
}));
scene.add(box2);
box2.position.set(0.6, 0, 1);

renderer.render(scene, camera);
```

Which gives the following output:

Note how the left block displays strong distortion towards the edges.