I am trying to transform a dataframe with a column having the following string with structure:

{

Key = [Value 1, Value 2, Value 3, Value 4, Value 1, Value 2, Value 3, Value 4 ...],

Key = [Value 1, Value 2, Value 3, Value 4, Value 1, Value 2, Value 3, Value 4 ...],

}

That is represented with values as below:

{

100=[800,0,100,0,2.168675, 800,0,100,0,.4954798],

400=[800,0,400,0,3.987227, 800,0,400,0,.7282956],

4000=[3200,0,4000,0,3.112903, 3200,0,4000,0,1.850587]

}

How to transform the above string into the below dataframe with rows exploded?

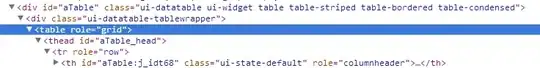

Here is the data in the data frame with the schema:

After applying the suggested solution, Unfortunately, the regex expression starting with r'.... gave an error saying "R Literals are not supported"..

Thus, I have replaced them accordingly to make it work only using the following code.

The only issue left is to remove the [ character from the Value1 Column.

If you have suggestions, appreciate it. I will select the suggested solution as accepted... Many Thanks...

import pyspark.sql.functions as F

from pyspark.sql.functions import *

data_source = "/curated/SensorMEDCurated"

df = read_delta_from_datalake (global_definitions["curated_dl_zone"], data_source)

print("Original Data:")

df.select("rms").show(1, truncate = False)

extract_all_pattern = "\'(" + "\\\\d+=\\\\[[^\\]]+\\]" + ")\'" ## \d+=\[[^\]] +\] ==> Any Integer = Any values in a bracket

df = df.withColumn("rms", F.expr(f"regexp_extract_all(rms, {extract_all_pattern}, 1)")) \

.withColumn("rms", F.explode("rms"))

df =df.withColumn("outer_value", split(df["rms"], '=').getItem(0))

df =df.withColumn("values", split(df["rms"], '=').getItem(1))

df= df.withColumn("values", F.split("values", "[\\\\s*,\\\\s*]"))

df = df.select(["outer_value"] + [F.element_at("values", i).alias(f"value{i}") for i in range(1,16)])

df.show()

Text output:

{100=[800,0,100,0,2.168675, 800,0,100,0,.4954798, 160,0,20,0,.4119049, 48,20,26,0,.1014838, 96,26,38,0,.1790891, 496,38,100,0,.1671498], 400=[800,0,400,0,3.987227, 800,0,400,0,.7282956, 210,0,105,0,.5492065, 590,105,400,0,.4716012], 4000=[3200,0,4000,0,3.112903, 3200,0,4000,0,1.850587, 82,0,102,0,.5790547, 17,102,123,0,.1790891, 408,123,633,0,.6745689, 62,633,710,0,.1910284, 405,710,1216,0,.4178745, 202,1216,1468,0,.2387854, 330,1468,1880,0,.2507247, 758,1880,2828,0,1.361077, 936,2828,3998,0,.6089029]}