I am learning PyTorch and decided to implement a very simple one-to-one linear regression, from height to weight.

I used this dataset: https://www.kaggle.com/datasets/mustafaali96/weight-height but any other would do nicely.

Let's import the libraries and load the data for females:

    import torch
    from torch.utils.data import Dataset
    from torch.utils.data import DataLoader
    import pandas as pd
    import matplotlib.pyplot as plt
    import numpy as np

    df = pd.read_csv('weight-height.csv', sep=',')
    # https://www.kaggle.com/datasets/mustafaali96/weight-height

    height_f = df[df['Gender'] == 'Female']['Height'].to_numpy()
    weight_f = df[df['Gender'] == 'Female']['Weight'].to_numpy()

    plt.scatter(height_f, weight_f, c="red", alpha=0.1)
    plt.show()

This gives a nice scatter plot of the measured females:

So far, so good.

Let's make a DataLoader:

    class Data(Dataset):
        def __init__(self, X: np.ndarray, y: np.ndarray) -> None:
            # need to convert float64 to float32, else
            # we will get the following error:
            # RuntimeError: expected scalar type Double but found Float
            self.X = torch.from_numpy(X.reshape(-1, 1).astype(np.float32))
            self.y = torch.from_numpy(y.reshape(-1, 1).astype(np.float32))
            self.len = self.X.shape[0]

        def __getitem__(self, index: int) -> tuple:
            return self.X[index], self.y[index]

        def __len__(self) -> int:
            return self.len

    traindata = Data(height_f, weight_f)

    batch_size = 500
    num_workers = 2

    trainloader = DataLoader(traindata,
                             batch_size=batch_size,
                             shuffle=True,
                             num_workers=num_workers)

...and a linear regression model...

    class linearRegression(torch.nn.Module):
        def __init__(self, inputSize, outputSize):
            super(linearRegression, self).__init__()
            self.linear = torch.nn.Linear(inputSize, outputSize)

        def forward(self, x):
            out = self.linear(x)
            return out

    model = linearRegression(1, 1)
    criterion = torch.nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.00001)

...and let's train it:

    epochs = 10

    for epoch in range(epochs):
        print(epoch)
        for i, (inputs, labels) in enumerate(trainloader):
            outputs = model(inputs)
            loss = criterion(outputs, labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

This prints 0, 1, 2, 3, 4, 5, 6, 7, 8, 9. Now let's see what our model gives:

    range_height_f = torch.linspace(height_f.min(), height_f.max(), 150)

    plt.scatter(height_f, weight_f, c="red", alpha=0.1)

    pred = model(range_height_f.reshape(-1, 1))

    plt.scatter(range_height_f, pred.detach().numpy(), c="green", alpha=0.1)

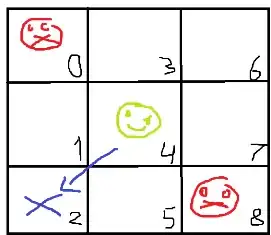

Why does it do this? Why is the slope wrong? It is consistently wrong, I might add. Whatever I change (optimizer, batch size, epochs, females to males), it gives me this very wrong slope, and I really don't get why.
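One thing worth checking, as a hypothesis rather than a confirmed diagnosis, is feature scaling. The sketch below uses plain NumPy and synthetic data (the numbers 63.7, 2.7, 6.0, -246.0 and 10.0 are assumptions chosen to look roughly like height/weight data, not values from the Kaggle file). The helper `gradient_descent` is hypothetical and does full-batch gradient descent on the mean squared error, which is the same kind of update `torch.optim.SGD` applies to `MSELoss`. It compares raw inputs with a tiny learning rate against standardized inputs.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for the height/weight data (assumed numbers, not the real dataset).
height = rng.normal(63.7, 2.7, size=2000)
weight = 6.0 * height - 246.0 + rng.normal(0.0, 10.0, size=2000)


def gradient_descent(x, y, lr, steps):
    """Full-batch gradient descent on mean squared error for y ~ a * x + b."""
    a, b = 0.0, 0.0
    for _ in range(steps):
        err = a * x + b - y
        grad_a = 2.0 * np.mean(err * x)  # d(MSE)/da
        grad_b = 2.0 * np.mean(err)      # d(MSE)/db
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


# Reference: closed-form least squares (slope first, then intercept).
ref_slope, ref_intercept = np.polyfit(height, weight, 1)

# Raw inputs with a small learning rate, similar in spirit to lr=0.00001 in the post.
raw_slope, raw_intercept = gradient_descent(height, weight, lr=1e-5, steps=100)

# Standardized inputs and targets allow a much larger learning rate.
h_mean, h_std = height.mean(), height.std()
w_mean, w_std = weight.mean(), weight.std()
a, b = gradient_descent((height - h_mean) / h_std,
                        (weight - w_mean) / w_std,
                        lr=0.1, steps=100)

# Map the standardized fit back to the original units.
std_slope = a * w_std / h_std
std_intercept = w_mean + b * w_std - std_slope * h_mean

print("closed form :", ref_slope, ref_intercept)
print("raw inputs  :", raw_slope, raw_intercept)
print("standardized:", std_slope, std_intercept)
```

On this synthetic data, the raw-input run leaves the intercept close to its starting value, so the fitted line is forced to pass near the origin and the slope ends up far from the least-squares one. The standardized run recovers the closed-form slope. Whether the same thing happens with the real data would need to be checked by printing `model.linear.weight` and `model.linear.bias` after training.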

Edit 1: Added the loss; here is the plot:

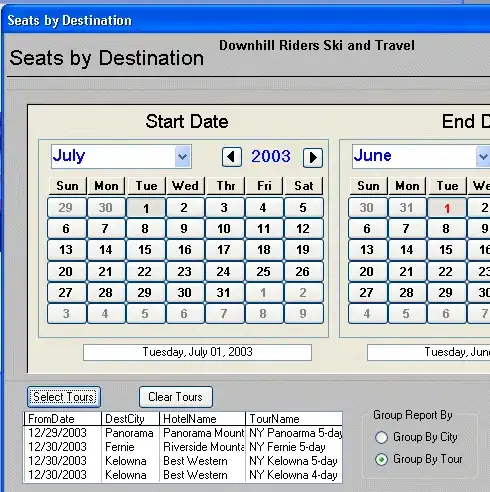

Edit 2: I decided to explore a bit and fit the regression with scikit-learn:

    from sklearn.model_selection import train_test_split
    from sklearn.linear_model import LinearRegression

    X_train, X_test, y_train, y_test = train_test_split(height_f, weight_f, test_size=0.25)

    regr = LinearRegression()
    regr.fit(X_train.reshape(-1, 1), y_train)

    plt.scatter(height_f, weight_f, c="red", alpha=0.1)

    range_pred = regr.predict(range_height_f.reshape(-1, 1))
    range_pred

    plt.scatter(range_height_f, range_pred, c="green", alpha=0.1)

This gives the following regression, which looks nice:

    t = torch.from_numpy(height_f.astype(np.float32))
    p = regr.predict(t.reshape(-1, 1))
    p = torch.from_numpy(p).reshape(-1, 1)
    w = torch.from_numpy(weight_f.astype(np.float32)).reshape(-1, 1)

    print(criterion(p, w).item())

However, in this case the criterion is 100.65161998527695, whereas PyTorch converges to about 210:

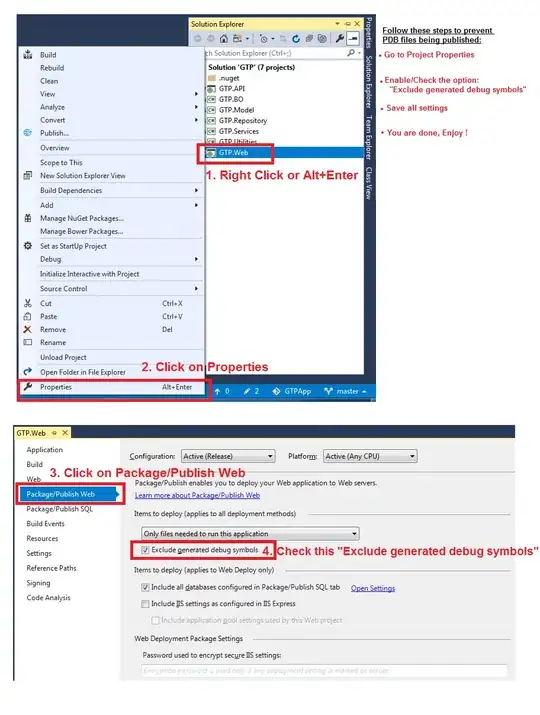
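As a cross-check on loss values like these, the mean squared error of a least-squares line can be computed directly. The sketch below again uses synthetic data (all numeric parameters are assumptions, not the Kaggle values). It compares the MSE of the closed-form fit against the identity MSE = Var(y) * (1 - r^2), and against the best line that is forced through the origin, which is an illustrative comparison and not something taken from the plots above.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic stand-in for the height/weight data (assumed numbers, not the real dataset).
height = rng.normal(63.7, 2.7, size=5000)
weight = 6.0 * height - 246.0 + rng.normal(0.0, 10.0, size=5000)

# Closed-form least squares with an intercept.
slope, intercept = np.polyfit(height, weight, 1)
mse_fit = np.mean((slope * height + intercept - weight) ** 2)

# For least squares with an intercept, the MSE equals Var(y) * (1 - r^2),
# where r is the Pearson correlation and Var uses the population convention.
r = np.corrcoef(height, weight)[0, 1]
mse_identity = np.var(weight) * (1.0 - r ** 2)

# Best line with the intercept pinned at zero, for comparison.
slope_origin = np.mean(height * weight) / np.mean(height ** 2)
mse_origin = np.mean((slope_origin * height - weight) ** 2)

print("MSE, least squares      :", mse_fit)
print("MSE, Var(y) * (1 - r^2) :", mse_identity)
print("MSE, line through origin:", mse_origin)
```

Any gradient-based fit of the same model cannot go below the least-squares MSE on the same data, so a loss that plateaus well above it suggests the optimizer has not reached the optimum, rather than that the model is wrong.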

Edit 3: Changed the optimizer from SGD to Adam:

    # optimizer = torch.optim.SGD(model.parameters(), lr=0.00001)
    optimizer = torch.optim.Adam(model.parameters(), lr=0.5)

The lr is larger in this case, which yields an interesting but consistent result.

Here is the loss:

And here is the proposed regression:

And here is the log of the loss criterion for the Adam optimizer as well: