I'm having trouble understanding the purpose of causal convolutions. Suppose I'm doing time-series classification using a convolutional network with one layer, kernel=2, stride=1, and no dilation. Isn't a causal convolution then the same thing as an ordinary (unpadded) convolution with the output shifted back by 1?
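To make the one-layer case concrete, here is a minimal NumPy sketch of what I mean. The helper names (`conv1d_valid`, `conv1d_causal`), the toy input, and the toy weights are all made up for illustration, and I'm assuming "causal" means left-padding the input with `kernel - 1` zeros.

```python
import numpy as np

def conv1d_valid(x, w):
    # Unpadded cross-correlation: out[i] = sum_j w[j] * x[i + j]
    k = len(w)
    return np.array([np.dot(w, x[i:i + k]) for i in range(len(x) - k + 1)])

def conv1d_causal(x, w):
    # Assumption: causal = left-pad with k-1 zeros, so out[t] only uses x[t-k+1..t]
    k = len(w)
    return conv1d_valid(np.concatenate([np.zeros(k - 1), x]), w)

x = np.array([1.0, 2.0, 4.0, 8.0, 16.0])  # toy input (hypothetical)
w = np.array([0.5, -1.0])                 # toy kernel, kernel=2 (hypothetical)

valid = conv1d_valid(x, w)    # 4 outputs, valid[i] uses x[i] and x[i+1]
causal = conv1d_causal(x, w)  # 5 outputs, causal[t] uses x[t-1] and x[t]

# Apart from the first (zero-padded) step, the causal output is the
# unpadded output shifted by one position.
shift_matches = np.allclose(causal[1:], valid)
```

In this toy setup the two outputs carry the same values; the only differences are the index they are assigned to and the extra zero-padded first step.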

For larger networks, it would be a little more involved: you'd have to take the parameters of all the layers into account to get the resulting receptive field and apply the proper output shift. Still, it seems to me that if there is some leak of future information, you could always account for it by shifting the output back.
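As a rough sketch of that bookkeeping, assuming every layer is an unpadded convolution with stride 1 and that dilation follows the common convention where 1 means "no dilation", the shift would just be the sum of `(kernel - 1) * dilation` over the layers. The function name and the example stack below are hypothetical.

```python
def output_shift(layers):
    # layers: list of (kernel_size, dilation) pairs, all assumed stride 1 and unpadded.
    # Output index i then depends on inputs x[i] .. x[i + shift].
    return sum((k - 1) * d for k, d in layers)

layers = [(2, 1), (2, 2), (2, 4)]  # hypothetical stack with growing dilation
shift = output_shift(layers)       # number of steps to shift the targets by
receptive_field = shift + 1        # number of inputs each output sees
```

With strides other than 1 the calculation changes, so this only covers the simple case I'm asking about.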

For example, if the output at time step $t_2$ of a non-causal CNN sees $x_0, x_1, x_2, x_3, x_4$, then you'd pair it with the target associated with $t_4$, i.e. $y_4$.

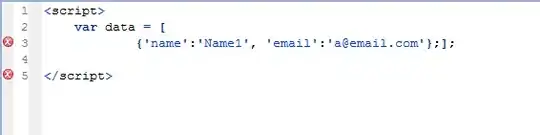
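The same example can be checked numerically. This is only a sketch under my own assumptions: a single centered ("same"-padded) layer with kernel=5, made-up weights, and a hypothetical helper `conv1d_same`. It perturbs each input in turn and records which ones change the output at index 2.

```python
import numpy as np

def conv1d_same(x, w):
    # Centered cross-correlation, zero-padded so len(out) == len(x); odd kernel assumed
    k = len(w)
    xp = np.pad(x, k // 2)
    return np.array([np.dot(w, xp[t:t + k]) for t in range(len(x))])

x = np.arange(8, dtype=float)            # toy input (hypothetical)
w = np.array([1.0, 2.0, 3.0, 4.0, 5.0])  # toy kernel, kernel=5 (hypothetical)

base = conv1d_same(x, w)

def changes_output_2(i):
    # Does bumping x[i] change the output at index 2?
    x2 = x.copy()
    x2[i] += 1.0
    return not np.isclose(conv1d_same(x2, w)[2], base[2])

seen = [i for i in range(len(x)) if changes_output_2(i)]
latest_seen = max(seen)  # index of the target I'd pair with output 2
```

Here the output at index 2 depends on inputs 0 through 4, so under my reasoning it would be trained against $y_4$ rather than $y_2$.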

Edit: I've seen diagrams for causal CNNs where all the arrows are right-aligned. I get that this is meant to illustrate that $y_t$ aligns with $x_t$, but couldn't you just as easily draw them like this: