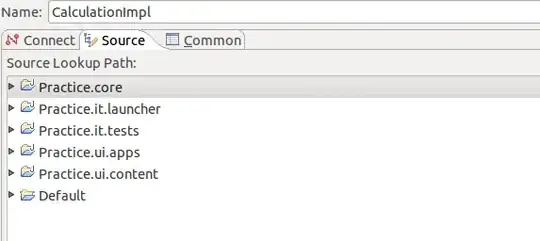

Inspired by this question and this answer (which isn't very solid), I realized that I often convert color images that are almost grayscale (usually color scans of grayscale originals) to grayscale. So I wrote a function that measures a kind of distance between a color image and its grayscale version:

import numpy as np

from PIL import Image, ImageChops, ImageOps, ImageStat

def distance_from_grey(img): # img must be a Pillow Image object in RGB mode

img_diff=ImageChops.difference(img, ImageOps.grayscale(img).convert('RGB'))

return np.array(img_diff.getdata()).mean()

img = Image.open('test.jpg')

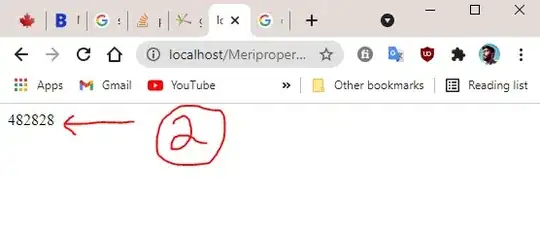

print(distance_from_grey(img))

The number obtained is the mean absolute difference, taken over all pixels and all three channels, between each RGB value and the grayscale value of the same pixel. It is zero for a perfect grayscale image.
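As a quick sanity check of that claim, here is a minimal sketch that runs the same metric on two synthetic images. The solid-color test images and the variable names `grey`, `red`, `grey_distance` and `red_distance` are made up for illustration and are not part of my real data; the function is repeated so the snippet runs on its own.

```python
import numpy as np
from PIL import Image, ImageChops, ImageOps

def distance_from_grey(img):
    # Same metric as in the question: mean absolute per-channel difference
    # between an RGB image and its grayscale version.
    img_diff = ImageChops.difference(img, ImageOps.grayscale(img).convert('RGB'))
    return np.asarray(img_diff).mean()

# Hypothetical test images: one neutral grey, one fully saturated red.
grey = Image.new('RGB', (8, 8), (128, 128, 128))
red = Image.new('RGB', (8, 8), (255, 0, 0))

grey_distance = distance_from_grey(grey)
red_distance = distance_from_grey(red)

print(grey_distance)  # expected to be zero for a neutral grey image
print(red_distance)   # expected to be clearly greater than zero
```

Since the result is an average over the whole image, a small but strongly colored region (a stamp on a scanned page, for example) would presumably raise the value only slightly, which is part of why I am unsure about choosing a threshold.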

My questions for imaging experts are:

- Is this approach valid, or are there better ones?

- Below what distance can an image be safely converted to grayscale without checking it visually?