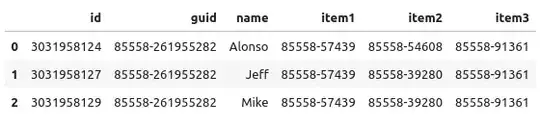

Say I have 2 dataframes:

df1

| | id | guid | name | item1 | item2 | item3 | item4 | item5 | item6 | item7 | item8 | item9 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 3031958124 | 85558-261955282 | Alonso | 85558-57439 | 85558-54608 | 85558-91361 | 85558-40647 | 85558-41305 | 85558-79979 | 85558-33076 | 85558-89956 | 85558-12554 |
| 1 | 3031958127 | 85558-261955282 | Jeff | 85558-57439 | 85558-39280 | 85558-91361 | 85558-55987 | 85558-83083 | 85558-79979 | 85558-33076 | 85558-41872 | 85558-12554 |
| 2 | 3031958129 | 85558-261955282 | Mike | 85558-57439 | 85558-39280 | 85558-91361 | 85558-55987 | 85558-40647 | 85558-79979 | 85558-33076 | 85558-88297 | 85558-12534 |

...

df2 where item_lookup is the index

| item_lookup | item_type | cost | value | target |
|---|---|---|---|---|
| 85558-57439 | item1 | 9500 | 25.1 | 1.9 |
| 85558-54608 | item2 | 8000 | 18.7 | 0.0 |
| 85558-91361 | item3 | 7000 | 16.5 | 0.9 |

...

For each row of df1, I want to look up item1 through item9 in df2 (by item_lookup), sum the cost, value, and target of those nine items, and store the three totals as new columns on df1.

So the result should look like: df1

| | id | guid | name | item1 | item2 | item3 | item4 | item5 | item6 | item7 | item8 | item9 | cost | value | target |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 3031958124 | 85558-261955282 | Alonso | 85558-57439 | 85558-54608 | 85558-91361 | 85558-40647 | 85558-41305 | 85558-79979 | 85558-33076 | 85558-89956 | 85558-12554 | 58000 | 192.5 | 38.3 |
| 1 | 3031958127 | 85558-261955282 | Jeff | 85558-57439 | 85558-39280 | 85558-91361 | 85558-55987 | 85558-83083 | 85558-79979 | 85558-33076 | 85558-41872 | 85558-12554 | 59400 | 183.2 | 87.7 |
| 2 | 3031958129 | 85558-261955282 | Mike | 85558-57439 | 85558-39280 | 85558-91361 | 85558-55987 | 85558-40647 | 85558-79979 | 85558-33076 | 85558-88297 | 85558-12534 | 58000 | 101.5 | 18.1 |

...

I've tried following similar solutions online that use .map, but those examples only handle a single column, whereas I need to sum the looked-up values across all 9 item columns.
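To make the goal concrete, here is a minimal sketch of how the single-column .map idea might extend to several columns: map each item column through the matching df2 column, then sum across the row. It uses a cut-down toy df1 with only three item columns and the three df2 rows shown above. The second toy row and the ID 85558-00000 are made up for illustration, and the sketch assumes item_lookup values are unique in df2.

```python
import pandas as pd

# Lookup table: the three df2 rows shown in the question.
df2 = pd.DataFrame(
    {
        "item_type": ["item1", "item2", "item3"],
        "cost": [9500, 8000, 7000],
        "value": [25.1, 18.7, 16.5],
        "target": [1.9, 0.0, 0.9],
    },
    index=pd.Index(
        ["85558-57439", "85558-54608", "85558-91361"], name="item_lookup"
    ),
)

# Toy df1 with three item columns instead of nine.
# "85558-00000" is a hypothetical ID that is absent from df2.
df1 = pd.DataFrame(
    {
        "name": ["Alonso", "Toy"],
        "item1": ["85558-57439", "85558-57439"],
        "item2": ["85558-54608", "85558-00000"],
        "item3": ["85558-91361", "85558-91361"],
    }
)

# Collect the item columns once, before any new columns are added.
item_cols = [c for c in df1.columns if c.startswith("item")]

for metric in ["cost", "value", "target"]:
    # Map every item column through df2[metric] (lookup by index),
    # then add the looked-up numbers across each row.
    looked_up = df1[item_cols].apply(lambda col: col.map(df2[metric]))
    df1[metric] = looked_up.sum(axis=1)

print(df1[["name", "cost", "value", "target"]])
```

Note that sum(axis=1) skips missing values by default, so an ID that is not found in df2 contributes nothing to the total rather than raising an error. If a missing ID should be treated as a problem, checking looked_up.isna() before summing is one option.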