Unable to list all Azure VM images using Python code

I can only list images for a specific location, offer, and so on. I need to list all VM images in a Python script.

There is no list method in the Python SDK that returns all virtual machine images without filters such as offer or publisher. When calling virtual_machine_images.list(), you must pass location, publisher_name, offer, and skus. Otherwise, it raises an error because required parameters are missing.

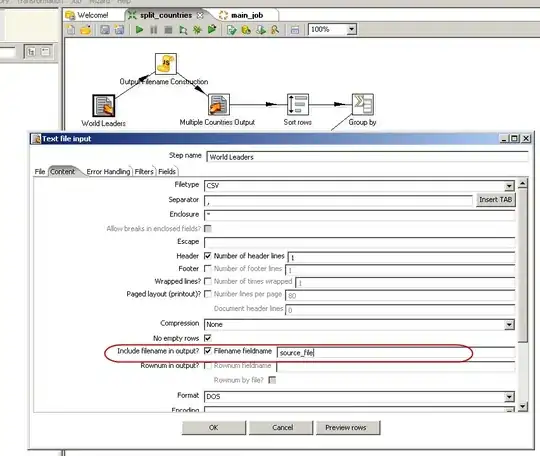

Supported list() Methods:

As a workaround, I was able to get results using the script below:

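    from azure.identity import DefaultAzureCredential
    from azure.mgmt.compute import ComputeManagementClient

    # DefaultAzureCredential uses whichever credential source is configured
    credentials = DefaultAzureCredential()
    subscription_ID = '<subscriptionID>'
    client = ComputeManagementClient(credentials, subscription_ID)

    # list() requires publisher_name, offer and skus in addition to location;
    # replace the placeholder values below with real filter values
    vmlist = client.virtual_machine_images.list(
        location="eastus",
        publisher_name="<publisher>",
        offer="<offer>",
        skus="<sku>",
    )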

No virtual machine images in my environment match the specified filters, so the script ran without errors and returned an empty result.

Output:

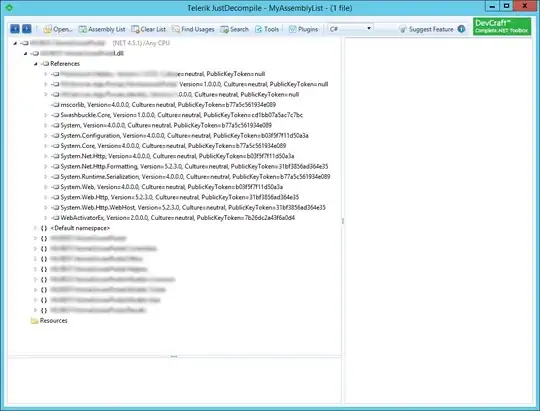

DefaultAzureCredential Auth:

Service Principal Credentials Authentication:

Note: Register a new application under Azure Active Directory to get the client_ID and client_secret details.
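For the service principal route, a minimal sketch could look like the following. It assumes the values from the app registration are exported as the environment variables AZURE_TENANT_ID, AZURE_CLIENT_ID and AZURE_CLIENT_SECRET (the tenant ID is needed in addition to the two values mentioned in the note). The helper names `service_principal_settings` and `build_compute_client` are hypothetical and only used for illustration.

```python
import os


def service_principal_settings(env=os.environ):
    """Collect the three values a service principal login needs."""
    # Assumption: the app registration values are stored in these variables.
    names = {
        "tenant_id": "AZURE_TENANT_ID",
        "client_id": "AZURE_CLIENT_ID",
        "client_secret": "AZURE_CLIENT_SECRET",
    }
    missing = [var for var in names.values() if not env.get(var)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {key: env[var] for key, var in names.items()}


def build_compute_client(subscription_id, env=os.environ):
    """Create a ComputeManagementClient authenticated as a service principal."""
    # Imported lazily so the settings helper works without the Azure SDK installed.
    from azure.identity import ClientSecretCredential
    from azure.mgmt.compute import ComputeManagementClient

    credential = ClientSecretCredential(**service_principal_settings(env))
    return ComputeManagementClient(credential, subscription_id)
```

Calling `build_compute_client('<subscriptionID>')` should then give a client that can be used in the same way as the one created with DefaultAzureCredential. Keeping the secret in the environment rather than in the script avoids committing it by accident.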

Refer to the SO answer by @Peter Pan.

As Jahnavi pointed out, there is no way to list all images without specifying the corresponding filters. Not all images are available in all regions and for all customers. However, if you want to list all images, you could iterate through the corresponding lists by first listing publishers, then offers, then SKUs, and finally images. There are A LOT of images, though, so this will take A LOT of time - and I strongly recommend filtering for at least one of the aforementioned criteria.

The code below should list all images in a given region and a given subscription. Note that it uses the AzureCliCredential class from the Azure Identity library. This requires you to be logged in to Azure through the Azure CLI and should only be used for testing. You can pick another appropriate authentication class from the library if desired.

    from azure.identity import AzureCliCredential
    from azure.mgmt.compute import ComputeManagementClient

    credential = AzureCliCredential()
    subscription_id = "{your-subscription-id}"
    my_location = "{your-region}"
    compute_client = ComputeManagementClient(credential=credential, subscription_id=subscription_id)

    results = []

    # Get all Publishers in a given location
    publishers = compute_client.virtual_machine_images.list_publishers(location=my_location)
    for publisher in publishers:
        offers = compute_client.virtual_machine_images.list_offers(location=my_location, publisher_name=publisher.name)
        for offer in offers:
            skus = compute_client.virtual_machine_images.list_skus(location=my_location, publisher_name=publisher.name, offer=offer.name)
            for sku in skus:
                images = compute_client.virtual_machine_images.list(location=my_location, publisher_name=publisher.name, offer=offer.name, skus=sku.name)
                for image in images:
                    image_dict = {
                        'publisherName': publisher.name,
                        'offerName': offer.name,
                        'skuName': sku.name,
                        'imageName': image.name
                    }
                    results.append(image_dict)

This will leave you with a list of dictionaries that could be used for further processing. For example, you could load it into a Pandas DataFrame:

    import pandas as pd

    df = pd.DataFrame(results)

Potential output:

           publisherName  offerName       skuName                                         imageName
    ...
    17101  f5-networks    f5-big-ip-good  f5-bigip-virtual-edition-10g-good-hourly-po-f5  16.1.202000
    17102  f5-networks    f5-big-ip-good  f5-bigip-virtual-edition-10g-good-hourly-po-f5  16.1.300000
    17103  f5-networks    f5-big-ip-good  f5-bigip-virtual-edition-10g-good-hourly-po-f5  16.1.301000
    ...

But really, this is only food for thought, as it will be pretty bad performance-wise and will take a lot of time - and it is probably not intended to be used this way. The better option would be to filter for certain publishers first - just sayin'. :-)
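To make the "filter for certain publishers first" idea concrete, here is a rough sketch of the same nested loop wrapped in a generator that accepts an optional list of publisher names. The function name `iter_images` and its `publishers` parameter are made up for this example. It only relies on the list_publishers, list_offers, list_skus and list calls already used above.

```python
def iter_images(client, location, publishers=None):
    """Yield one dict per image, optionally limited to the given publishers.

    `client` is expected to expose `virtual_machine_images` with the
    list_publishers / list_offers / list_skus / list methods used above.
    """
    vmi = client.virtual_machine_images
    if publishers is None:
        # No filter given: fall back to every publisher in the region (slow).
        publishers = [p.name for p in vmi.list_publishers(location=location)]

    for publisher in publishers:
        offers = vmi.list_offers(location=location, publisher_name=publisher)
        for offer in offers:
            skus = vmi.list_skus(location=location, publisher_name=publisher, offer=offer.name)
            for sku in skus:
                images = vmi.list(location=location, publisher_name=publisher,
                                  offer=offer.name, skus=sku.name)
                for image in images:
                    yield {
                        'publisherName': publisher,
                        'offerName': offer.name,
                        'skuName': sku.name,
                        'imageName': image.name,
                    }
```

With the client from the code above, something like `results = list(iter_images(compute_client, my_location, publishers=["Canonical"]))` would only walk the offers of that one publisher ("Canonical" is just an example value). Because it is a generator, you can also stop early instead of waiting for the full traversal.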