I have a document that I am updating in MongoDB (PyMongo), like so:

```python
collec.replace_one({"_id": id}, json.loads(json.dumps(data, cls=CustomJSONEncoder)), upsert=True)
```

But it raises an error like this:

```
{DocumentTooLarge}'update' command document too large
```

However, when I run:

```python
sys.getsizeof(json.loads(json.dumps(data, cls=CustomJSONEncoder)))
```

It returns 232, which should definitely not exceed MongoDB's 16 MB limit per document, right?

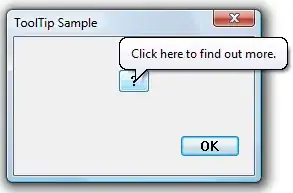

UPDATE: Added an image showing the evaluation of `getsizeof`.
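For reference, a minimal sketch (standard library only, CPython assumed) of why `sys.getsizeof` can't reveal how big a document is: it is shallow, so for a dict it counts only the dict object itself, not the keys and values it refers to. The `payload` dicts are made-up test data, and the UTF-8 length of the JSON text is only a rough stand-in for the BSON size MongoDB actually checks (PyMongo's `bson.encode` would give the exact figure).

```python
import json
import sys

# Two dicts with the same single key; only the size of the value differs.
small = {"payload": "x"}
large = {"payload": "x" * 20_000_000}  # roughly 20 MB of string data

# sys.getsizeof is shallow: for a dict it reports the size of the dict
# object (its hash table), not the size of the objects it references.
shallow_small = sys.getsizeof(small)
shallow_large = sys.getsizeof(large)

# The serialized form reflects how much data would actually be sent.
json_small = len(json.dumps(small).encode("utf-8"))
json_large = len(json.dumps(large).encode("utf-8"))

print(shallow_small, shallow_large)  # identical on CPython
print(json_small, json_large)        # differ by about 20 million bytes
```

So a small `getsizeof` result like 232 says nothing about whether the document fits under the 16 MB limit.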

UPDATE 2: After some more debugging, it turns out the data really was exceeding the 16 MB limit; `replace_one` just wasn't raising a detailed error. So I tried `insert_one` instead:

```python
collec.insert_one(json.loads(json.dumps(data, cls=CustomJSONEncoder)))
```

This then raised a more specific error saying:

One thing I am still confused about, though, is `sys.getsizeof` returning 232 bytes. That shouldn't be the case, right?
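Since `replace_one` only reported a generic `DocumentTooLarge`, one way to get a clearer message is to measure the encoded size before writing. This is a minimal sketch, assuming PyMongo 3.9+ (which provides `bson.encode`); `MAX_BSON_SIZE`, `encoded_size` and `check_document_size` are hypothetical helper names, and the JSON fallback used when `bson` is not importable is only an approximation of the real BSON size.

```python
import json

# MongoDB's maximum BSON document size is 16 MiB.
MAX_BSON_SIZE = 16 * 1024 * 1024

try:
    # bson.encode ships with PyMongo 3.9+ and returns the exact BSON bytes.
    from bson import encode as _bson_encode
except ImportError:
    _bson_encode = None


def encoded_size(doc: dict) -> int:
    """Hypothetical helper: serialized size of `doc` in bytes.

    Exact BSON size when PyMongo's bson package is importable; otherwise the
    UTF-8 length of the JSON text as a rough approximation.
    """
    if _bson_encode is not None:
        return len(_bson_encode(doc))
    return len(json.dumps(doc).encode("utf-8"))


def check_document_size(doc: dict, limit: int = MAX_BSON_SIZE) -> int:
    """Hypothetical helper: return the size, or raise ValueError if over `limit`."""
    size = encoded_size(doc)
    if size > limit:
        raise ValueError(
            f"document is {size} bytes, exceeding the {limit}-byte limit"
        )
    return size
```

Calling `check_document_size(doc)` right before `collec.replace_one(...)` would then fail with the measured byte count rather than the generic `DocumentTooLarge`. Note that the server-side limit applies to the whole document, so a document just under 16 MiB can still be close to the edge once command overhead is added.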

Feel free to close this if it is not useful.