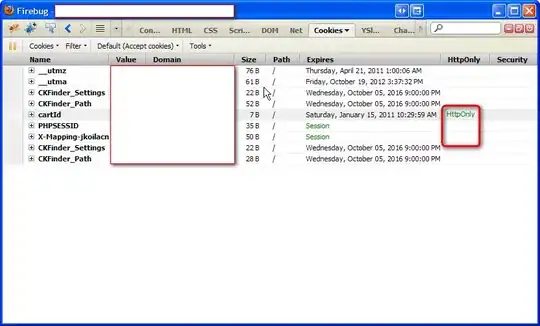

I have a list of domains that I would like to loop over and screenshot using Selenium. However, the cookie consent banner covers part of each page, so the full page is not visible in the screenshot. Most of the sites use different consent buttons. What is the best way of accepting them? Or is there another method that would achieve the same result?

URLs for reference: docjournals.com, elcomercio.com, maxim.com, wattpad.com, history10.com
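
For illustration, here is a minimal sketch of the kind of loop described, with one possible way of dismissing the banner: clicking the first button or link whose text contains a common consent word. The label list, the helper names (`to_url`, `screenshot_name`, `consent_xpath`, `capture_all`), the `screenshots` output folder, and the use of Chrome are all assumptions for the example, not something confirmed to work on every site listed.

```python
from pathlib import Path

DOMAINS = ["docjournals.com", "elcomercio.com", "maxim.com",
           "wattpad.com", "history10.com"]

# Assumed button labels; real sites vary and may need more entries.
# Labels must not contain single quotes (they are embedded in the XPath).
CONSENT_LABELS = ["accept", "agree", "aceptar", "got it"]


def to_url(domain: str) -> str:
    """Prefix a bare domain with https:// unless it already has a scheme."""
    if domain.startswith(("http://", "https://")):
        return domain
    return f"https://{domain}"


def screenshot_name(domain: str) -> str:
    """Turn a bare domain such as 'maxim.com' into a file name."""
    return domain.replace(".", "_") + ".png"


def consent_xpath(labels) -> str:
    """Build an XPath matching <button> or <a> elements whose text
    contains any of the labels, compared case-insensitively."""
    lower = ("translate(normalize-space(.), "
             "'ABCDEFGHIJKLMNOPQRSTUVWXYZ', 'abcdefghijklmnopqrstuvwxyz')")
    conditions = " or ".join(
        f"contains({lower}, '{label.lower()}')" for label in labels
    )
    return f"//button[{conditions}] | //a[{conditions}]"


def capture_all(domains, out_dir="screenshots", wait_seconds=5):
    """Visit each domain, try to click a consent button, save a screenshot."""
    # Imported here so the helpers above can be used without Selenium.
    from selenium import webdriver
    from selenium.common.exceptions import WebDriverException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    Path(out_dir).mkdir(exist_ok=True)
    driver = webdriver.Chrome()
    try:
        for domain in domains:
            driver.get(to_url(domain))
            try:
                locator = (By.XPATH, consent_xpath(CONSENT_LABELS))
                button = WebDriverWait(driver, wait_seconds).until(
                    EC.element_to_be_clickable(locator)
                )
                button.click()
            except WebDriverException:
                # No matching button (timeout) or the click failed;
                # take the screenshot anyway.
                pass
            driver.save_screenshot(str(Path(out_dir) / screenshot_name(domain)))
    finally:
        driver.quit()
```

Calling `capture_all(DOMAINS)` would run the loop. Text matching like this is only a heuristic: it can miss banners rendered inside an iframe (which would need `driver.switch_to.frame(...)` first) and it may click an unrelated link that happens to contain one of the words.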