I'm trying to run an external (non-Python) program in parallel with multithreading, using this code:

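```python
import multiprocessing.pool
from typing import Callable


def handle_multiprocessing_pool(num_threads: int, partial: Callable, variable: list) -> list:
    # TqdmBar: custom progress-bar helper (definition not shown).
    progress_bar = TqdmBar(len(variable))

    with multiprocessing.pool.ThreadPool(num_threads) as pool:
        # Submit one asynchronous job per input value.
        jobs = [
            pool.apply_async(partial, (value,), callback=progress_bar.update_progress_bar)
            for value in variable
        ]
        pool.close()

        # job.get() blocks until that job is done; results keep input order.
        processing_results = []
        for job in jobs:
            processing_results.append(job.get())

        pool.join()

    return processing_results
```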

The `Callable` passed in here loads an external program (with a C++ back end), runs it, and then extracts some data. The external program's GUI has an option to run cases in parallel, with each case assigned to its own thread, so I assumed it would be best to use multithreading rather than multiprocessing.
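If the callable launches the external program as a separate OS process (an assumption; the question does not say whether it uses a command-line call or in-process bindings), each pool thread mostly just waits for its child process, and waiting does not hold the GIL. A minimal sketch of that pattern, where `run_case`, `run_all`, and the use of a child Python interpreter as a stand-in for the real program are all hypothetical:

```python
import subprocess
import sys
from multiprocessing.pool import ThreadPool


def run_case(value: int) -> str:
    # Hypothetical stand-in for the real external program: start a child
    # Python interpreter that prints value ** 2. Each call is a separate OS
    # process; the calling thread just waits for it, and the GIL is released
    # while it is blocked inside subprocess.run.
    completed = subprocess.run(
        [sys.executable, "-c", f"print({value} ** 2)"],
        capture_output=True,
        text=True,
        check=True,
    )
    # "Extract some data": here, just parse the program's stdout.
    return completed.stdout.strip()


def run_all(values: list[int], num_threads: int) -> list[str]:
    # pool.map returns results in input order, like collecting job.get()
    # for each job in submission order.
    with ThreadPool(num_threads) as pool:
        return pool.map(run_case, values)


if __name__ == "__main__":
    print(run_all([1, 2, 3, 4], num_threads=2))  # ['1', '4', '9', '16']
```

If the callable instead drives the C++ back end inside the Python process through bindings that keep the GIL held, the threads would be serialized; which case applies here is not stated.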

The script runs without errors, but I can't manage to use the CPU power of our machine efficiently. The machine has 64 cores with 2 threads each (128 logical CPUs). Here are my findings on CPU utilization:
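With 64 cores and 2 hardware threads per core, the OS sees 128 logical CPUs, so the number of cases that can run at once is roughly the logical CPU count divided by the threads each case uses (e.g. 128 // 2 = 64). A small sketch for checking this from Python; `usable_logical_cpus` and `pool_size` are hypothetical helper names:

```python
import os


def usable_logical_cpus() -> int:
    # On Linux, sched_getaffinity(0) returns the set of CPUs this process is
    # allowed to run on, which can be smaller than the machine total.
    # Elsewhere, fall back to os.cpu_count(), which counts logical CPUs
    # (hardware threads), not physical cores.
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def pool_size(threads_per_case: int, logical_cpus: int) -> int:
    # Number of cases to run concurrently so that
    # cases * threads_per_case does not exceed the logical CPU count.
    return max(1, logical_cpus // threads_per_case)


if __name__ == "__main__":
    cpus = usable_logical_cpus()
    print(cpus, pool_size(threads_per_case=2, logical_cpus=cpus))
```

If the affinity count printed here is well below 128, the process is restricted to a subset of the CPUs regardless of how many pool threads it starts.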

When I run the cases from the GUI, it manages to utilize 100% CPU power.

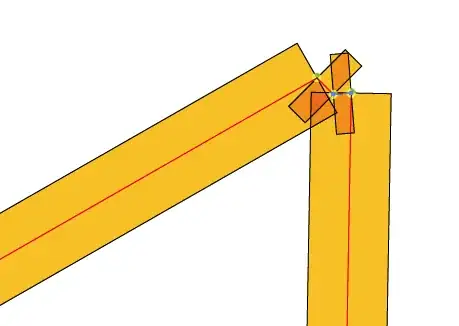

When I run the script on 120 threads, it seems like only half of the threads are properly engaged:

The external program allows me to run on two threads, however if I run 60 parallel processes on 2 threads each, the utilisation looks similar.

When I run two similar scripts on 60 threads each, the full CPU power is properly used:
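Running two scripts on 60 threads each amounts to two Python processes, each with its own thread pool and its own GIL. A hedged sketch of reproducing that layout from a single script: a process pool where every worker runs a `ThreadPool` over its share of the inputs. The names `split_evenly`, `run_chunk`, `run_hybrid`, and `square` are hypothetical, and the per-case callable is assumed to be a picklable module-level function:

```python
from functools import partial
from multiprocessing import Pool
from multiprocessing.pool import ThreadPool
from typing import Callable


def split_evenly(values: list, parts: int) -> list[list]:
    # Round-robin split: part k gets values[k], values[k + parts], ...
    return [values[k::parts] for k in range(parts)]


def run_chunk(func: Callable, threads: int, chunk: list) -> list:
    # What one copy of the original script does: a thread pool
    # inside the current process.
    with ThreadPool(threads) as pool:
        return pool.map(func, chunk)


def run_hybrid(func: Callable, values: list, processes: int, threads: int) -> list:
    # Each worker process runs its own ThreadPool, so threads in different
    # processes do not share a GIL. func must be picklable for Pool.
    chunks = split_evenly(values, processes)
    with Pool(processes) as pool:
        per_chunk = pool.map(partial(run_chunk, func, threads), chunks)
    # Undo the round-robin split so results line up with `values`.
    results = [None] * len(values)
    for k, chunk_results in enumerate(per_chunk):
        results[k::processes] = chunk_results
    return results


def square(x: int) -> int:
    # Hypothetical stand-in for the real per-case callable.
    return x * x


if __name__ == "__main__":
    # Same layout as two scripts with 60 threads each.
    print(run_hybrid(square, list(range(10)), processes=2, threads=60))
```

The `if __name__ == "__main__":` guard matters here because with the `spawn` start method each worker re-imports the main module.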

I have read about the Global Interpreter Lock (GIL) in Python, but the multiprocessing package should circumvent it, right? Before test #4, I assumed that for some reason the processes were still running one per physical core and the two threads on each core could not run concurrently (as suggested in multiprocessing.Pool vs multiprocessing.pool.ThreadPool), but the behavior in #4 in particular puzzles me.
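On the GIL question: `multiprocessing.pool.ThreadPool` runs its workers as threads inside one interpreter, so Python code executed by the callable is still serialized by the GIL; `multiprocessing.Pool` exposes the same interface but uses separate worker processes, each with its own GIL. A minimal timing sketch, where `cpu_bound` is a hypothetical pure-Python workload and `run_pool` a hypothetical helper; timings depend on the machine, so no output is shown:

```python
import time
from functools import partial
from multiprocessing import Pool
from multiprocessing.pool import ThreadPool
from typing import Callable


def cpu_bound(n: int, scale: int = 1) -> int:
    # Pure-Python loop: it holds the GIL the whole time, so threads of one
    # interpreter cannot execute it in parallel.
    total = 0
    for i in range(n):
        total += (i * scale) % 7
    return total


def run_pool(pool_cls, num_workers: int, func: Callable, values: list) -> list:
    # ThreadPool -> worker threads sharing one GIL.
    # Pool       -> worker processes with separate GILs; func and values
    #               must be picklable (a partial of a module-level function is).
    with pool_cls(num_workers) as pool:
        return pool.map(func, values)


if __name__ == "__main__":
    work = partial(cpu_bound, scale=3)
    values = [1_000_000] * 8
    for pool_cls in (ThreadPool, Pool):
        start = time.perf_counter()
        run_pool(pool_cls, 4, work, values)
        print(pool_cls.__name__, f"{time.perf_counter() - start:.2f} s")
```

Threads are only expected to scale when the callable spends its time outside the interpreter, for example waiting on a child process or inside C/C++ code that releases the GIL.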

I have also tried the suggestions in "Why does multiprocessing use only a single core after I import numpy?", but unfortunately they did not solve the problem.