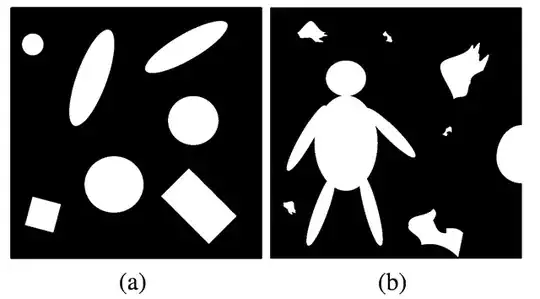

I have a numpy array with an image, and a binary segmentation mask containing separate binary "blobs". Something like the binary mask in:

I want to compute image statistics separately over the pixels covered by each binary blob. The resulting values are stored in a new NumPy array named cnr_map.

My current implementation uses a for loop. However, it becomes very slow as the number of binary blobs increases, and I'm wondering whether it is possible to parallelize it.

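```python
import numpy as np
from scipy.ndimage import label

# Label connected components: 0 is background, blobs are 1..num_features.
labeled_array, num_features = label(mask)

# Float copy of the mask so the CNR values are not truncated to the mask's dtype.
cnr_map = mask.astype(float)

for k in range(1, num_features + 1):
    foreground_mask = labeled_array == k
    background_mask = ~foreground_mask  # every pixel outside blob k

    a = np.mean(image[foreground_mask])
    b = np.mean(image[background_mask])
    c = np.std(image[background_mask])

    # Contrast-to-noise ratio; the epsilon guards against division by zero.
    cnr = np.abs(a - b) / (c + 1e-12)
    cnr_map[foreground_mask] = cnr
```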

How can I parallelize the work so that the for loop runs faster?

I have seen this question, but my case is a bit different: I want to return a single NumPy array that accumulates the modifications made by the multiple processes (i.e. cnr_map), and I don't understand how to do that.
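
One possible direction that avoids multiprocessing altogether, given as a minimal sketch rather than a confirmed solution: since every statistic in the loop is a mean or a standard deviation, they can all be derived from per-label pixel counts, sums, and sums of squares, which `np.bincount` computes in a single pass. The function name `cnr_map_vectorized` is hypothetical, and the sketch assumes the "background" of blob k is every pixel outside blob k (as in the loop) and that label 0 is not a blob.

```python
import numpy as np
from scipy.ndimage import label


def cnr_map_vectorized(image, mask):
    """Per-blob CNR map without a Python loop (hypothetical helper)."""
    image = np.asarray(image, dtype=np.float64)
    labeled_array, num_features = label(mask)
    flat_labels = labeled_array.ravel()
    flat_image = image.ravel()
    n_bins = num_features + 1  # index 0 holds the unlabeled pixels

    # Per-label pixel count, sum, and sum of squares in one pass each.
    counts = np.bincount(flat_labels, minlength=n_bins)
    sums = np.bincount(flat_labels, weights=flat_image, minlength=n_bins)
    sq_sums = np.bincount(flat_labels, weights=flat_image**2, minlength=n_bins)

    # Mean over the pixels of each label.
    fg_mean = sums / np.maximum(counts, 1)

    # Statistics over everything outside each label: totals minus the label's share.
    bg_counts = np.maximum(flat_image.size - counts, 1)
    bg_mean = (sums.sum() - sums) / bg_counts
    bg_var = (sq_sums.sum() - sq_sums) / bg_counts - bg_mean**2
    bg_std = np.sqrt(np.maximum(bg_var, 0.0))  # clip tiny negative round-off

    cnr = np.abs(fg_mean - bg_mean) / (bg_std + 1e-12)
    cnr[0] = 0.0  # assumption: unlabeled pixels get 0 in the output map

    # Look up each pixel's CNR through its label.
    return cnr[labeled_array]
```

The variance here uses the E[x²] − E[x]² identity, which can lose precision for images with a large mean relative to their spread, so the result is worth checking against the loop on a small example before relying on it.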