I'm trying to create a camera remote control app with an iPhone as the camera and an iPad as the remote control. I want to capture the iPhone's camera preview with `AVCaptureVideoDataOutput` and stream it through an `OutputStream` using the MultipeerConnectivity framework. The iPad then receives the data and displays it in a `UIView` by setting the view's layer contents. Here is what I've done so far:

(iPhone/camera preview stream) My `captureOutput(_:didOutput:from:)` implementation from `AVCaptureVideoDataOutputSampleBufferDelegate`:

```swift
func captureOutput(_ output: AVCaptureOutput,
                   didOutput sampleBuffer: CMSampleBuffer,
                   from connection: AVCaptureConnection) {
    DispatchQueue.global(qos: .utility).async { [unowned self] in
        let imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer)

        if let imageBuffer {
            CVPixelBufferLockBaseAddress(imageBuffer, [])

            let baseAddress = CVPixelBufferGetBaseAddress(imageBuffer)
            let bytesPerRow: size_t? = CVPixelBufferGetBytesPerRow(imageBuffer)
            let width: size_t? = CVPixelBufferGetWidth(imageBuffer)
            let height: size_t? = CVPixelBufferGetHeight(imageBuffer)
            let colorSpace = CGColorSpaceCreateDeviceRGB()

            let newContext = CGContext(data: baseAddress,
                                       width: width ?? 0,
                                       height: height ?? 0,
                                       bitsPerComponent: 8,
                                       bytesPerRow: bytesPerRow ?? 0,
                                       space: colorSpace,
                                       bitmapInfo: CGBitmapInfo.byteOrder32Little.rawValue | CGImageAlphaInfo.premultipliedFirst.rawValue)

            if let newImage = newContext?.makeImage() {
                let image = UIImage(cgImage: newImage,
                                    scale: 0.2,
                                    orientation: .up)

                CVPixelBufferUnlockBaseAddress(imageBuffer, [])

                if let data = image.jpegData(compressionQuality: 0.2) {
                    let bytesWritten = data.withUnsafeBytes({
                        viewFinderStream?
                            .write($0.bindMemory(to: UInt8.self).baseAddress!, maxLength: data.count)
                    })
                }
            }
        }
    }
}
```
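An `OutputStream` is a plain byte stream: it does not preserve message boundaries, and `write(_:maxLength:)` may accept fewer bytes than requested (the `bytesWritten` value above is never checked). A minimal sketch of length-prefix framing, one common way to delimit messages on a byte stream, assuming a 4-byte big-endian length header in front of each JPEG; `makeFrame(for:)` and `writeAll(_:to:)` are hypothetical helpers, not Apple APIs:

```swift
import Foundation

/// Hypothetical helper: prepends a 4-byte big-endian length header to `payload`
/// so the receiver can tell where one JPEG ends and the next begins.
func makeFrame(for payload: Data) -> Data {
    var length = UInt32(payload.count).bigEndian
    var frame = withUnsafeBytes(of: &length) { Data($0) }
    frame.append(payload)
    return frame
}

/// Hypothetical helper: keeps calling `write(_:maxLength:)` until every byte of
/// `data` has been accepted. Returns false if the stream reports an error (-1)
/// or stops accepting bytes (0).
func writeAll(_ data: Data, to stream: OutputStream) -> Bool {
    return data.withUnsafeBytes { (raw: UnsafeRawBufferPointer) -> Bool in
        guard let base = raw.bindMemory(to: UInt8.self).baseAddress else {
            return raw.isEmpty // an empty payload needs no writes
        }
        var offset = 0
        while offset < raw.count {
            let written = stream.write(base + offset, maxLength: raw.count - offset)
            if written <= 0 { return false }
            offset += written
        }
        return true
    }
}
```

In the capture callback this would become something like `writeAll(makeFrame(for: data), to: stream)`, ideally called from one serial queue so that bytes from two frames are never interleaved on the stream.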

(iPad/camera remote controller) Receiving the stream and showing it on the view, using `stream(_:handle:)` from the `StreamDelegate` protocol:

```swift
func stream(_ aStream: Stream, handle eventCode: Stream.Event) {
    let inputStream = aStream as! InputStream

    switch eventCode {
    case .hasBytesAvailable:
        DispatchQueue.global(qos: .userInteractive).async { [unowned self] in
            var buffer = [UInt8](repeating: 0, count: 1024)
            let numberBytes = inputStream.read(&buffer, maxLength: 1024)
            let data = Data(referencing: NSData(bytes: &buffer, length: numberBytes))

            if let imageData = UIImage(data: data) {
                DispatchQueue.main.async {
                    previewCameraView.layer.contents = imageData.cgImage
                }
            }
        }
    case .hasSpaceAvailable:
        break
    default:
        break
    }
}
```
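A single `read(_:maxLength:)` call returns at most `maxLength` bytes and may return fewer, so one `.hasBytesAvailable` event does not correspond to one image. In addition, `DispatchQueue.global` is a concurrent queue, so reads dispatched to it may run concurrently and finish in any order. A minimal sketch that drains the stream synchronously, assuming it is called directly from `stream(_:handle:)` and that the caller keeps the returned bytes in its own buffer; `readAvailable(from:chunkSize:)` is a hypothetical helper:

```swift
import Foundation

/// Hypothetical helper: reads everything the stream currently has available,
/// chunk by chunk, and returns it as one `Data` value.
func readAvailable(from stream: InputStream, chunkSize: Int = 4096) -> Data {
    var result = Data()
    var buffer = [UInt8](repeating: 0, count: chunkSize)
    while stream.hasBytesAvailable {
        let count = stream.read(&buffer, maxLength: chunkSize)
        if count <= 0 { break } // 0 = end of stream, -1 = error
        result.append(contentsOf: buffer[..<count])
    }
    return result
}
```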

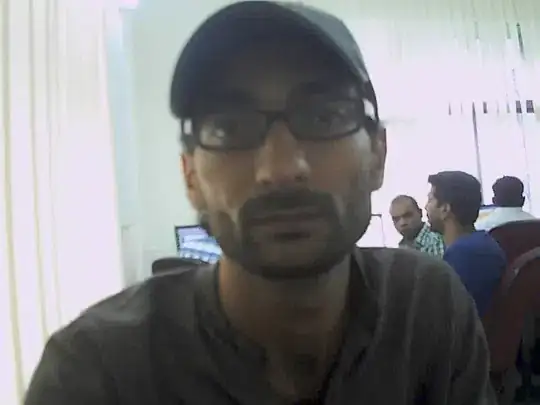

Unfortunately, the iPad did receive the stream but it shows the video data just a tiny bit of it like this (notice the view on the right, there are few pixels that shows the camera preview data on the top left of the view. The rest is just a gray color):

EDIT: I also get this warning in the console:

```
2023-02-02 20:24:44.487399+0700 MultipeerVideo-Assignment[31170:1065836] Warning! [0x15c023800] Decoding incomplete with error code -1. This is expected if the image has not been fully downloaded.
```
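This warning suggests that `UIImage(data:)` is being handed partial JPEGs: the receiver reads at most 1024 bytes at a time, while a single camera frame is usually much larger, so each chunk holds only a fragment of an image. Only the first fragment contains the JPEG header, which may explain why just a few top-left pixels appear. A minimal sketch of a reassembly buffer, assuming the sender prefixes every JPEG with a 4-byte big-endian byte count (a framing convention introduced here, not something MultipeerConnectivity provides); `FrameAccumulator` is a hypothetical name:

```swift
import Foundation

/// Hypothetical reassembly buffer for length-prefixed frames
/// (4-byte big-endian length followed by that many payload bytes).
struct FrameAccumulator {
    private var buffer = Data()

    /// Appends newly read bytes and returns every frame that is now complete.
    /// Bytes belonging to an unfinished frame stay buffered for the next call.
    mutating func append(_ chunk: Data) -> [Data] {
        buffer.append(chunk)
        var frames: [Data] = []
        while buffer.count >= 4 {
            // Decode the big-endian length header byte by byte.
            let length = buffer.prefix(4).reduce(0) { (acc: Int, byte: UInt8) in
                (acc << 8) | Int(byte)
            }
            guard buffer.count >= 4 + length else { break } // payload not complete yet
            let start = buffer.startIndex + 4
            frames.append(Data(buffer[start..<start + length]))
            buffer.removeSubrange(buffer.startIndex..<start + length)
        }
        return frames
    }
}
```

Each returned `Data` would then go to `UIImage(data:)` on its own, so the decoder only ever sees whole JPEGs.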

I'm also not sure whether this is normal, but the iPhone uses almost 100% of its CPU.
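On the CPU usage: `AVCaptureVideoDataOutput` discards late frames (`alwaysDiscardsLateVideoFrames`, which defaults to `true`) only while the delegate callback is still busy, but this delegate returns immediately after dispatching to a global queue, so every frame at the camera's full rate may end up rendered through a `CGContext` and JPEG-encoded. Note also that `scale: 0.2` in `UIImage(cgImage:scale:orientation:)` changes only the image's point size, not its pixel dimensions. One simple mitigation, sketched here as an assumption rather than a definitive fix, is to process only a few frames per second; `FrameThrottle` is a hypothetical helper:

```swift
import Foundation

/// Hypothetical helper that lets through at most one frame per `minInterval` seconds.
struct FrameThrottle {
    let minInterval: Double
    private var lastAccepted: Double?

    init(maxFramesPerSecond: Double) {
        precondition(maxFramesPerSecond > 0, "frame rate must be positive")
        minInterval = 1.0 / maxFramesPerSecond
    }

    /// Returns true if a frame with timestamp `time` (in seconds) should be
    /// encoded and sent, and records it as the most recently accepted frame.
    mutating func shouldProcess(at time: Double) -> Bool {
        if let last = lastAccepted, time - last < minInterval {
            return false
        }
        lastAccepted = time
        return true
    }
}
```

Inside `captureOutput(_:didOutput:from:)`, the timestamp could come from `CMTimeGetSeconds(CMSampleBufferGetPresentationTimeStamp(sampleBuffer))`, with the check done before any image conversion so that skipped frames cost almost nothing.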

My question is: what am I doing wrong that keeps the video stream from showing completely on the iPad? And is there any way to make the stream more efficient so the iPhone's CPU doesn't work so hard? I'm still new to iOS programming, so I'm not sure how to solve this. If you need more code for clarity, please let me know in the comments.