Consider these models:

```csharp
using System.Collections.Generic;

public class BenchMarks
{
    public List<Parent> Parents { get; set; }
}

public class Parent
{
    public List<Child> Children { get; set; }
}

public class Child
{
    public string Name { get; set; }
    public int Value { get; set; }
}
```

The class `BenchMarks` also has these methods:

```csharp
// Members of BenchMarks; the two LINQ-based versions need "using System.Linq;".

// Nested foreach: iterate every parent, then every child of that parent.
public void GetWithTwoForeach()
{
    var dict = new Dictionary<string, int>();
    foreach (var parent in Parents)
    {
        foreach (var child in parent.Children)
        {
            if (dict.ContainsKey(child.Name))
                dict[child.Name] += child.Value;
            else
                dict.Add(child.Name, child.Value);
        }
    }
}

// Single foreach over the children flattened with SelectMany.
public void GetWithOneForeach()
{
    var dict = new Dictionary<string, int>();
    foreach (var child in Parents.SelectMany(p => p.Children))
    {
        if (dict.ContainsKey(child.Name))
            dict[child.Name] += child.Value;
        else
            dict.Add(child.Name, child.Value);
    }
}

// Flatten, group by name, then sum each group's values.
public void GetWithGroupSum()
{
    var childGroups = Parents.SelectMany(p => p.Children).GroupBy(c => c.Name);
    var dict = childGroups.ToDictionary(cg => cg.Key, cg => cg.Sum(child => child.Value));
}
```
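To make sure the three approaches do the same work, here is a minimal standalone sketch that adapts them into static methods returning the dictionary and compares their output. The `Totals` class, its method names and the sample data are hypothetical, not part of the original benchmark, and the program assumes C# 9 or later (top-level statements).

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sample data: "Anne" and "Jeff" appear under more than one parent.
var parents = new List<Parent>
{
    new Parent { Children = new List<Child> { new Child { Name = "Anne", Value = 1 }, new Child { Name = "Jeff", Value = 2 } } },
    new Parent { Children = new List<Child> { new Child { Name = "Anne", Value = 3 } } },
    new Parent { Children = new List<Child> { new Child { Name = "Mimi", Value = 4 }, new Child { Name = "Jeff", Value = 5 } } },
};

var twoForeach = Totals.WithTwoForeach(parents);
var oneForeach = Totals.WithOneForeach(parents);
var groupSum = Totals.WithGroupSum(parents);

// All three should produce the same name -> total mapping.
bool same = twoForeach.Count == oneForeach.Count
    && twoForeach.Count == groupSum.Count
    && twoForeach.All(e => oneForeach.TryGetValue(e.Key, out var v1) && v1 == e.Value
                        && groupSum.TryGetValue(e.Key, out var v2) && v2 == e.Value);
Console.WriteLine(same); // True

foreach (var entry in twoForeach.OrderBy(e => e.Key))
    Console.WriteLine($"{entry.Key}: {entry.Value}"); // Anne: 4, Jeff: 7, Mimi: 4

public class Parent { public List<Child> Children { get; set; } = new List<Child>(); }
public class Child { public string Name { get; set; } = ""; public int Value { get; set; } }

// Hypothetical helper class: the question's three methods, returning their result.
public static class Totals
{
    public static Dictionary<string, int> WithTwoForeach(List<Parent> parents)
    {
        var dict = new Dictionary<string, int>();
        foreach (var parent in parents)
            foreach (var child in parent.Children)
            {
                if (dict.ContainsKey(child.Name)) dict[child.Name] += child.Value;
                else dict.Add(child.Name, child.Value);
            }
        return dict;
    }

    public static Dictionary<string, int> WithOneForeach(List<Parent> parents)
    {
        var dict = new Dictionary<string, int>();
        foreach (var child in parents.SelectMany(p => p.Children))
        {
            if (dict.ContainsKey(child.Name)) dict[child.Name] += child.Value;
            else dict.Add(child.Name, child.Value);
        }
        return dict;
    }

    public static Dictionary<string, int> WithGroupSum(List<Parent> parents) =>
        parents.SelectMany(p => p.Children)
               .GroupBy(c => c.Name)
               .ToDictionary(cg => cg.Key, cg => cg.Sum(child => child.Value));
}
```

Returning the dictionary also keeps each method's result observable, which is a common precaution when benchmarking.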

So why does the nested foreach loop outperform the other two methods by more than 100%?

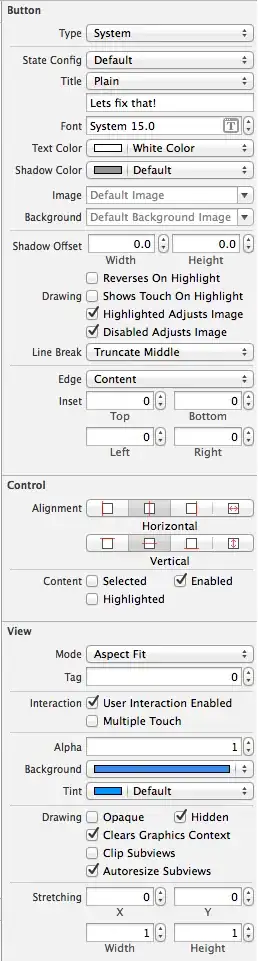

Benchmark fiddle

Edit: My benchmark was indeed affected by the ordered nature of my test data.

When I changed the setup to the following (I was not able to get this to work in the .NET Fiddle):

```csharp
public void Setup()
{
    Parents = new List<Parent>();
    var names = new List<string> { "Tigo", "Edo", "Kumal", "Manu", "Guido", "Anne", "Steff", "Mimi", "Suzan", "Jeff" };
    var random = new Random(); // System.Random, unseeded: a different name order on every run

    // 200 parents, each with a single child whose name is picked at random.
    for (var i = 0; i < 200; i++)
    {
        int index = random.Next(names.Count);
        Parents.Add(new Parent
        {
            Children = new List<Child>
            {
                new Child
                {
                    Name = names[index],
                    Value = i
                }
            }
        });
    }
}
```

The results were a bit less dramatic, but they still led to the same conclusion: as mentioned in the comments, LINQ adds overhead.
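As a rough illustration of where that overhead comes from, here is a simplified stand-in for `SelectMany`. `SimpleSelectMany` is hypothetical and is not the actual `System.Linq` implementation, which uses its own iterator classes, but it follows the same pattern: each inner sequence comes from a delegate call and is enumerated through the `IEnumerable<T>`/`IEnumerator<T>` interfaces, so `List<T>`'s struct enumerator gets boxed, and every element costs interface calls to `MoveNext` and `Current`. A nested `foreach` directly over `List<T>` avoids all of that. `GroupBy` adds further allocations on top, since it builds the groups before `ToDictionary` and `Sum` walk them again. The sketch assumes C# 9 or later.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical input: two inner lists, standing in for each parent's Children.
var outer = new List<List<int>>
{
    new List<int> { 1, 2 },
    new List<int> { 3 },
};

var flat = new List<int>(SimpleSelectMany(outer, inner => inner));
Console.WriteLine(string.Join(",", flat)); // 1,2,3

// Simplified, hypothetical stand-in for Enumerable.SelectMany.
// The compiler turns this iterator into a heap-allocated state machine.
static IEnumerable<TResult> SimpleSelectMany<TSource, TResult>(
    IEnumerable<TSource> source,
    Func<TSource, IEnumerable<TResult>> selector)
{
    // The outer loop goes through IEnumerable<TSource>, so List<T>'s struct
    // enumerator is boxed and MoveNext/Current are interface calls.
    foreach (var item in source)
    {
        // One delegate invocation per outer item; the inner enumerator is
        // also obtained through the interface, so it is boxed as well.
        foreach (var element in selector(item))
        {
            // The consumer then pays another MoveNext/Current on this iterator.
            yield return element;
        }
    }
}
```

With the randomized setup (200 parents holding one child each), that means roughly 200 delegate calls and boxed inner enumerators per run, against dictionary work on only 10 distinct keys, so the per-element overhead is a sizeable share of the total.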