Theoretically, p-values are uniformly distributed under the null hypothesis.

Therefore, I would expect p-values from G-test or Chi-square test to test equal proportions to provide uniformly distributed p-values when I apply it to some random coin flip simulations using Python's random.randint(0,1), which should be an unbiased random coin, i.e., a Bernoulli(0.5).

Likewise, in case n*p is sufficiently large, the assumptions behind a t-test become reasonable, and we would expect a t-test to give uniformly distributed p-values too.

However, that is not what I empirically see.

I plot a histogram of p-values from repeated experiments with sample size 20k, using the following snippet:

from scipy import stats

from matplotlib import pyplot as plt

ps = []

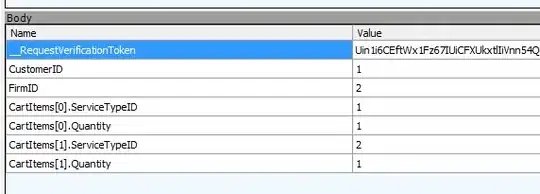

for i in range(5000):

heads = [random.randint(0,1) for _ in range(20000)]

tails = [1-x for x in heads]

p = stats.ttest_ind(heads, tails).pvalue

ps.append(p)

plt.hist(ps, 100)

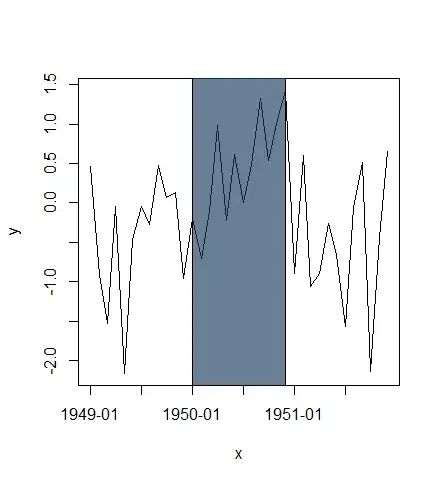

This results in the following distribution of p-values, which seems to give p-values close to 0 much more often than expected. Note that this is not due to the approximations of the t-test, as I find similar distributions of p-values when I plug in a Chi-square or G-test.

Am I running into a situation here where Python's pseudorandom number generator (which are based on Mersenne Twister algorithm) simply do not have sufficiently good statistical properties and are simply not random enough? Or is there something else that I am missing here?