I created my own benchmark tool to find out about possible limitations.

The link to the GitHub repo is here.

TL;DR:

As suspected by @OneCricketeer, the scalability factor is roughly the number of schemas × the average schema size. I created a tool to see how the registry's memory and CPU usage scale when registering many different Avro schemas of the same size (using a custom field within the schema to differentiate them).

I ran the tool for ~48 schemas; this used ~900 MB of memory with low CPU usage.
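To illustrate the idea of same-size schemas that differ only in a custom field, here is a minimal sketch. It is not the code from my tool: the property name `benchmark_id`, the record and subject names, and the field count are all hypothetical. It only builds the request for the registry's `POST /subjects/{subject}/versions` endpoint and does not send anything.

```python
import json
from typing import Tuple


def make_schema(index: int, n_fields: int = 20) -> str:
    """Return an Avro record schema as a JSON string; only `benchmark_id` varies."""
    schema = {
        "type": "record",
        "name": "BenchmarkRecord",          # hypothetical record name
        "namespace": "example.benchmark",   # hypothetical namespace
        # Hypothetical custom property; zero-padded so every schema has equal length.
        "benchmark_id": f"{index:08d}",
        "fields": [
            {"name": f"field_{i:03d}", "type": "string"} for i in range(n_fields)
        ],
    }
    return json.dumps(schema, separators=(",", ":"))


def registration_request(base_url: str, index: int) -> Tuple[str, bytes]:
    """Build URL and JSON body for POST /subjects/{subject}/versions (not sent here)."""
    subject = f"benchmark-{index:08d}-value"  # hypothetical subject naming scheme
    url = f"{base_url}/subjects/{subject}/versions"
    body = json.dumps({"schema": make_schema(index)}).encode("utf-8")
    return url, body


schemas = [make_schema(i) for i in range(1000)]
raw_bytes = len(schemas) * len(schemas[0])
print(f"{len(schemas)} schemas x {len(schemas[0])} bytes = {raw_bytes} bytes of raw schema text")
```

The raw text size is only a lower bound: the parsed in-memory representation of each schema is considerably larger than its JSON string. When actually sending the body, the registry expects the `Content-Type: application/vnd.schemaregistry.v1+json` header.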

Findings:

- The ramp-up of memory usage is much steeper in the beginning. After the initial ramp-up, memory usage increases step-wise whenever new memory is allocated to hold more schemas.

- Most of the memory is used for storing the schemas in the ConcurrentHashMap (as expected).

- CPU usage does not change significantly with many schemas, and neither does the time to retrieve a schema.

- There is a cache for holding RawSchema -> ParsedSchema mappings (var SCHEMA_CACHE_SIZE_CONFIG, default 1000), but at least in my tests I could not see a negative impact from a cache miss: retrieving a schema took ~1-2 ms for both hits and misses.
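The retrieval times above can be measured with something like the following sketch. It is not my benchmark tool. The helper names are hypothetical, and `fetch_schema_by_id` assumes a registry reachable at the given base URL (for example `http://localhost:8081`) and uses the `GET /schemas/ids/{id}` endpoint.

```python
import statistics
import time
import urllib.request
from typing import Callable, Dict, List


def measure_latency_ms(fetch: Callable[[], object], repeats: int = 50) -> List[float]:
    """Call `fetch` repeatedly and return each call's wall-clock duration in ms."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fetch()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def fetch_schema_by_id(base_url: str, schema_id: int) -> bytes:
    """GET /schemas/ids/{id}; assumes a Schema Registry is reachable at base_url."""
    with urllib.request.urlopen(f"{base_url}/schemas/ids/{schema_id}") as response:
        return response.read()


def summarize(samples: List[float]) -> Dict[str, float]:
    """Reduce raw samples to the two numbers that are easiest to compare."""
    return {"median_ms": statistics.median(samples), "max_ms": max(samples)}
```

A run against a live registry would look like `summarize(measure_latency_ms(lambda: fetch_schema_by_id("http://localhost:8081", 1)))`. To compare hits and misses, one option is to time IDs that were just fetched against IDs that have not been requested for a while. Which requests actually hit the cache depends on registry internals, so treat that split as an approximation.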

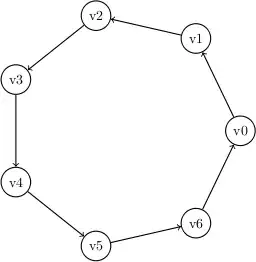

Memory usage (x scale = 100 schemas, y scale = 1 MB):

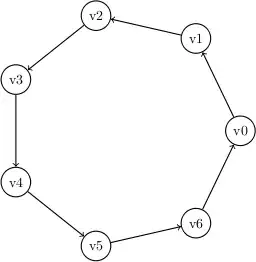

CPU usage (x scale = 100 schemas, y scale = usage in %):

Top 10 objects in Java heap:

| num | #instances | #bytes | class name (module) |
|----:|-----------:|-------:|---------------------|
| 1 | 718318 | 49519912 | `[B` (java.base@11.0.17) |
| 2 | 616621 | 44396712 | `org.apache.avro.JsonProperties$2` |
| 3 | 666225 | 15989400 | `java.lang.String` (java.base@11.0.17) |
| 4 | 660805 | 15859320 | `java.util.concurrent.ConcurrentLinkedQueue$Node` (java.base@11.0.17) |
| 5 | 616778 | 14802672 | `java.util.concurrent.ConcurrentLinkedQueue` (java.base@11.0.17) |
| 6 | 264000 | 12672000 | `org.apache.avro.Schema$Field` |
| 7 | 6680 | 12568952 | `[I` (java.base@11.0.17) |
| 8 | 368958 | 11806656 | `java.util.HashMap$Node` (java.base@11.0.17) |
| 9 | 88345 | 7737648 | `[Ljava.util.concurrent.ConcurrentHashMap$Node;` (java.base@11.0.17) |
| 10 | 197697 | 6326304 | `java.util.concurrent.ConcurrentHashMap$Node` (java.base@11.0.17) |
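For anyone who wants to work with these numbers, here is a small sketch that parses a class histogram in this format and prints each class's byte total, average bytes per instance, and share of the listed total. The function name is hypothetical, and the embedded text is simply the ten rows from above.

```python
HISTOGRAM = """\
1: 718318 49519912 [B (java.base@11.0.17)
2: 616621 44396712 org.apache.avro.JsonProperties$2
3: 666225 15989400 java.lang.String (java.base@11.0.17)
4: 660805 15859320 java.util.concurrent.ConcurrentLinkedQueue$Node (java.base@11.0.17)
5: 616778 14802672 java.util.concurrent.ConcurrentLinkedQueue (java.base@11.0.17)
6: 264000 12672000 org.apache.avro.Schema$Field
7: 6680 12568952 [I (java.base@11.0.17)
8: 368958 11806656 java.util.HashMap$Node (java.base@11.0.17)
9: 88345 7737648 [Ljava.util.concurrent.ConcurrentHashMap$Node; (java.base@11.0.17)
10: 197697 6326304 java.util.concurrent.ConcurrentHashMap$Node (java.base@11.0.17)
"""


def parse_histogram(text):
    """Parse 'rank: instances bytes class' rows; skip headers and separator lines."""
    rows = []
    for line in text.splitlines():
        parts = line.split(None, 3)
        if len(parts) < 4 or not parts[0].endswith(":"):
            continue
        if not (parts[1].isdigit() and parts[2].isdigit()):
            continue
        rows.append({
            "class": parts[3],
            "instances": int(parts[1]),
            "bytes": int(parts[2]),
        })
    return rows


rows = parse_histogram(HISTOGRAM)
total_bytes = sum(row["bytes"] for row in rows)

for row in rows:
    per_instance = row["bytes"] / row["instances"]
    share = 100.0 * row["bytes"] / total_bytes
    print(f'{row["bytes"]:>10} B  {per_instance:>8.1f} B/inst  {share:5.1f} %  {row["class"]}')
print(f"{total_bytes:>10} B  total of listed classes")
```

The ten listed classes add up to 191,679,576 bytes. The shares are relative to that sum only, not to the whole heap, since the histogram is cut off after the top 10.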