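```vba
Function ReduceToRREF(matrixRange As Range) As Variant
    Dim matrix As Variant
    Dim rowCount As Long
    Dim colCount As Long
    Dim lead As Long
    Dim r As Long
    Dim c As Long
    Dim i As Long
    Dim multiplier As Double

    ' Copy the cell values into a 1-based 2-D Variant array
    matrix = matrixRange.Value
    rowCount = UBound(matrix, 1)
    colCount = UBound(matrix, 2)
    lead = 1

    For r = 1 To rowCount
        If colCount < lead Then Exit For

        ' Look for a row at or below r whose entry in the lead column is
        ' nonzero (exact comparison with 0); if the whole column is zero,
        ' move on to the next column
        i = r
        While matrix(i, lead) = 0
            i = i + 1
            If rowCount < i Then
                i = r
                lead = lead + 1
                If colCount < lead Then Exit For
            End If
        Wend

        ' Add the pivot row to row r (rather than swapping the two rows)
        ' so that row r gets a nonzero entry in the lead column
        If i <> r Then
            For c = lead To colCount
                matrix(r, c) = matrix(r, c) + matrix(i, c)
            Next c
        End If

        ' Divide row r by its pivot so the pivot becomes 1
        multiplier = matrix(r, lead)
        For c = lead To colCount
            matrix(r, c) = matrix(r, c) / multiplier
        Next c

        ' Subtract multiples of row r to clear the lead column in every other row
        For i = 1 To rowCount
            If i <> r Then
                multiplier = matrix(i, lead)
                For c = lead To colCount
                    matrix(i, c) = matrix(i, c) - multiplier * matrix(r, c)
                Next c
            End If
        Next i

        lead = lead + 1
    Next r

    ReduceToRREF = matrix
End Function
```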

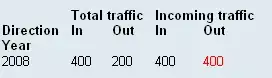

I thought this was a great solution, and it does seem to work properly in most cases. However, I've run into an example where it fails:

This:

Any ideas on what might be going wrong?

I also tried taking the RREF of just the first three rows of the matrix, and that works as expected. What's going on?
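The VBA function works in `Double` and tests `matrix(i, lead) = 0` exactly, so one way to tell whether a wrong result comes from the steps themselves or from floating-point round-off is to repeat the same steps in exact arithmetic and compare. Below is a rough Python transcription, assuming Python's standard `fractions` module. `reduce_to_rref` is a hypothetical name chosen for this sketch, and it uses 0-based indices instead of the worksheet's 1-based ones. Pass integers or decimal strings (for example `"0.1"`) so every entry is exact.

```python
from fractions import Fraction


def reduce_to_rref(rows):
    """Hypothetical exact-arithmetic transcription of ReduceToRREF.

    Follows the same loop structure as the VBA function (0-based indices),
    but every entry is a Fraction, so the test against zero is exact.
    """
    m = [[Fraction(x) for x in row] for row in rows]
    row_count = len(m)
    col_count = len(m[0]) if m else 0
    lead = 0
    for r in range(row_count):
        if lead >= col_count:
            break
        # Find a row at or below r with a nonzero entry in the lead column,
        # moving to the next column whenever the current one is all zero.
        i = r
        while m[i][lead] == 0:
            i += 1
            if i == row_count:
                i = r
                lead += 1
                if lead == col_count:
                    return m
        # Like the VBA code, add the pivot row to row r instead of swapping.
        if i != r:
            for c in range(lead, col_count):
                m[r][c] += m[i][c]
        # Divide row r by its pivot so the pivot becomes 1.
        pivot = m[r][lead]
        for c in range(lead, col_count):
            m[r][c] /= pivot
        # Clear the lead column in every other row.
        for k in range(row_count):
            if k != r:
                factor = m[k][lead]
                for c in range(lead, col_count):
                    m[k][c] -= factor * m[r][c]
        lead += 1
    return m
```

For instance, `reduce_to_rref([[1, 2, 3], [4, 5, 6], [7, 8, 9]])` reduces to `[[1, 0, -1], [0, 1, 2], [0, 0, 0]]`. If the exact result for the failing matrix differs from the worksheet output, the difference points at round-off rather than at the row operations.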