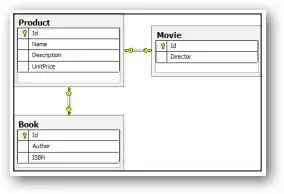

I have a table with the data shown in the image above. I want to store query results in DataFrames whose names are generated dynamically.

For example, in the code below I want to create two DataFrames named dnb_df and es_df, store the result of each read in them, and print the structure of each one.

When I run the code below, I get this error:

SyntaxError: can't assign to operator (TestGlue2.py, line 66)

    import sys
    import boto3
    from awsglue.transforms import *
    from awsglue.utils import getResolvedOptions
    from pyspark.context import SparkContext
    from awsglue.context import GlueContext
    from awsglue.job import Job
    from awsglue.dynamicframe import DynamicFrame
    from pyspark.sql.functions import regexp_replace, col

    args = getResolvedOptions(sys.argv, ['JOB_NAME'])
    sc = SparkContext()
    #sc.setLogLevel('DEBUG')
    glueContext = GlueContext(sc)
    spark = glueContext.spark_session
    #logger = glueContext.get_logger()
    #logger.DEBUG('Hello Glue')
    job = Job(glueContext)
    job.init(args["JOB_NAME"], args)

    client = boto3.client('glue', region_name='XXXXXX')
    response = client.get_connection(Name='XXXXXX')
    connection_properties = response['Connection']['ConnectionProperties']
    URL = connection_properties['JDBC_CONNECTION_URL']
    url_list = URL.split("/")

    host = "{}".format(url_list[-2][:-5])
    new_host=host.split('@',1)[1]
    port = url_list[-2][-4:]
    database = "{}".format(url_list[-1])
    Oracle_Username = "{}".format(connection_properties['USERNAME'])
    Oracle_Password = "{}".format(connection_properties['PASSWORD'])

    #print("Oracle_Username:",Oracle_Username)
    #print("Oracle_Password:",Oracle_Password)
    print("Host:",host)
    print("New Host:",new_host)
    print("Port:",port)
    print("Database:",database)

    Oracle_jdbc_url="jdbc:oracle:thin:@//"+new_host+":"+port+"/"+database
    print("Oracle_jdbc_url:",Oracle_jdbc_url)

    source_df = spark.read.format("jdbc").option("url", Oracle_jdbc_url).option("dbtable", "(select * from schema.table order by VENDOR_EXECUTION_ORDER) ").option("user", Oracle_Username).option("password", Oracle_Password).load()

    vendor_data=source_df.collect()

    for row in vendor_data :
        vendor_query=row.SRC_QUERY
        row.VENDOR_NAME+'_df'= spark.read.format("jdbc").option("url",
            Oracle_jdbc_url).option("dbtable", vendor_query).option("user",
            Oracle_Username).option("password", Oracle_Password).load()
        print(row.VENDOR_NAME+'_df')
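
For context on the error: in Python the left-hand side of `=` has to be an assignable target such as a variable name, and `row.VENDOR_NAME+'_df'` is an expression, which is why the interpreter reports "can't assign to operator". One common way to get the same effect is to keep the frames in a dictionary keyed by the generated name. The following is only a minimal sketch of that idea, not a tested Glue job: `VendorRow`, `load_vendor_frames`, and `fake_read` are hypothetical names introduced for illustration, and the stub reader stands in for the `spark.read.format("jdbc")...load()` call so the example runs without Spark.

```python
from collections import namedtuple

# Hypothetical stand-in for the rows returned by source_df.collect()
VendorRow = namedtuple("VendorRow", ["VENDOR_NAME", "SRC_QUERY"])


def load_vendor_frames(rows, read_query):
    """Return a dict mapping '<vendor>_df' to whatever read_query returns."""
    frames = {}
    for row in rows:
        # The generated name becomes a dictionary key instead of a variable name
        frames[row.VENDOR_NAME + "_df"] = read_query(row.SRC_QUERY)
    return frames


def fake_read(query):
    # Assumption: in the real job this would be the JDBC read from the question
    return "result of " + query


rows = [
    VendorRow("dnb", "(select 1 from dual)"),
    VendorRow("es", "(select 2 from dual)"),
]
frames = load_vendor_frames(rows, fake_read)
print(sorted(frames))
```

In the actual job, with `read_query` replaced by the JDBC read, something like `frames["dnb_df"].printSchema()` would then print the structure of that DataFrame.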