According to `top`, the Java/Tomcat process is using 77.9% of available memory (32 GB physical × 77.9% ≈ 24.9 GB):

| TYPE | SIZE |
|---|---|
| VIRT | 32.5 GB |
| RES | 24.5 GB |
| SHR | 24.9 MB |

The JVM's `-Xmx` option is set to 12 GB for this application, and that limit seems to be respected.

However, our monitoring tools keep raising alarms as memory usage on the box slowly climbs toward the physical limit, at which point we have to restart Tomcat to bring it back down.

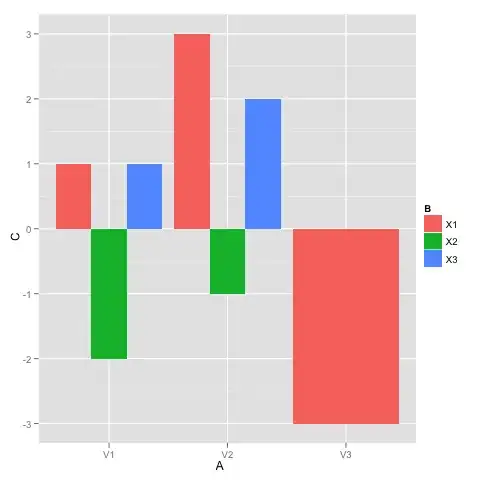

The 12 GB JVM maximum is also reflected in the blue/green Heap diagram provided by JProfiler. The other diagram provided by JProfiler shows 280.7 MB of non-heap memory allocated.

None of the instrumentation in JProfiler seems to help explain the gap between the 12 GB JVM maximum and the 25 GB or more reported by top.
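To put a number on that gap, here is a back-of-the-envelope calculation using only the figures quoted above. It assumes `top` and JProfiler both report binary units (1 GB = 1024 MB), which may not hold exactly, and it treats the full `-Xmx` limit as resident, so the result is a lower bound on the unexplained memory.

```python
# Figures taken from the post; the unit conversion is an assumption.
MB_PER_GB = 1024

res_gb = 24.5                      # RES reported by top
heap_max_gb = 12.0                 # -Xmx limit (worst case: fully resident)
non_heap_gb = 280.7 / MB_PER_GB    # non-heap memory reported by JProfiler

# Whatever is left over is resident memory that neither figure accounts for.
unexplained_gb = res_gb - heap_max_gb - non_heap_gb
print(f"Unexplained resident memory: at least {unexplained_gb:.1f} GB")
```

Since JProfiler shows the heap actually in use is well below 12 GB, the real unexplained amount is probably larger than this.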

JProfiler consistently shows heap usage hovering between 4 GB and 7 GB.

I'm considering profiling with a Linux-native memory profiler (Valgrind) to see if that reveals more information, but are there any other features in JProfiler that could help explain the gap?

I've used JProfiler's Heap Walker, Live Memory, and allocation recorder tools, but the application's memory usage seems healthy and unremarkable.

It's definitely a powerful tool, which makes me wonder what I'm missing here.

Other things I've tried include running `cat /proc/PID/maps | wc -l` for the same process: out of a `max_map_count` of 65,530, the process is using 845 mappings. This suggests that even if memory-mapped files are involved, the number of mappings is well below the maximum. I'm just not sure how to determine how much total memory those mappings take up.
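One rough way to get a total is to add up the address ranges listed in the maps file. The sketch below assumes the usual Linux layout, where each line begins with `start-end` in hexadecimal; the helper name `total_mapped_bytes` and the sample text are made up for illustration. Note that this measures virtual address space (closer to VIRT than to RES), so it shows how much is mapped, not how much is resident.

```python
def total_mapped_bytes(maps_text):
    """Sum the virtual size of every mapping in /proc/<pid>/maps-style text."""
    total = 0
    for line in maps_text.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        # First field is the address range, e.g. "00400000-00401000".
        start, end = fields[0].split("-")
        total += int(end, 16) - int(start, 16)
    return total


# Hypothetical two-line sample in the maps format, not real output.
SAMPLE_MAPS = (
    "00400000-00401000 r-xp 00000000 08:01 123 /usr/bin/java\n"
    "7f0000000000-7f0000200000 rw-p 00000000 00:00 0\n"
)

sample_total = total_mapped_bytes(SAMPLE_MAPS)
print(f"{sample_total} bytes mapped ({sample_total / 2**20:.2f} MiB)")
```

For a live process, the same function can be fed the contents of `/proc/PID/maps`, read with a plain `open(...).read()` by a user that has permission to read that file.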

I also compared `top` with `atop`, and the two are consistent.

I also ran pmap against the process but wasn't sure how to interpret the massive text dump that it produces. For that reason, I'm hoping Valgrind is more human-friendly.
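A possible way to make that kind of dump digestible is to group resident memory by mapping name and look only at the largest entries. The sketch below does not parse `pmap` output itself; it assumes the `/proc/PID/smaps` format instead, where each mapping starts with a header line like those in the maps file and is followed by fields such as `Rss:   1024 kB`. The function names, the `[anon]` label for unnamed mappings, and the sample text are all illustrative.

```python
import re
from collections import defaultdict

# A mapping header starts with a hex address range, e.g. "00400000-00401000 ".
HEADER = re.compile(r"^[0-9a-f]+-[0-9a-f]+\s")


def rss_by_mapping(smaps_text):
    """Return {mapping name: total Rss in kB} for smaps-style text."""
    rss_kb = defaultdict(int)
    name = None
    for line in smaps_text.splitlines():
        if HEADER.match(line):
            # Fields: range, perms, offset, dev, inode, then an optional path.
            fields = line.split(None, 5)
            name = fields[5].strip() if len(fields) > 5 else "[anon]"
        elif line.startswith("Rss:") and name is not None:
            rss_kb[name] += int(line.split()[1])
    return dict(rss_kb)


def top_mappings(smaps_text, n=10):
    """Return the n mappings with the most resident memory, largest first."""
    totals = rss_by_mapping(smaps_text)
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


# Hypothetical sample in the smaps format, not real output.
SAMPLE_SMAPS = """\
00400000-00401000 r-xp 00000000 08:01 123 /usr/bin/java
Size:                  4 kB
Rss:                   4 kB
7f0000000000-7f0000200000 rw-p 00000000 00:00 0
Size:               2048 kB
Rss:                1024 kB
7f0000200000-7f0000300000 rw-p 00000000 00:00 0
Size:               1024 kB
Rss:                 512 kB
"""

for mapping, kb in top_mappings(SAMPLE_SMAPS):
    print(f"{kb:>10} kB  {mapping}")
```

If most of the resident memory ends up under unnamed (anonymous) mappings rather than the Java heap or named files, that would point toward native allocations outside the heap, which is the part JProfiler's heap views would not show.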