I'm running a GKE Autopilot cluster with ASM (Anthos Service Mesh) enabled.

The cluster is for a development environment, so I want to keep its running cost as low as possible.

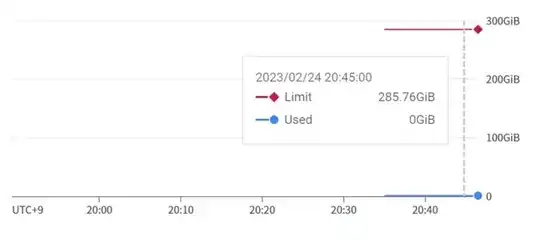

Because istio-injection is enabled, every pod in the cluster has an istio-proxy sidecar. The proxy appears to request nearly 300GiB of disk, even though the pod spec (from `kubectl get pod -o yaml`) requests only about 1GiB of ephemeral storage.

    kubectl get pod <pod-name> -o yaml
    ...
        resources:
          limits:
            cpu: 250m
            ephemeral-storage: 1324Mi
            memory: 256Mi
          requests:
            cpu: 250m
            ephemeral-storage: 1324Mi
            memory: 256Mi
    ...
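
To put the two figures on the same scale, here is a rough sketch of the unit conversion in Python. The helper names (`quantity_to_bytes`, `to_gib`) are made up for illustration and are not part of kubectl or any Kubernetes client library. It only handles the binary suffixes that show up in my pod spec.

```python
# Binary suffixes used by Kubernetes resource quantities.
# Assumption: only these suffixes (or a plain byte count) appear in the spec.
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def quantity_to_bytes(quantity: str) -> int:
    """Convert a quantity such as '1324Mi' into a number of bytes."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    # No known suffix: treat the value as a plain byte count.
    return int(quantity)


def to_gib(quantity: str) -> float:
    """Express a quantity in GiB."""
    return quantity_to_bytes(quantity) / 2**30


if __name__ == "__main__":
    # The ephemeral-storage request shown in the pod spec.
    print(f"{to_gib('1324Mi'):.2f} GiB")  # prints "1.29 GiB"
```

So the pod spec asks for about 1.29GiB, which is roughly 230 times smaller than the disk figure I'm seeing.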

Is the nearly 300GiB disk request actually needed to run ASM, or can I reduce it?

[edited 2023-03-01]

To reproduce this, deploy the YAML below to a GKE cluster with ASM. The default namespace must be labeled for istio-injection.

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: nginx-test
        service: nginx-test
      name: nginx-test
    spec:
      ports:
      - name: http
        port: 80
      selector:
        app: nginx-test
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-test
      name: nginx-test
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-test
      template:
        metadata:
          labels:
            app: nginx-test
          annotations:
            sidecar.istio.io/proxyCPU: 250m
            sidecar.istio.io/proxyMemory: 256Mi
        spec:
          containers:
          - image: nginx
            imagePullPolicy: IfNotPresent
            name: nginx-test
            ports:
            - containerPort: 80