I am trying to learn how to use to Microbenchmark Functions within R.

As an example, I simulate a few random datasets of different sizes:

# load the lubridate package

library(lubridate)

library(microbenchmark)

library(forecast)

my_list = list()

index = c(100, 1000, 10000, 50000, 100000, 250000, 500000, 750000, 1000000)

for (i in 1:length(index))

{

my_data_i = data.frame(dates = sample(seq(as.Date('2010/01/01'), as.Date('2023/01/01'), by="day"), replace = TRUE, index[i]), visits = 1)

my_list[[i]] = my_data_i

}

I then created a function that I want to repeatedly measure on each dataset:

my_function = function(){

# aggregate the data by week

my_data_i_weekly <- aggregate(my_data_i$visits, list(week = week(my_data_i$dates), year = year(my_data_i$dates)), sum)

# convert the data frame to a time series

my_data_i_ts <- ts(my_data_i_weekly$x, start = c(min(my_data_i_weekly$week), min(my_data_i_weekly$year)), frequency = 52)

# fit an ARIMA model using auto.arima

my_data_i_arima <- auto.arima(my_data_i_ts)

}

In the past, I would have manually timed each iteration - for example:

results = list()

for (i in length(index))

{

start.time_i <- Sys.time()

my_data_i = my_list[[i]]

print(replicate(n = 100, my_function())

end.time_i <- Sys.time()

time.taken_i <- end.time_i - start.time_i

results[[i]] = time_taken_i

}

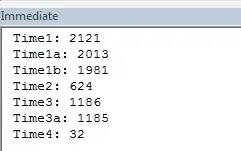

Now, I am trying to learn how to do this using the "microbenchmark" function in R.

my_list2 = list()

for (i in 1:length(index))

{

my_data_i = my_list[[i]]

res_i = microbenchmark(my_function(), times = 100)

print(res_i)

my_list2[[i]] = res_i

}

To recap - I am trying to do the following:

- Run "my_function()" on my_data[1] 100 times and record how long it took

- Run "my_function()" on my_data[[2]] 100 times and record how long it took

- etc.

Am I doing this correctly?

Thanks!

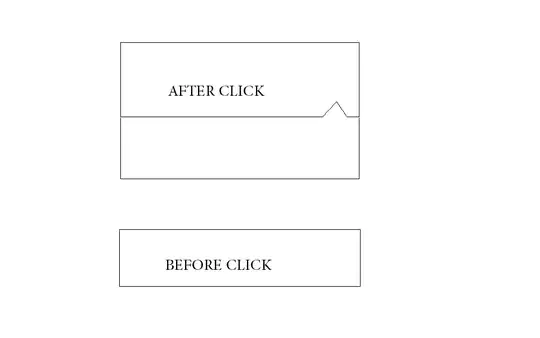

Note: In the future, I would like to make a graph like this (e.g. Red Line - Computer 1, Green Line - Computer 2) :