I have the following code:

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.wait import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC

    # Raw string so the backslashes in the Windows path are taken literally
    driver = webdriver.Chrome(r'C:\chromedriver_win32\chromedriver.exe')
    global_dynamicUrl = "https://draft.shgn.com/nfc/public/dp/788/grid"
    driver.get(global_dynamicUrl)

    # Wait up to 15 seconds for the table rows to become visible
    wait = WebDriverWait(driver, 15)
    table_entries = wait.until(EC.visibility_of_all_elements_located((By.CSS_SELECTOR, "table tr")))
    print(len(table_entries))
    print(table_entries)

    # Save the page source, then read it back for analysis
    html = driver.page_source
    with open('site.txt', 'wt', encoding='utf-8') as f:
        f.write(html)
    with open('site.txt', encoding='utf-8') as f:
        lines = f.read()

    driver.close()

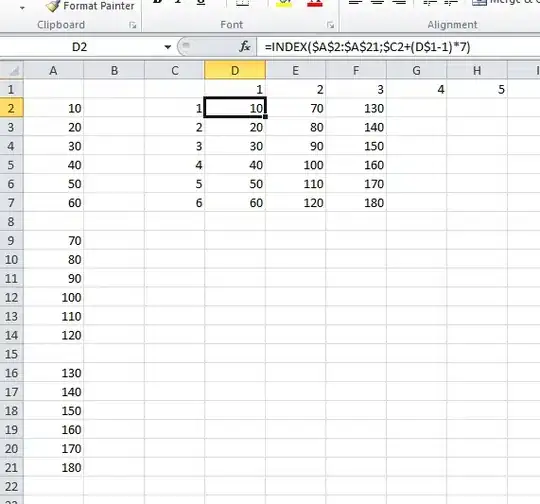

The page source is saved to a text file for further analysis. The problem is that when I inspect or parse the saved file, none of the names shown in the rendered webpage (see the screenshot above) appear in it. What could I be doing wrong?
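
The check on the saved file can be sketched roughly as follows. This is only an illustration: `find_missing_names` is a hypothetical helper, and the names passed to it are placeholders rather than values taken from the page.

```python
from pathlib import Path


def find_missing_names(path, names):
    """Return the names that do not occur anywhere in the saved page source."""
    # Compare case-insensitively so capitalization differences do not hide a match
    text = Path(path).read_text(encoding="utf-8").lower()
    return [name for name in names if name.lower() not in text]
```

Calling `find_missing_names('site.txt', ['Some Player'])` returns the names that are absent from the file. In my case every name I try comes back as missing, even though the table rows themselves are found.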