I'm designing a shortest-path algorithm in Spark using Pregel. I want to partition my graph and find out which partitioning strategy works best. To do this, I have built a for-loop that runs each strategy five times and records the run time before proceeding to the next strategy, so that I get an average run time for each strategy. However, after 5-6 runs, jobs start to back up and form a queue. The loop keeps running, but the run time of each iteration increases until it becomes too long and memory fills up. Why are some jobs backing up and never finishing? And why aren't the jobs killed when a new strategy is started?

Here is the loop in my code:

    var (sc, graph) = loadNetwork()

    val partitionStrategies = List(RandomVertexCut, EdgePartition2D, EdgePartition1D, CanonicalRandomVertexCut)

    for (i <- 0 to 3) {
      println(partitionStrategies(i).toString)
      for (j <- 0 to 4) {
        val (time, sc2) = Pregel(graph.partitionBy(partitionStrategies(i)), 299502025, 299502038, sc)
        sc = sc2
        sc.cancelAllJobs()
        println("Elapsed time = " + time)
      }
    }
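To make the measurement explicit: what I want from the inner loop is the mean wall-clock time over five runs of one strategy. A minimal sketch of that idea in plain Scala is below. The helper name `averageMillis` is hypothetical and not part of my actual code; it only shows the averaging, and it assumes the timed block has fully finished when it returns.

```scala
// Hypothetical helper (not in my actual code): runs `body` `runs` times
// and returns the mean wall-clock time per run in milliseconds.
def averageMillis(runs: Int)(body: => Unit): Double = {
  require(runs > 0, "runs must be positive")
  val timings = (1 to runs).map { _ =>
    val start = System.nanoTime()
    body // the work being timed, e.g. one shortest-path run
    (System.nanoTime() - start) / 1e6 // nanoseconds to milliseconds
  }
  timings.sum / runs
}
```

In my loop, the timed block would be one run of the algorithm on `graph.partitionBy(strategy)`, called as `averageMillis(5) { ... }` once per strategy.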

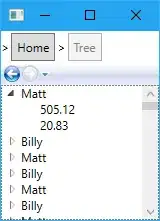

I've attached screenshots of the Spark UI jobs page. As you can see, the job ID timeline is broken: some jobs have completed and some are still running.

I've tried calling SparkContext.cancelAllJobs() before starting a new iteration of the loop, but it seems to do nothing.

I can post the Pregel algorithm as well if anyone is interested.