So I was trying to run multiple fetch requests in parallel like this:

    fetch(`http://localhost/test-paralell-loading.php?r`);
    fetch(`http://localhost/test-paralell-loading.php?r`);
    fetch(`http://localhost/test-paralell-loading.php?r`);

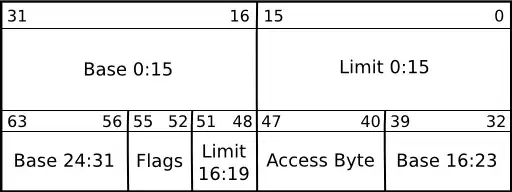

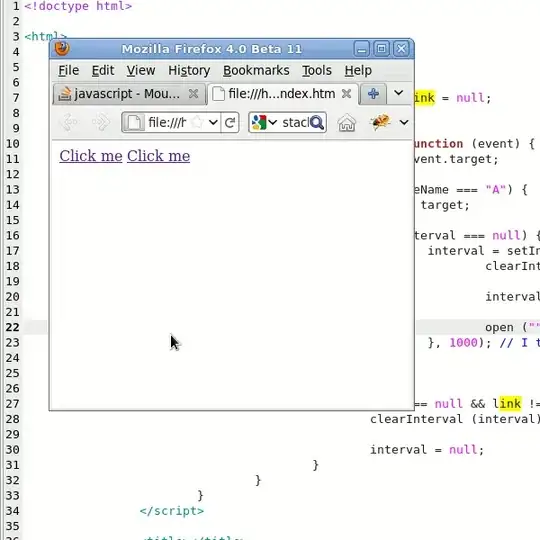

But they are unexpectedly running in sequence:

Is this an HTTP/1.1 limitation? How can I work around it?

Update:

It seems this only happens in Chrome; in Firefox it behaves differently.

Is there anything that can be done to improve performance in Chrome?
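To make the comparison between browsers reproducible, here is a minimal sketch of how the overlap could be measured from script instead of read off the network panel. The helper name `timeParallel` and its `fetchFn` parameter are hypothetical (not part of the original test); it starts every request before awaiting any of them and records when each one starts and finishes, in milliseconds.

```javascript
// Hypothetical helper (name and parameters are assumptions for illustration).
// Starts all requests up front, then waits for every one to finish.
// fetchFn defaults to the global fetch; it can be swapped out for testing.
async function timeParallel(urls, fetchFn = fetch) {
  const t0 = Date.now();
  return Promise.all(
    urls.map(async (url) => {
      const start = Date.now() - t0;   // when this request was issued
      const response = await fetchFn(url);
      await response.text();           // wait for the full body to arrive
      return { url, start, end: Date.now() - t0 };
    })
  );
}

// Usage in the browser console (same URL three times, as in the test above):
//   const url = `http://localhost/test-paralell-loading.php?r`;
//   timeParallel([url, url, url]).then(console.table);
```

If the `end` values are staggered by roughly one response time each, the requests really are being serialized. One thing worth trying, as an assumption to test rather than an established cause, is giving each URL a distinct query string: if the requests then overlap, that would point at the browser serializing identical cacheable requests rather than at HTTP/1.1 itself.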