I have a model and other folders in a GCS bucket that I need to download to my local machine. I also have the service account key file for that bucket.

GCS path: `gs://test_bucket/11100/e324vn241c9e4c4/artifacts`
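In a standard GCS bucket the namespace is flat: the bucket name is only the first component after `gs://`, and everything after it is part of each object's name, so "folders" such as `11100/e324vn241c9e4c4/artifacts` are just name prefixes. A minimal sketch for splitting such a URI into the value for `get_bucket` and the value for `prefix`; the helper name `split_gcs_uri` is hypothetical and not part of the client library:

```python
def split_gcs_uri(uri):
    """Split 'gs://bucket/some/path' into ('bucket', 'some/path/').

    Hypothetical helper: the trailing '/' is added so the path is treated
    as a folder prefix rather than a partial object name.
    """
    if not uri.startswith("gs://"):
        raise ValueError(f"not a gs:// URI: {uri!r}")
    # Everything before the first '/' is the bucket; the rest is the object-name prefix
    bucket, _, prefix = uri[len("gs://"):].partition("/")
    if prefix and not prefix.endswith("/"):
        prefix += "/"
    return bucket, prefix


bucket_name, prefix = split_gcs_uri("gs://test_bucket/11100/e324vn241c9e4c4/artifacts")
print(bucket_name, prefix)
```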

Below is the code I am using to download the folder:

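```python
from google.cloud import storage

# Authenticate with the service account key file
storage_client = storage.Client.from_service_account_json('service_account.json')

bucket_name = 'test_bucket'  # bucket from gs://test_bucket/11100/e324vn241c9e4c4/artifacts
bucket = storage_client.get_bucket(bucket_name)

blobs_all = list(bucket.list_blobs())

# List the objects whose names start with 'artifacts'
blobs = bucket.list_blobs(prefix='artifacts')

for blob in blobs:
    # Skip zero-byte "folder" placeholder objects
    if not blob.name.endswith('/'):
        print(f'Downloading file [{blob.name}]')
        blob.download_to_filename(f'./{blob.name}')
```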
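The `prefix` argument of `list_blobs` is a plain string-prefix filter on the full object name, and here every object name begins with `11100/e324vn241c9e4c4/`, not with `artifacts`. The sketch below mimics that filter locally on a few hypothetical object names (the file names are invented for illustration only) to show why `prefix='artifacts'` would match nothing while the full prefix matches everything under the folder:

```python
# Hypothetical object names, modelled on gs://test_bucket/11100/e324vn241c9e4c4/artifacts
object_names = [
    "11100/e324vn241c9e4c4/artifacts/model/",
    "11100/e324vn241c9e4c4/artifacts/model/model.pkl",
    "11100/e324vn241c9e4c4/artifacts/confusion_matrix/confusion_matrix.png",
    "11100/e324vn241c9e4c4/artifacts/roc_auc_plot/roc_auc_plot.png",
]


def matches_prefix(names, prefix):
    """Mimic the listing filter: keep names that start with `prefix`."""
    return [name for name in names if name.startswith(prefix)]


wrong = matches_prefix(object_names, "artifacts")
right = matches_prefix(object_names, "11100/e324vn241c9e4c4/artifacts/")
print(len(wrong), len(right))
```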

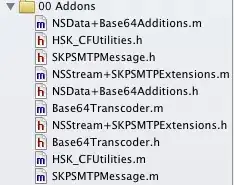

The code above is not working properly. I want to download all the folders saved in the `artifacts` folder of the bucket (`confusion_matrix`, `model` and `roc_auc_plot`). The image above shows these folders.

Can anyone tell me how to download those folders?
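A minimal sketch of one way to download everything under the `artifacts` folder, assuming the bucket and path shown above. It passes the full object-name prefix, skips zero-byte folder placeholder objects, and creates the local directories before each download, since `download_to_filename` writes to the given path but does not create missing parent directories. The function name `download_prefix` and its `dest_dir` argument are hypothetical; the function only relies on `list_blobs(prefix=...)`, `blob.name` and `blob.download_to_filename(...)` from `google-cloud-storage`, so any object with those members can be passed in:

```python
import os


def download_prefix(bucket, prefix, dest_dir):
    """Download every object under `prefix` into `dest_dir`, keeping sub-folders.

    `bucket` is assumed to behave like google.cloud.storage.Bucket.
    Returns the list of local file paths that were written.
    """
    # Add a trailing '/' so 'artifacts' does not also match 'artifacts_old/...'
    if prefix and not prefix.endswith("/"):
        prefix += "/"
    downloaded = []
    for blob in bucket.list_blobs(prefix=prefix):
        if blob.name.endswith("/"):
            continue  # folder placeholder object, nothing to download
        # Path of the object relative to the prefix, e.g. 'model/<file>'
        relative = blob.name[len(prefix):]
        local_path = os.path.join(dest_dir, *relative.split("/"))
        parent = os.path.dirname(local_path)
        if parent:
            # download_to_filename does not create missing directories
            os.makedirs(parent, exist_ok=True)
        blob.download_to_filename(local_path)
        downloaded.append(local_path)
    return downloaded
```

With the client from the question, a call might look like `download_prefix(storage_client.bucket('test_bucket'), '11100/e324vn241c9e4c4/artifacts', './artifacts')`, which would place the files under `./artifacts/confusion_matrix/`, `./artifacts/model/` and `./artifacts/roc_auc_plot/`. Stripping the prefix keeps the local tree short; joining `dest_dir` with the unchanged `blob.name` instead would reproduce the full `11100/e324vn241c9e4c4/artifacts/` structure locally.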