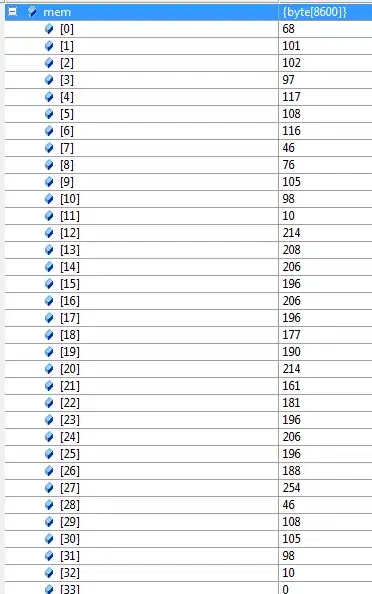

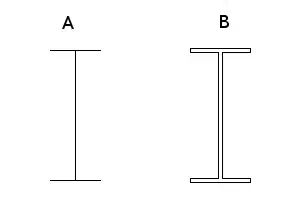

I want to code a GPT-like transformer for a specific text generation task. GPT-like models use only the decoder block (in stacks) [1]. I know how to code all the sub-modules of the decoder block shown in the figure (from the embedding to the softmax layer) in PyTorch. However, I don't know what I should give it as input. The figure labels the decoder input "Output shifted right".

For example, suppose this is one training sequence from my data (where < and > are the SOS and EOS tokens):

- < abcdefgh >

What should I give to my GPT-like model to train it properly?
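For concreteness, "shifted right" is usually read as next-token prediction: the model input is the sequence without its last token, and the target is the same sequence without its first token. Below is a minimal sketch of that reading in plain Python. It assumes character-level tokens, and the names `tokens`, `inputs` and `targets` are only illustrative.

```python
# Hypothetical character-level tokenization of the example sequence.
tokens = ["<"] + list("abcdefgh") + [">"]

# Model input: every token except the last one (the "shifted right" view).
inputs = tokens[:-1]
# Training target: every token except the first one.
targets = tokens[1:]

# At each position the model sees the prefix so far and should predict the next token.
for step, next_token in enumerate(targets):
    print("".join(inputs[: step + 1]), "->", next_token)
```

With this layout, one forward pass over `inputs` gives a prediction at every position, and the loss compares each prediction with the matching entry of `targets`.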

Also, since I am not using an encoder, should I still give input to the multi-head attention block?
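As background for this second question: GPT-style decoder-only stacks typically drop the encoder-decoder attention sub-layer and keep only the masked self-attention, whose queries, keys and values all come from the decoder's own hidden states. The masking is what stops a position from looking at later tokens. Here is a rough sketch of such a causal mask in plain Python; `causal_mask` is a hypothetical helper, not a library function.

```python
def causal_mask(seq_len):
    """Return a seq_len x seq_len matrix where True means 'query may attend to key'."""
    # Row index = query position, column index = key position.
    # A query may only attend to keys at the same or an earlier position.
    return [[key <= query for key in range(seq_len)] for query in range(seq_len)]


mask = causal_mask(4)
for row in mask:
    # "x" marks an allowed position, "." a masked (future) position.
    print(" ".join("x" if allowed else "." for allowed in row))
```

In a real model the masked positions would be set to negative infinity in the attention scores before the softmax, so they receive zero weight.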

Sorry if my questions seem a little dumb; I am very new to transformers.