TL;DR: the performance gap is due to a complex low-level effect related to how modern processors execute instructions. More precisely, the performance is dependent to the number of store-forwarding operations that can be executed in parallel regarding the number of objects in the target list : having more objets results in more independent instructions so more instructions that can be executed in parallel and a smaller critical path of the dependency chain of the instructions to be executed.

To understand what is going on, we first need to analyse what is the assembly code executed by the processor. We also need to understand quite well how modern processors work. Finally, a a deep analysis can help us to untangle what is precisely going on.

Configuration & reproducibility

On my machine, I am able to reproduce the problem on Windows 10 using CPython 3.8.1, a i5-9600KF processor and two RAM DIMM @ 3200 MHz (CL16). Here are the timings:

1.35 µs ± 51.7 ns per loop (mean ± std. dev. of 1000 runs, 2000 loops each)

1.24 µs ± 50.6 ns per loop (mean ± std. dev. of 1000 runs, 2000 loops each)

1.41 µs ± 54 ns per loop (mean ± std. dev. of 1000 runs, 2000 loops each)

2.49 µs ± 89.8 ns per loop (mean ± std. dev. of 1000 runs, 1000 loops each)

High-level profiling & executed assembly code

In all cases, two functions take most of the computing time. The two functions contains a hot loop taking almost all the execution time of the benchmark. Here is the assembly code of the slowest one (~53% of the time):

0x180053f50 Block 10:

0x180053f50 mov rcx, qword ptr [rdx+rax*1]

0x180053f54 lea rax, ptr [rax+0x8]

0x180053f58 inc rbx

0x180053f5b inc qword ptr [rcx]

0x180053f5e mov qword ptr [rax-0x8], rcx

0x180053f62 cmp rbx, rdi

0x180053f65 jl 0x18053f50 <Block 10>

For those who are not very familiar with the assembly language, here is a C-like code doing a similar operation:

uint64_t rdx = ...;

uint64_t rax = ...;

for(uint64_t rbx=0 ; rbx<rdi ; ++rbx)

{

uint64_t* rcx = *(uint64_t*)(rdx+rax); // Read an array item (list reference?)

rax += 8;

(*rcx)++; // Increment the number of reference of the object?

*(uint64_t*)(rax-8) = rcx; // Write the item in another array (list reference?)

}

And here is the one of the faster one (~37% of the time):

0x180058691 Block 11:

0x180058691 mov rbx, qword ptr [rsi+0x18]

0x180058695 mov rbx, qword ptr [rbx+rdi*8]

0x180058699 test rbx, rbx

0x18005869c jz 0x1800586a8 <Block 13>

0x18005869e Block 12:

0x18005869e sub qword ptr [rbx], 0x1

0x1800586a2 jz 0x180058764 <Block 26> (almost no jump: block returning

the result of the function)

0x1800586a8 Block 13:

0x1800586a8 sub rdi, 0x1

0x1800586ac jns 0x180058691 <Block 11>

[...]

Again, here is a similar C-like code to better understand what the hot loop actually does:

for(uint64_t rdi=... ; rdi>0 ; --rdi)

{

uint64_t* tmp = *(uint64_t*)(rsi + 0x18);

uint64_t* rbx = tmp[rdi];

if(rbx != NULL)

{

// rbx is certainly a pointer to an object and

// *rbx is certainly the number of references.

// If there is no reference on the object,

// then we (temporary?) stop the loop

// (very rare case in practice).

if(--(*rbx) == 0)

goto Block26;

}

}

Block26:

// Make few checks and call a function which

// certainly deallocates the object.

// Can jump back in the loop or stop the functions

// regarding a complex set of conditional branches.

Note that most of the remaining time (~10%) is spent in a CancelloEx function certainly (indirectly) called by CPython in order to track interruptions in the middle of the computation (so that a Ctrl+C can quickly stop the computation).

When the number of items in the repeated list is smaller, few instructions are becoming significantly slower than the others and they are clearly becoming the bottleneck.

Overview of how modern processors work

Before trying to analysis what is happening when the processor execute this code, we need to understand few things about how modern mainstream processors works. Modern x86-64 processors are able to execute several instructions at the same time thanks to the instruction-level parallelism (ILP), combined with an out-of-order execution (OoO). Instructions that can be executed in parallel independently of others are very cheap while the one on a dependency chain are much more expensive, especially when they are interacting with the memory hierarchy (due to the relatively high latency of the memory operations).

In fact, this is a bit more complex since instructions are not directly executed : they are split into one or multiple micro-instructions (uops). Some instructions can be macro-fused so they are read by the processor as a unique big instruction. Some micro-instruction can be fused too.

The uops are scheduled on multiple computing units (ports) that can compute them in parallel. Some ports are specialized to execute some specific instructions (e.g. only branches, only memory reads).

Conditional branches having a predictable behaviour can be predicted by the processor so a jump is nearly free in this case, especially when it jumps to a close location (and the target instructions are already in the instruction cache).

Low-level profiling

We can use precise hardware performance counters combined with a sampling-based profiler so to find out which instruction is actually the bottleneck in each case (VTune on Windows and Perf on Linux are able to do that).

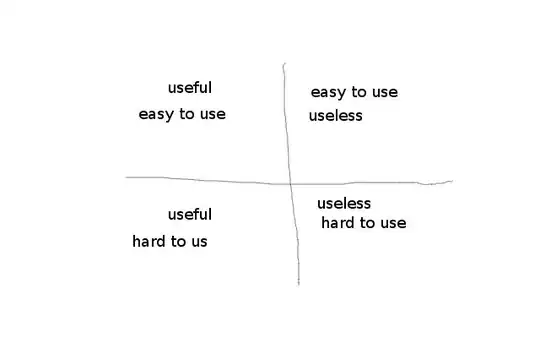

Here is the result in the first case (fast):

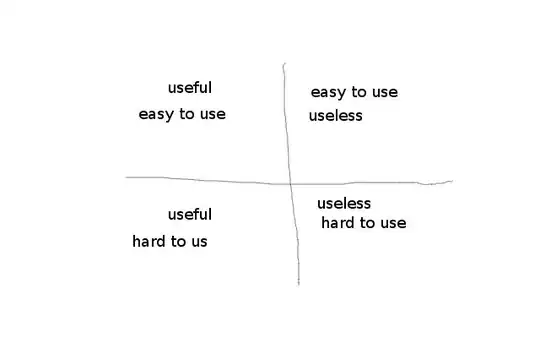

Here is the result in the last one (slow):

I put in blue the instructions that are the slowest in the two loop (by a far margin). The cost of the instruction is provided on the right. The absolute timings are not really important : what mainly matters is the time taken by an instruction relative to the others.

Analysis of the low-level profiling results

In the slowest function, we can see that two instructions are the bottleneck, especially in the last benchmark : inc rbx and mov qword ptr [rax-0x8], rcx. inc rbx is generally cheap (1 cycle) but is on the critical path of the dependency chain. Thus, it can limit the speed of the loop regarding the scheduling of the uops on the available ports. That being said, there is no reason for this instruction to be a bigger issue in the last benchmark. In fact, the relative time taken by this instruction is smaller in the last benchmark since it is slower. However, the second instruction is getting relatively much slower : this is the real bottleneck of this function.

One should remember that processors execute instructions in parallel with a (very large) OoO window. Thus, in practice an instruction can be reported as being one on which the processor stall while it is actually bounded by the previous dependent instruction. As a result, inc qword ptr [rcx] can be responsible for the other to be slow. In fact, regarding the above reverse-engineered C code, we can deduce that rcx is a pointer to one of the object coming from the initial repeated list. The thing is incrementing the same variable in the same cache line 4 times is slower than incrementing 4 different variables, especially if they are located on 4 different cache lines on modern x86-64 processors (at least Intel ones). This is because the L1 cache has a latency of typically 3-4 cycles and the latency has to be paid to read the value, and then to write it, read it again and so on.

Note though that modern x86-64 processors are able to read a variable faster from the cache if it has been written recently. Indeed, the processor can detect the dependency and they can transfer the value from a register to another rather than reloading data from the cache so to reduce the latency of the write + read. This operation called store-forwarding is not free though. AFAIK, the latency of such an operation is of typically 4-5 cycles on recent Intel processors so it just mitigate the overheads but the latency is large enough to be the main bottleneck (for this function). For more information please read this post about caches and the store-forwarding or even this great article about store-forwarding.

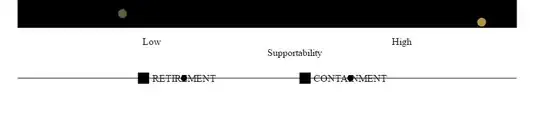

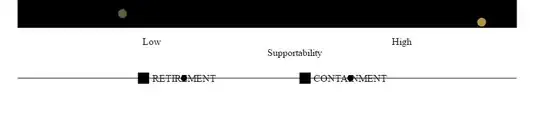

Regarding the second function, we can use the same logic and conclude that jz 0x180058764 <Block 26> is the main bottleneck certainly because of the sub qword ptr [rbx], 0x1 which is also expensive due to the same reason: the amount of parallelism of the store-forwarding operations limit the speed of the loop. When there is only one object in the initial list, the critical path of the dependency chain of the instructions to execute is much longer.

Note that a 5 cycle loop iteration done twice results in a 2*5*1000/4.5e9 = 2.22 µs time. This is consistant with the experimental results of the last benchmark (one object). Note the other instructions can be mostly executed in parallel (i.e. we cannot simply add timings). With two objects, the time is 1.11 µs and the processor can better overlap the latency with many instructions (though it is not perfect). With 4 objets or more, the latency of the store-forwarding should be mostly overlapped with other instructions. This explain why the low-level profiling results show more instructions being the ones one which the processor stalls. The stores are still relatively expensive since my processor can only execute 1 store per cycle, not to mention the processor track the dependency with all other reads in the loop (and use speculation for this to be fast).