You need to detect the contact areas first, then limit the other detections to be within those areas

So I took the advice from the author of DIPlib. I also enhanced the light source and changed the background from white to black. The following is what I have done so far:

import diplib as dip

import math

img = dip.ImageRead('my2.png')

obj = dip.Threshold(img)[0]

dip.viewer.Show(obj)

obj3 = dip.Erosion(obj, 6)

# This operator stabilizes the final result tremendously

obj3 = dip.ClosingByReconstruction(obj3, 6)

dip.viewer.Show(obj3)

obj4 = img.Copy()

obj4.Mask(obj3)

dip.viewer.Show(obj4)

lab = dip.Label(obj3, minSize = 1700, maxSize = 6000)

msr = dip.MeasurementTool.Measure(lab,img,['Size'])

print(msr)

local_height = dip.Tophat(obj4, 9)

# I don't know why Tophat produces some strange inselbergs, so filter them all out

mask = local_height < 100

dip.viewer.Show(mask)

local_height = local_height.Copy()

local_height.Mask(mask)

dip.viewer.Show(local_height)

inselbergs = dip.HysteresisThreshold(local_height, 20, 50)

dip.viewer.Show(dip.Overlay(obj4, inselbergs))

labels = dip.Label(inselbergs, minSize = 3, maxSize = 16)

dip.viewer.Show(dip.Overlay(obj4, labels))

# The result is greatly improved, but I still have to find the pre-punched holes and use them as a mask to filter out the glitches near them

dip.ImageWrite(dip.Overlay(obj4, labels), 'out3.jpg')

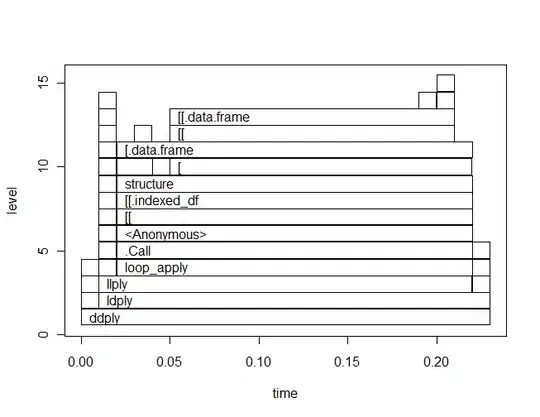

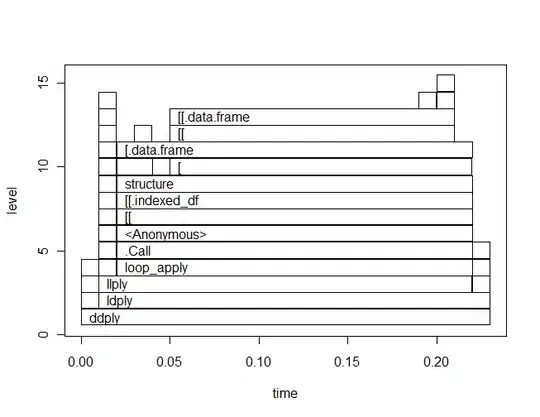

This result is not bad, although extra work is still needed to filter out the spots near the pre-punched holes. DIPlib is awesome: it provides a nice abstraction for manipulating images, but at the price of relatively slow processing compared to OpenCV.
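To make the `dip.HysteresisThreshold(local_height, 20, 50)` step in the script above less of a black box, here is a minimal pure-Python sketch of the idea: pixels at or above the high threshold act as seeds, and pixels at or above the low threshold are kept only if they are connected to a seed. This is an illustration, not DIPlib's implementation; the function name `hysteresis_threshold`, the 8-connectivity, and the tiny test image are my own assumptions.

```python
from collections import deque

def hysteresis_threshold(image, low, high):
    """Keep pixels >= low that are 8-connected to at least one pixel >= high.

    Illustrative only: the connectivity and the list-of-lists image format
    are assumptions, not taken from DIPlib.
    """
    rows, cols = len(image), len(image[0])
    out = [[False] * cols for _ in range(rows)]

    # Seed the flood fill with every pixel that passes the high threshold.
    queue = deque()
    for r in range(rows):
        for c in range(cols):
            if image[r][c] >= high:
                out[r][c] = True
                queue.append((r, c))

    # Grow the seeds through neighbours that pass the low threshold.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not out[nr][nc] and image[nr][nc] >= low):
                    out[nr][nc] = True
                    queue.append((nr, nc))
    return out

# Made-up "local height" values: one bump with a strong core (60) and one
# weak, isolated bump (30) that never reaches the high threshold.
local_height = [
    [0,  0,  0,  0,  0, 0],
    [0, 25, 60, 30,  0, 0],
    [0,  0,  0,  0,  0, 0],
    [0,  0,  0,  0, 30, 0],
]
result = hysteresis_threshold(local_height, 20, 50)
for row in result:
    print("".join("#" if v else "." for v in row))
```

The weak bump is dropped even though it is above the low threshold, which is why this step suppresses faint noise while keeping the full extent of the real spots.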

Finally, here is some code to find the mask of the pre-punched holes:

import diplib as dip

import math

img = dip.ImageRead('my2.png')

# Extract object

obj = dip.Threshold(img)[0]

dip.viewer.Show(obj)

obj3 = dip.Dilation(obj, 5)

dip.viewer.Show(~obj3)

obj4 = dip.EdgeObjectsRemove(~obj3)

dip.viewer.Show(obj4)

lab = dip.Label(obj4, minSize = 500, maxSize = 700)

dip.viewer.Show(lab)

msr = dip.MeasurementTool.Measure(lab,img,['Size'])

print(msr)
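The hole mask found above is meant to suppress the false detections near the pre-punched holes. One possible way to combine the two results, sketched in plain Python so it runs without any library: dilate the hole mask by a safety margin and clear every detection pixel that falls inside it. The helper names `dilate` and `remove_near`, the square structuring element, and the margin value are all hypothetical; with DIPlib the same idea would roughly amount to a dilation of the hole mask followed by a logical AND with its complement.

```python
def dilate(mask, radius):
    """Binary dilation with a (2*radius+1) x (2*radius+1) square (an assumed shape)."""
    rows, cols = len(mask), len(mask[0])
    out = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if mask[r][c]:
                for nr in range(max(0, r - radius), min(rows, r + radius + 1)):
                    for nc in range(max(0, c - radius), min(cols, c + radius + 1)):
                        out[nr][nc] = True
    return out

def remove_near(detections, holes, margin):
    """Clear detection pixels that lie within `margin` pixels of a hole."""
    keep_out = dilate(holes, margin)
    return [[d and not k for d, k in zip(d_row, k_row)]
            for d_row, k_row in zip(detections, keep_out)]

# Made-up 5x7 example: one hole pixel, one detection next to it, one far away.
rows, cols = 5, 7
holes = [[False] * cols for _ in range(rows)]
holes[2][1] = True
detections = [[False] * cols for _ in range(rows)]
detections[2][2] = True   # adjacent to the hole: should be removed
detections[2][5] = True   # far from the hole: should survive

cleaned = remove_near(detections, holes, margin=1)
```

Note that this clears individual pixels rather than whole labeled objects; a detection that only partly overlaps the margin would be trimmed, not removed.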

I am a Haskeller, and ultimately I would like to integrate the code into my own machine vision framework. So I rewrote the algorithm in C++ so that I can easily integrate it using the inline-c-cpp framework.

#include <iostream> // for std::cout

#include "diplib.h"

#include "dipviewer.h"

#include "diplib/simple_file_io.h"

#include "diplib/linear.h" // for dip::Gauss()

#include "diplib/segmentation.h" // for dip::Threshold()

#include "diplib/regions.h" // for dip::Label()

#include "diplib/measurement.h"

#include "diplib/mapping.h" // for dip::ContrastStretch() and dip::ErfClip()

#include "diplib/binary.h"

#include "diplib/testing.h"

#include "diplib/generation.h"

#include "diplib/statistics.h"

#include "diplib/morphology.h"

#include "diplib/detection.h"

#include "diplib/display.h"

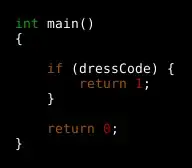

int main() {

dip::testing::Timer timer;

dip::Image circle;

timer.Reset();

auto a = dip::ImageRead("./my2.jpg");

dip::Image b, c, d, e, f, g, labels;

dip::Threshold(a, b);

c = dip::ClosingByReconstruction(b,6);

d = dip::Erosion(c,1);

f = a.Copy();

f.At(~d).Fill(0);

e = dip::FrangiVesselness(f, {0.67});

dip::viewer::Show(e);

g = dip::HysteresisThreshold(e,0.12,0.4);

dip::viewer::Show(g);

labels = dip::Label(g, 0, 3, 30);

dip::MeasurementTool measurementTool;

auto msr = measurementTool.Measure(labels, f, {"Radius"});

std::cout << msr << '\n';

dip::Image feature = dip::ObjectToMeasurement(labels, msr[ "Radius" ]);

// filter out extra regions through Radius.StdDev

labels.At( feature[3] > 0.0002 ) = 0;

dip::viewer::Show(dip::Overlay(a,labels));

dip::viewer::Spin();

return 0;

}
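The last filter in the C++ code keeps or drops regions based on the fourth value of the `Radius` feature, which the comment identifies as the standard deviation. The intuition is that a round spot has nearly constant distance from its centre to its boundary, so the spread of those distances is small. The Python sketch below illustrates that intuition only; `radius_stats`, the use of the plain mean of the boundary points as the centre, and the synthetic shapes are my assumptions and do not reproduce DIPlib's chain-code-based computation.

```python
import math

def radius_stats(boundary):
    """Return (max, mean, min, stddev) of centre-to-boundary distances.

    Simplified stand-in: the centre is the mean of the boundary points and
    the standard deviation is the population one (both are assumptions).
    """
    n = len(boundary)
    cx = sum(x for x, _ in boundary) / n
    cy = sum(y for _, y in boundary) / n
    radii = [math.hypot(x - cx, y - cy) for x, y in boundary]
    mean = sum(radii) / n
    var = sum((r - mean) ** 2 for r in radii) / n
    return max(radii), mean, min(radii), math.sqrt(var)

# Synthetic boundaries sampled at 64 angles: a circle and an elongated ellipse.
angles = [2 * math.pi * k / 64 for k in range(64)]
circle = [(10 * math.cos(a), 10 * math.sin(a)) for a in angles]
ellipse = [(20 * math.cos(a), 5 * math.sin(a)) for a in angles]

circle_std = radius_stats(circle)[3]
ellipse_std = radius_stats(ellipse)[3]
print(circle_std < ellipse_std)
```

A threshold on this value therefore separates round blobs from elongated or ragged ones; the actual cut-off has to be tuned on real measurements.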

The following is the CMakeLists.txt for the C++ program:

cmake_minimum_required(VERSION 2.8)

project( detection )

find_package( DIPlib REQUIRED )

add_executable( detection detection.cpp )

# The OpenCV variables were never set (there is no find_package( OpenCV )), so they are dropped here
target_link_libraries( detection DIP DIPviewer )