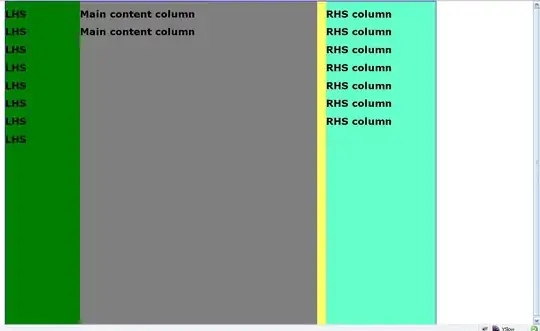

During execution of a php webservice, i need to detach a "time consuming function" to maintain a quick response to the user. That's an example of the flow :

I know that PHP does not support multithreading by itself, but there are other ways to work asynchronously.

Searching on Google and here on Stack Overflow, I found a possible solution using the `shell_exec` function.

I have created this example to simulate the flow:

service.php

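```php
<?php
// Start timefile.php in the background and return immediately.
$urlfile = __DIR__ . "/timefile.php";

// nohup + "&" detach the worker, nice -n 10 lowers its CPU priority, and the
// redirection keeps shell_exec() from waiting on its output; $! is its PID.
$cmd = 'nohup nice -n 10 php -f ' . escapeshellarg($urlfile)
     . ' > log/file.log 2>&1 & printf "%u" $!';

$pid = shell_exec($cmd);
echo $pid;
```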

timefile.php

```php
<?php
// Simulate the slow work.
sleep(10);

$urlfile = __DIR__ . "/logfile.txt";

// Append the current Unix timestamp to logfile.txt.
$myfile = fopen($urlfile, "a");
$txt = time() . "\n";
fwrite($myfile, $txt);
fclose($myfile);
```

The code is only an example (it writes into a text file), and it works asynchronously.

The flow will be deployed on a CentOS server with PHP and MySQL installed. In real life, timefile.php will make 3 to 6 cURL calls, and I estimate an execution time of about 30 seconds for the whole script. The timefile.php script will not act as a daemon: it runs only once each time it is called, so it will not stay alive in, for instance, an infinite while loop.
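Since the cURL calls in timefile.php are presumably independent of each other (an assumption), one option not in the current code is to run them in parallel with PHP's `curl_multi_*` functions, so that each worker lives roughly as long as its slowest request rather than the sum of all of them. A minimal sketch, assuming PHP 7 or later with the cURL extension; `fetch_all()` and its `$timeout` parameter are hypothetical names:

```php
<?php
// Hypothetical helper for timefile.php: run several cURL requests in
// parallel instead of one after another. Returns the response bodies,
// keyed like $urls. Per-request errors are not inspected here.
function fetch_all(array $urls, int $timeout = 20): array
{
    $multi = curl_multi_init();
    $handles = [];

    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true); // return the body as a string
        curl_setopt($ch, CURLOPT_TIMEOUT, $timeout);    // cap each request's duration
        curl_multi_add_handle($multi, $ch);
        $handles[$key] = $ch;
    }

    // Drive all transfers until none is still running.
    do {
        $status = curl_multi_exec($multi, $running);
        if ($running) {
            curl_multi_select($multi); // wait for socket activity instead of busy-looping
        }
    } while ($running && $status === CURLM_OK);

    $results = [];
    foreach ($handles as $key => $ch) {
        $results[$key] = curl_multi_getcontent($ch);
        curl_multi_remove_handle($multi, $ch);
    }
    curl_multi_close($multi);

    return $results;
}
```

To detect failed requests, the status of each handle could additionally be checked with `curl_getinfo()` or `curl_multi_info_read()`.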

Obviously the performance will depend on the server details, but my question is a little different. The web service will (I hope) be called many times (I estimate about 1000 times per hour), and there may also be concurrency issues.
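As a rough back-of-the-envelope estimate, assuming the calls are spread evenly over the hour and each worker runs about 30 seconds, Little's law ($L = \lambda W$: average number in the system equals arrival rate times average time in the system) gives the average number of detached processes alive at once:

$$
L = \lambda W = \frac{1000}{3600\ \text{s}} \times 30\ \text{s} \approx 8.3
$$

So on average about 8 workers would be running concurrently; bursts of requests would push this number temporarily higher.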

My code works as expected, so my question is:

Using this approach (`shell_exec`, as in the example), will the high number of detached processes cause performance problems on the server?
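One common way to put an upper bound on the number of detached processes doing the slow work at the same time is a lock-file semaphore: a fixed number of slot files, each of which a worker must lock exclusively while it runs. A minimal sketch, assuming a Linux server where PHP's `flock()` maps to flock(2); `acquire_worker_slot()`, the slot-file names, the `locks` directory and the limit of 10 are all hypothetical:

```php
<?php
// Hypothetical concurrency cap: a worker may run only while it holds an
// exclusive lock on one of $maxWorkers slot files in $dir.
// Returns the open handle of the acquired slot, or null if all are busy.
function acquire_worker_slot(string $dir, int $maxWorkers)
{
    for ($i = 0; $i < $maxWorkers; $i++) {
        // Mode "c": create the file if missing, never truncate it.
        $fh = fopen($dir . "/worker-slot-$i.lock", "c");
        if ($fh === false) {
            continue;
        }
        // LOCK_NB: fail immediately instead of waiting if the slot is taken.
        if (flock($fh, LOCK_EX | LOCK_NB)) {
            return $fh; // keep the handle open: the lock lasts until it is closed
        }
        fclose($fh);
    }
    return null;
}

// Possible use at the top of timefile.php (the directory must already exist):
// $slot = acquire_worker_slot(__DIR__ . "/locks", 10);
// if ($slot === null) { exit(1); } // all slots busy: skip, or retry later
// ... slow work (the cURL calls) ...
// fclose($slot); // releases the lock (it is also released when the process exits)
```

Exiting when every slot is busy drops that job, so a real setup would more likely retry after a delay or record the job (for example in MySQL) for later processing.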