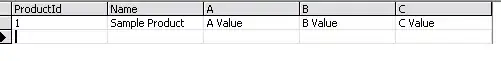

Suppose I have the following data:

```r
library(forecast)
library(lubridate)

set.seed(123)

# Weekly dates from 2010-01-01 to 2023-01-01
weeks <- seq(as.Date("2010-01-01"), as.Date("2023-01-01"), by = "week")
counts <- rpois(length(weeks), lambda = 50)
df <- data.frame(Week = as.character(weeks), Count = counts)

# Convert Week column to Date format
df$Week <- as.Date(df$Week)

# Create a time series object
ts_data <- ts(df$Count, frequency = 52, start = c(year(min(df$Week)), 1))
```

I am trying to learn how to use "Rolling Cross Validation" for Time Series Models (e.g. ARIMA) in R.

As I understand it, with the data in chronological order, this involves:

- Fit a model to the first 60 data points, predict the next 5 and record the error

- Next, fit a model to the first 65 points, predict the next 5 and record the error

- etc.
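To make the steps above concrete, here is a minimal base-R sketch of the procedure as I understand it. It is only an illustration under some assumptions: the toy series `y` stands in for `ts_data`, the names `initial`, `origins` and `errors` are my own, and a fixed AR(1) model from `stats::arima()` is used as a fast placeholder instead of `auto.arima()`.

```r
# Rolling-origin evaluation: expanding training window, fixed forecast horizon.
set.seed(123)
y <- ts(rpois(200, lambda = 50), frequency = 52)  # toy series standing in for ts_data

initial <- 60  # size of the first training window
h       <- 5   # forecast horizon, also used as the step between origins

# Last training index of each fold: 60, 65, 70, ...
origins <- seq(initial, length(y) - h, by = h)
errors  <- matrix(NA_real_, nrow = length(origins), ncol = h)

for (i in seq_along(origins)) {
  n_train <- origins[i]
  train   <- y[1:n_train]                      # first n_train points
  actual  <- y[(n_train + 1):(n_train + h)]    # the next h points

  # Placeholder model (assumption): AR(1) with a mean term, not auto.arima()
  fit  <- arima(train, order = c(1, 0, 0), method = "ML")
  pred <- predict(fit, n.ahead = h)$pred

  errors[i, ] <- actual - as.numeric(pred)     # one row of errors per fold
}

mae  <- mean(abs(errors))
rmse <- sqrt(mean(errors^2))
```

Each row of `errors` holds the 5 forecast errors for one fold, so the overall MAE and RMSE are simple summaries of that matrix.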

Previously, I tried to write the R code for this procedure myself (see "Correctly understanding loop iterations"), but this proved difficult, so I am now looking for a ready-made implementation.

While searching online, I found the following function, which I think might accomplish this task: https://search.r-project.org/CRAN/refmans/forecast/html/tsCV.html

I tried to run this function on my data:

```r
# note: I am specifically interested in using the auto.arima() function
far2 <- function(x, h) {
  forecast(auto.arima(x), h = h)
}

e <- tsCV(ts_data, far2, h = 5)
```

The code seems to run, but I am not sure whether I am using it correctly.

For example:

Is this code fitting a time series model to the first 5 points, predicting the next 5 and recording the error, then fitting a model to the first 10 points, predicting the next 5 and recording the error, and so on?

Also, once the code finishes running, do I need to calculate the MAE and RMSE myself?
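If the errors do have to be summarised by hand, my guess is that it would look something like the sketch below. It assumes `e` has the shape documented for `tsCV()` with `h = 5`: a matrix with one row per forecast origin and one column per horizon, with `NA` where no error is available. The matrix here is simulated stand-in data, not real `tsCV()` output.

```r
# Stand-in for the matrix returned by tsCV(ts_data, far2, h = 5) (assumption)
set.seed(1)
e <- matrix(rnorm(50 * 5, sd = 7), ncol = 5,
            dimnames = list(NULL, paste0("h=", 1:5)))
e[1:3, ]   <- NA  # e.g. early origins with no usable forecast
e[50, 2:5] <- NA  # e.g. horizons that run past the end of the series

# Error measures per forecast horizon (one value per column)
mae_by_h  <- colMeans(abs(e), na.rm = TRUE)
rmse_by_h <- sqrt(colMeans(e^2, na.rm = TRUE))

# Error measures pooled over all horizons
mae_all  <- mean(abs(e), na.rm = TRUE)
rmse_all <- sqrt(mean(e^2, na.rm = TRUE))
```

The `na.rm = TRUE` matters because any row without a forecast would otherwise turn every summary into `NA`.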

Can someone please comment on this?

Thanks!

Note: Is it better to use something like this https://business-science.github.io/modeltime.resample/articles/getting-started.html ?