I have extracted the attention score/weight matrix from the last attention head of the last layer of my BERT model, but I am not sure how to read it. The matrix is shown below. I tried to find more information in the literature, without success. Any insights? I am confused because the matrix is not symmetric and each row sums to 1. Thanks a lot!

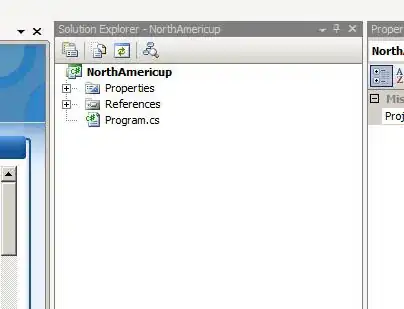

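```python
from transformers import BertModel, BertTokenizer

tokenizer = BertTokenizer.from_pretrained('Rostlab/prot_bert')
# output_attentions=True makes the model also return the per-layer attention weights
model = BertModel.from_pretrained('Rostlab/prot_bert', output_attentions=True)

inputs = tokenizer(input_text, return_tensors='pt')
attention_mask = inputs['attention_mask']

outputs = model(inputs['input_ids'], attention_mask=attention_mask)

attention = outputs.attentions      # tuple with one tensor per layer (30 layers)
last_layer = attention[-1]          # shape: (batch, num_heads, seq_len, seq_len)
head_attention = last_layer[0, -1]  # first sequence, last head: (seq_len, seq_len)

# ... code to read it as a matrix with token labels
```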
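
For reference on the two properties mentioned above, here is a minimal sketch assuming the standard scaled dot-product attention used in BERT, where one head's weights are softmax(QK^T / sqrt(d_head)) with the softmax taken over the keys, i.e. along each row. The toy sizes and random projection matrices (`W_q`, `W_k`) are made up for illustration and are not ProtBert's actual weights.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def attention_weights(X, W_q, W_k):
    """Scaled dot-product attention weights for a single head.

    Row i is the distribution over key tokens j that query token i attends to.
    """
    Q = X @ W_q                               # queries: (seq_len, d_head)
    K = X @ W_k                               # keys:    (seq_len, d_head)
    scores = Q @ K.T / np.sqrt(Q.shape[-1])   # raw scores: (seq_len, seq_len)
    return softmax(scores, axis=-1)           # normalise each row over the keys

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 8, 4            # toy sizes, not ProtBert's
X = rng.normal(size=(seq_len, d_model))       # stand-in token embeddings
W_q = rng.normal(size=(d_model, d_head))      # hypothetical query projection
W_k = rng.normal(size=(d_model, d_head))      # separate, hypothetical key projection

A = attention_weights(X, W_q, W_k)
print(np.allclose(A.sum(axis=1), 1.0))  # True: each row sums to 1
print(np.allclose(A, A.T))              # False: A is not symmetric
```

Because queries and keys come from different projections, and because each row is normalised independently, the weight token i gives to token j generally differs from the weight token j gives to token i.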
