import discord
import openai
import os

openai.api_key = os.environ.get("OPENAI_API_KEY")

#Specify the intent
intents = discord.Intents.default()
intents.members = True

#Create Client
client = discord.Client(intents=intents)

async def generate_response(message):
    prompt = f"{message.author.name}: {message.content}\nAI:"
    response = openai.Completion.create(
        engine="gpt-3.5-turbo",
        prompt=prompt,
        max_tokens=1024,
        n=1,
        stop=None,
        temperature=0.5,
    )
    return response.choices[0].text.strip()

@client.event
async def on_ready():
    print(f"We have logged in as {client.user}")

@client.event
async def on_message(message):
    if message.author == client.user:
        return
    response = await generate_response(message)
    await message.channel.send(response)

discord_token = 'DiscordToken'

client.start(discord_token)

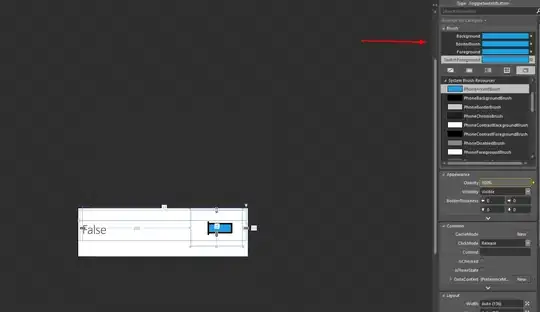

I have tried different ways of accessing the API key, including adding it to my environment variables.

What else can I try, or where am I going wrong? I'm pretty new to programming. Error message:

openai.error.AuthenticationError: No API key provided. You can set your API key in code using 'openai.api_key = <API-KEY>', or you can set the environment variable OPENAI_API_KEY=<API-KEY>). If your API key is stored in a file, you can point the openai module at it with 'openai.api_key_path = <PATH>'. You can generate API keys in the OpenAI web interface. See https://onboard.openai.com for details, or email support@openai.com if you have any questions.
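
A quick way to check whether the key is actually visible to the Python process might look like the sketch below. `load_api_key` is a hypothetical helper name, not part of the openai library; it only wraps the same `os.environ` lookup used above and fails early with a clearer message when the variable is missing or empty.

```python
import os


def load_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise if it is missing or blank.

    `env` defaults to os.environ; passing a plain dict makes the check easy to try out.
    """
    if env is None:
        env = os.environ
    key = (env.get(name) or "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set in this process. If it was added after the "
            "terminal or IDE was opened, restart that program and try again."
        )
    return key
```

With this in place, `openai.api_key = load_api_key()` would raise at startup instead of failing later inside the first API call.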

EDIT

I solved the "No API key provided" error. Now I get the following error message:

openai.error.InvalidRequestError: This is a chat model and not supported in the v1/completions endpoint. Did you mean to use v1/chat/completions?
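
For reference, the error points at the chat endpoint, which takes a list of role/content messages rather than a single `prompt` string. A minimal sketch of what that call might look like is below, assuming a pre-1.0 release of the openai library (the `openai.error` names in the traceback suggest that is what is installed). The helper names `build_chat_messages` and `generate_chat_response` and the system prompt text are illustrative assumptions, not something from the code above.

```python
def build_chat_messages(author_name, content):
    """Turn one Discord message into the list-of-dicts format used by the chat endpoint."""
    return [
        # Hypothetical system prompt; adjust or drop as needed.
        {"role": "system", "content": "You are a helpful assistant in a Discord server."},
        {"role": "user", "content": f"{author_name}: {content}"},
    ]


def generate_chat_response(author_name, content):
    # Imported here so build_chat_messages can be tried without the library installed.
    import openai  # assumes openai < 1.0, where openai.ChatCompletion exists

    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",  # chat models take `model` and `messages`, not `prompt`
        messages=build_chat_messages(author_name, content),
        max_tokens=1024,
        temperature=0.5,
    )
    # Chat responses carry the text under message.content rather than .text
    return response.choices[0].message.content.strip()
```

Inside `generate_response`, the call would then become something like `generate_chat_response(message.author.name, message.content)`.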