I am trying to break a large dataframe (7 million records) into multiple CSV files of 800k records each. Below is the complete code:

from pyspark.sql import SparkSession

options = {

"pathGlobalFilter": "*.csv",

"header": "True",

}

spark = SparkSession.builder.config("spark.driver.host","localhost").appName("CSV Reader").getOrCreate()

csv_path = "C:\\Users\\rajat.kapoor\\Desktop\\155_Lacs_Raw_Data\\OutputFiles_CSV"

RawData_Combined_Revolt_Only = spark.read.format("csv").options(**options).load(csv_path)

# Import necessary libraries

from pyspark.sql.functions import monotonically_increasing_id

# Define the chunk size

chunk_size = 800000

# Add a unique ID column to the dataframe

RawData_Combined_Revolt_Only = RawData_Combined_Revolt_Only.withColumn("id", monotonically_increasing_id())

# Repartition the dataframe based on the chunk size

RawData_Combined_Revolt_Only = RawData_Combined_Revolt_Only.repartition((RawData_Combined_Revolt_Only.count() / chunk_size) + 1)

# Write each partition to a separate CSV file

RawData_Combined_Revolt_Only.write.csv("C:\\Users\\rajat.kapoor\\Desktop\\Output PySpark Folder", header=True, mode="overwrite")

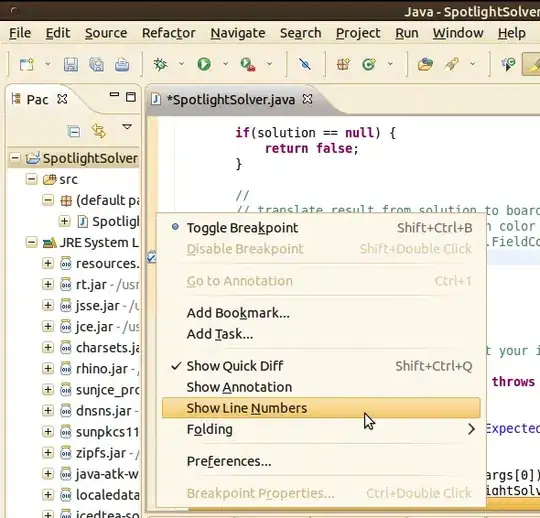

It is giving the below error

Py4JJavaError

with the main error in the image shown below
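A side note on the repartition step above: in Python 3, `count() / chunk_size` evaluates to a float (about 9.75 for 7 million rows once the `+ 1` is added), while `repartition` is documented as taking an int for `numPartitions`. Below is a minimal sketch in plain Python of how an integer partition count could be computed. `num_partitions` is a hypothetical helper name, and 7,000,000 is only a stand-in for the real `count()` result. Whether this has anything to do with the `Py4JJavaError` is not clear from the information above.

```python
def num_partitions(row_count: int, chunk_size: int) -> int:
    """Return the number of chunks needed to hold row_count rows
    with at most chunk_size rows per chunk (always at least 1)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    # Ceiling division using integers only, so the result is an int
    # and there is no floating-point rounding for large counts.
    return max(1, (row_count + chunk_size - 1) // chunk_size)


# Assumption: 7,000,000 stands in for RawData_Combined_Revolt_Only.count()
n = num_partitions(7_000_000, 800_000)
print(n, type(n).__name__)  # prints: 9 int

# The expression from the question, for comparison, is a float
print((7_000_000 / 800_000) + 1)  # prints: 9.75
```

The result could then be passed as `repartition(n)`. Note that `repartition` spreads rows roughly evenly across partitions, so this gives about 9 files of similar size rather than files of exactly 800k rows each.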