I run Hasura as a GraphQL server in a Docker container. I query it with aiohttp from another Docker container and get very low RPS, and the query execution time reported by Hasura is also high.

But when I stress-tested Hasura with locust, I got pretty good performance.

Now I'm a little confused: why is there such a big difference in performance?

    import asyncio
    import time

    import aiohttp


    async def execute_query(session, N):
        query = """
        query my_query {...
        }
        """
        # Assumed: payload wraps the query in the standard GraphQL JSON body
        payload = {"query": query}
        async with session.post('http://hasura:8080/v1/graphql/', json=payload, ssl=False) as resp:
            data = await resp.json()
            if not data.get('data'):
                print(f"No data {data}")
        return data


    async def send_requests(N: int):
        # One shared session; all N requests are started at once
        async with aiohttp.ClientSession(
            connector=aiohttp.TCPConnector(limit=90000),
            headers={'x-hasura-admin-secret': 'my-admin-secret'},
        ) as session:
            return await asyncio.gather(*[
                execute_query(session, N) for i in range(N)
            ])


    def test_rpc(N):
        start_timestamp = time.time()
        asyncio.run(send_requests(N))
        task_time = round(time.time() - start_timestamp, 2)
        rps = round(N / task_time, 1)
        print(
            f"Requests: {N}; Total time: {task_time} s; RPS: {rps}."
        )


    if __name__ == '__main__':
        test_rpc(100)
        test_rpc(500)
        test_rpc(1000)
        test_rpc(2000)
        test_rpc(5000)
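
One difference I'm aware of between the two tests: the script above starts all N requests at the same time, while locust drives a fixed number of concurrent users. To compare like with like, something along these lines could cap the number of in-flight requests and record per-request latency. This is only a rough sketch: `run_bounded`, `summarize`, and `fake_request` are hypothetical names, `fake_request` is a stand-in for the real `session.post(...)` call, and the concurrency value of 50 is an arbitrary assumption.

```python
import asyncio
import time


async def run_bounded(make_request, n, concurrency):
    """Run n requests with at most `concurrency` in flight.

    Returns per-request latencies in seconds, measured after a slot
    is acquired, so time spent waiting for a slot is excluded.
    """
    semaphore = asyncio.Semaphore(concurrency)

    async def timed_call():
        async with semaphore:
            started = time.perf_counter()
            await make_request()
            return time.perf_counter() - started

    return await asyncio.gather(*(timed_call() for _ in range(n)))


async def fake_request():
    # Hypothetical stand-in for the real HTTP call; sleeps about 10 ms.
    await asyncio.sleep(0.01)


def summarize(latencies):
    """Return count, median, and maximum latency for a non-empty list."""
    ordered = sorted(latencies)
    return {
        "count": len(ordered),
        "median_s": ordered[len(ordered) // 2],
        "max_s": ordered[-1],
    }


if __name__ == "__main__":
    latencies = asyncio.run(run_bounded(fake_request, n=200, concurrency=50))
    print(summarize(latencies))
```

In the real test, `make_request` would be a small coroutine wrapping `execute_query(session, N)`, with `concurrency` set to the number of users used in the locust run.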

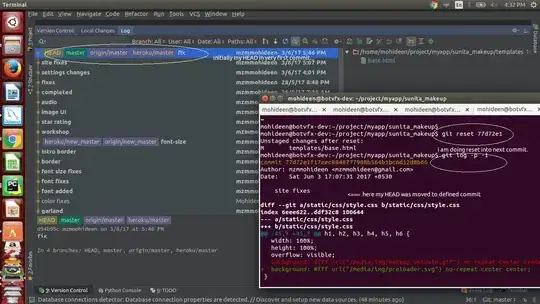

Using aiohttp:

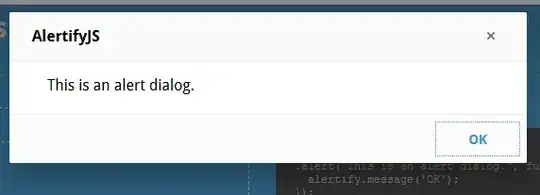

Using locust: