I am working on a task to extract some information (in HINDI) from a pdf file and convert it into a data frame.

I have tried many things and followed many articles, and answers on stack overflow as well. I tried different libraries like easy OCR, paddle OCR, and others but was unable to get the correct output.

Here is the link for the document. Link

Things I have tried:

- How to improve Hindi text extraction?

- How do I display the contours of an image using OpenCV Python?

- https://amannair723.medium.com/pdf-to-excel-using-advance-python-nlp-and-computer-vision-aka-document-ai-23cc0fb56549

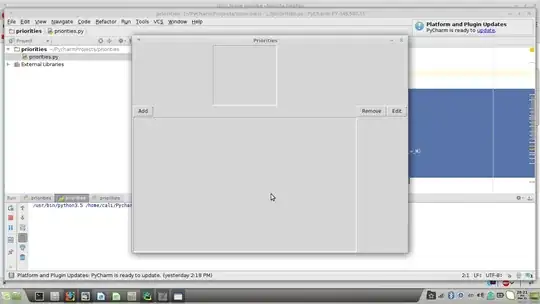

It seems that I am unable to get the exact contours to create the bounding box. Below you can see the image of the output I am getting.

All I need is to convert this information to a data frame where the columns would be:- नाम: पति का नाम / पिता का नाम: मकान संख्याः an so on.

Below is the code I am using to get data:-

import cv2

import pytesseract

import numpy as np

from pytesseract import Output

image = cv2.imread('pages_new/page3.jpg')

img = image.copy()

mask = np.zeros(image.shape, dtype=np.uint8)

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]

# Filter for ROI using contour area and aspect ratio

countour = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

countour = countour[0] if len(countour) == 2 else countour[1]

for c in countour:

area = cv2.contourArea(c)

peri = cv2.arcLength(c, True)

approx = cv2.approxPolyDP(c, 0.05 * peri, True)

x,y,w,h = cv2.boundingRect(approx)

aspect_ratio = w / float(h)

if area > 10000 and aspect_ratio > .5:

mask[y:y+h, x:x+w] = image[y:y+h, x:x+w]

h, w, c = img.shape

boxes = pytesseract.image_to_boxes(img)

for b in boxes.splitlines():

b = b.split(' ')

img = cv2.rectangle(img, (int(b[1]), h - int(b[2])), (int(b[3]), h - int(b[4])), (0, 255, 0), 2)

d = pytesseract.image_to_data(img, output_type=Output.DICT)

n_boxes = len(d['text'])

for i in range(n_boxes):

if int(d['conf'][i]) > 60:

(x, y, w, h) = (d['left'][i], d['top'][i], d['width'][i], d['height'][i])

img2 = cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)

# cv2.imshow('img', img)

# cv2.imshow('img2', img2)

# Perfrom OCR with Pytesseract

data = pytesseract.image_to_string(mask, lang='Devanagari', config='--psm 6')

print(data)

# cv2.imshow('thresh', thresh)

# cv2.imshow('mask', mask)

Also, Could anyone please confirm if the information on the page changes, Do we have to write a different code for all the documents or we can get a generic script for all the docs?