Currently there seems to be a lot of confusion, caused both by the privacy features introduced in recent RFCs that aim to hide peers' IP addresses and by the logic that prunes redundant information produced through the WebRTC client APIs.

So the question is simple: should a client that is NOT behind a NAT produce a local SRFLX candidate when communicating with a STUN server? Or would it be optimized away because the same public IP is already present in a HOST candidate?

Note that I do get the public IP in a HOST candidate, which confirms that the computer in question is not behind a NAT.
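To make the "public IP in a HOST candidate" check concrete, here is a minimal sketch that parses a candidate string (the value of `RTCIceCandidate.candidate`) and tests whether a HOST candidate carries a non-private IPv4 address. The function names are hypothetical helpers, not part of any WebRTC API, and the addresses are documentation-range examples rather than values from my machine.

```javascript
// Parse an ICE candidate attribute string into its main fields.
// Layout: candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> [raddr <addr>] [rport <port>] ...
function parseCandidate(line) {
  const parts = line.replace(/^a=/, '').replace(/^candidate:/, '').trim().split(/\s+/);
  const parsed = {
    foundation: parts[0],
    component: Number(parts[1]),
    protocol: parts[2].toLowerCase(),
    priority: Number(parts[3]),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7], // parts[6] is the literal token "typ"
  };
  // Remaining tokens come in name/value pairs; pick out the related address and port.
  for (let i = 8; i + 1 < parts.length; i += 2) {
    if (parts[i] === 'raddr') parsed.relatedAddress = parts[i + 1];
    if (parts[i] === 'rport') parsed.relatedPort = Number(parts[i + 1]);
  }
  return parsed;
}

// Returns true for private, loopback, link-local and CGNAT IPv4 ranges,
// false for any other IPv4 literal, and null when the input is not an
// IPv4 literal (IPv6 address, mDNS ".local" name, ...).
function isPrivateOrLocalIPv4(address) {
  const octets = address.split('.').map(Number);
  if (octets.length !== 4 || octets.some((o) => !Number.isInteger(o) || o < 0 || o > 255)) {
    return null;
  }
  const [a, b] = octets;
  return a === 10 || a === 127 ||
    (a === 172 && b >= 16 && b <= 31) ||
    (a === 192 && b === 168) ||
    (a === 169 && b === 254) ||
    (a === 100 && b >= 64 && b <= 127);
}

// True when the candidate is a HOST candidate with a non-private IPv4 address,
// which suggests (but does not prove) that the machine is not behind a NAT.
function hostCandidateExposesPublicIPv4(line) {
  const c = parseCandidate(line);
  return c.type === 'host' && isPrivateOrLocalIPv4(c.address) === false;
}

// Illustrative input only (203.0.113.0/24 is a documentation range).
console.log(hostCandidateExposesPublicIPv4(
  'candidate:1 1 udp 2122260223 203.0.113.7 57752 typ host generation 0'));
```

In a browser this could be fed from the `icecandidate` handler by passing `event.candidate.candidate` to `hostCandidateExposesPublicIPv4`.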

The closest thread I found matching this situation is "WebRTC does not work in the modern browsers when a peer is not behind a NAT because of the obfuscating host address".

There, it is considered a Chromium bug that was supposedly fixed, but I am facing a situation with the most recent build where SRFLX candidates are not generated even though nothing is blocked at the UDP layer. Once behind a NAT, I do receive a SRFLX candidate.

I am testing with the Trickle ICE test page, running Chrome 112.0.5615.50 on Windows 11.

I decided to dive deep into this problem, and I am facing a very peculiar situation.

There are two popular test pages in the wild:

1. https://webrtc.github.io/samples/src/content/peerconnection/trickle-ice/

2. https://icetest.info/

To my surprise, the two test pages do not give coherent results.

Page 2 produces local SRFLX candidates right away, while page 1 produces no SRFLX candidate but lots of HOST candidates instead.

The question is: how come?

Both pages can be considered state of the art. They run in separate tabs, on the same computer, in the same browser, which is wide open to the Internet.

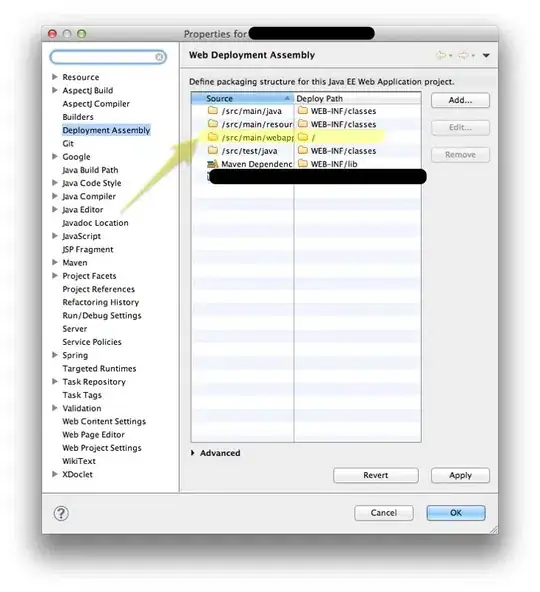

So I fired up Chromium's WebRTC internals.

For the life of me, I cannot see any difference in the API invocations as they are reported.

All I can see is that 2 gets the following onicecandidateerror almost right away:

    url: stun:stun.l.google.com:19302
    address: [0:0:0:x:x:x:x:x]
    port: 57752
    host_candidate: [0:0:0:x:x:x:x:x]:57752
    error_text: STUN host lookup received error.
    error_code: 701

Note that the error does not prevent Chromium's client-side API engine from generating a SRFLX candidate.

Page 1, on the other hand, sees no errors for more than 10 seconds, after which it gets:

    url: stun:stun.l.google.com:19302
    address: 169.254.50.x
    port: 52966
    host_candidate: 169.254.50.x:52966
    error_text: STUN binding request timed out.
    error_code: 701
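To compare such error events side by side, a small helper along these lines can summarize them. It is only a sketch: `summarizeIceCandidateError` is a hypothetical name, and it relies on two things I am fairly sure of, namely that the WebRTC specification reserves error code 701 for "the browser could not reach the server from this local address" (outside the STUN 300-699 range), and that 169.254.0.0/16 is the IPv4 link-local range, from which a public STUN server is normally not reachable.

```javascript
// Summarize an RTCPeerConnectionIceErrorEvent-like object.
// Only the standard fields url, address, port, errorCode and errorText are read.
function summarizeIceCandidateError(event) {
  // 701 is reported by the browser itself, not by the STUN/TURN server.
  const clientSide = event.errorCode === 701;
  // IPv4 link-local (APIPA) interfaces usually have no route to the Internet.
  const linkLocal = typeof event.address === 'string' && event.address.startsWith('169.254.');
  return {
    server: event.url,
    localEndpoint: `${event.address}:${event.port}`,
    clientSide,
    linkLocal,
    text: event.errorText,
  };
}

// Illustrative input shaped like the second dump above (last octet made up).
console.log(summarizeIceCandidateError({
  url: 'stun:stun.l.google.com:19302',
  address: '169.254.50.1',
  port: 52966,
  errorCode: 701,
  errorText: 'STUN binding request timed out.',
}));
```

In a page, this could be wired up with `pc.addEventListener('icecandidateerror', (e) => console.log(summarizeIceCandidateError(e)))`. If `linkLocal` is true, the timeout says something about that particular interface rather than about the STUN server as a whole.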

I include dumps of the data exchange for both 1 and 2. I cannot find any discrepancies in the API invocations between the two. Still looking.

Here go events for 2:

which is followed by ICE candidates and the mentioned error:

Now for 1 (the Trickle ICE test page):

Please note the discrepancies: the two entirely distinct types of errors (codes above 700, i.e. client-side), the timing at which they occur (same browser, same computer, same Google STUN server, same time), and the magnitude of the possible connectivity implications.

Update: I have triple-checked all the lines reported by Chromium's WebRTC internals pane. I could not spot ANY discrepancies in the invoked APIs or in the parameters passed to them (including the internally reported callbacks). After all, it is all about creating a local offer and setting the local description. Both createOffer calls ask only for audio, and that's it. No exotic explicit parameters are set, just defaults.

The following createOfferOnSuccess() invocations seem to contain IDENTICAL data (except for sequence numbers and ports). The same holds for the data passed into setLocalDescription(). Even the transceiver gets modified in exactly the same way, on both 1 and 2.

And yet the subsequent errors and the resulting ICE candidates differ a lot. Now I am extremely curious why that might be.

Why would 2 immediately get "STUN host lookup received error." even though the URL is perfectly fine (as reported by WebRTC internals in the screenshots above) and resolved fine in the other tab? Why does it manage to produce SRFLX ICE candidates while the other tab keeps generating HOST candidates containing public IPs and never comes up with the actual SRFLX candidate, which the remote peer surely expects?

I then looked into the details of, and the differences between, the generated ICE candidates.

For 1, there is no local SRFLX candidate, BUT there is still the following HOST candidate:

So it turns out the STUN server has no trouble communicating with the local node over UDP. Fingers crossed. Then why no SRFLX? And what is the point of having the SRFLX candidate type at all if the remote peer can cope with a HOST candidate alone? Can it?

Let us now take a look at the SRFLX candidate produced by 2:

Same public IP address detected, similar port.

I've also checked the initial parameters passed when creating the connection:

{ iceServers: [stun:stun.l.google.com:19302], iceTransportPolicy: all, bundlePolicy: balanced, rtcpMuxPolicy: require, iceCandidatePoolSize: 0 }

Same in both cases.
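Rather than comparing the two configurations by eye, a tiny diff helper can do it. This is a sketch only: `diffConfigs` is a hypothetical name, and the comparison is a plain key-by-key `JSON.stringify` check, so nested objects with the same content but a different key order would be reported as different.

```javascript
// Return the top-level keys whose values differ between two configuration objects.
function diffConfigs(a, b) {
  const keys = new Set([...Object.keys(a), ...Object.keys(b)]);
  const differences = [];
  for (const key of [...keys].sort()) {
    // A key missing on one side stringifies to undefined and is reported as a difference.
    if (JSON.stringify(a[key]) !== JSON.stringify(b[key])) {
      differences.push({ key, left: a[key], right: b[key] });
    }
  }
  return differences;
}

// Configuration as reported above, written out as a plain object.
const reported = {
  iceServers: [{ urls: 'stun:stun.l.google.com:19302' }],
  iceTransportPolicy: 'all',
  bundlePolicy: 'balanced',
  rtcpMuxPolicy: 'require',
  iceCandidatePoolSize: 0,
};

// Comparing the reported configuration with a copy of itself yields no differences.
console.log(diffConfigs(reported, { ...reported }).length);
```

An empty result backs up the "same in both cases" observation for the values passed in, although it says nothing about state the browser keeps per tab or per origin.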

I even went to the length of checking whether my system assigns different integrity labels (a Microsoft security construct) to these tabs. Nope, both are marked as untrusted.

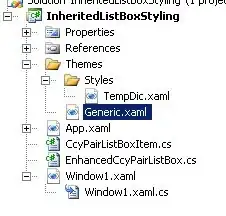

That's the code behind 1:

    async function start() {
      // Clean out the table.
      while (candidateTBody.firstChild) {
        candidateTBody.removeChild(candidateTBody.firstChild);
      }

      gatherButton.disabled = true;
      if (getUserMediaInput.checked) {
        stream = await navigator.mediaDevices.getUserMedia({audio: true});
      }
      getUserMediaInput.disabled = true;

      // Read the values from the input boxes.
      const iceServers = [];
      for (let i = 0; i < servers.length; ++i) {
        iceServers.push(JSON.parse(servers[i].value));
      }
      const transports = document.getElementsByName('transports');
      let iceTransports;
      for (let i = 0; i < transports.length; ++i) {
        if (transports[i].checked) {
          iceTransports = transports[i].value;
          break;
        }
      }

      // Create a PeerConnection with no streams, but force a m=audio line.
      const config = {
        iceServers: iceServers,
        iceTransportPolicy: iceTransports,
      };

      const offerOptions = {offerToReceiveAudio: 1};
      // Whether we gather IPv6 candidates.
      // Whether we only gather a single set of candidates for RTP and RTCP.

      console.log(`Creating new PeerConnection with config=${JSON.stringify(config)}`);
      const errDiv = document.getElementById('error');
      errDiv.innerText = '';
      let desc;
      try {
        pc = new RTCPeerConnection(config);
        pc.onicecandidate = iceCallback;
        pc.onicegatheringstatechange = gatheringStateChange;
        pc.onicecandidateerror = iceCandidateError;
        if (stream) {
          stream.getTracks().forEach(track => pc.addTrack(track, stream));
        }
        desc = await pc.createOffer(offerOptions);
      } catch (err) {
        errDiv.innerText = `Error creating offer: ${err}`;
        gatherButton.disabled = false;
        return;
      }
      begin = window.performance.now();
      candidates = [];
      pc.setLocalDescription(desc);
    }

And that's the code behind 2:

    start() {
      this.startTime = Date.now()
      this.pc = new RTCPeerConnection({iceServers: this.iceServers})
      this.pc.onicegatheringstatechange = (e) => {
        if (this.pc.iceGatheringState === 'complete') {
          this.endTime = Date.now()
        }
        this.emit('icegatheringstatechange', this.pc.iceGatheringState)
      }
      this.pc.onicecandidate = (event) => {
        if (event.candidate) {
          this.candidates.push({time: Date.now(), candidate: event.candidate})
          this.emit('icecandidate', event.candidate)
        }
      }
      this.pc.createOffer({offerToReceiveAudio: true}).then((desc) => {
        return this.pc.setLocalDescription(desc)
      }).catch((error) => {
        console.error(error)
        reject(error)
      })
    }

    stop() {
      this.pc.onicegatheringstatechange = null
      this.pc.onicecandidate = null
      this.pc.close()
      this.pc = undefined
      this.candidates = undefined
    }

So, all in all, this boils down to a couple of things:

- above all, what causes the discrepancies?

- which tab is in a better situation? Had we been passing the generated ICE candidates over a signaling channel to the other peer (which does not exist in our case), application 1 would be sending lots of HOST candidates while application 2 would be sending the SRFLX candidate. Which one is in a better position to establish a successful connection? Personally, I thought a SRFLX candidate is required to consider the STUN service operational to begin with, and that HOST candidates are only good for same-network communication. That's the assumption we rely on.

- anyhow, our code base ends up in the very same spot as the Trickle ICE test page. We follow the Perfect Negotiation pattern, in accordance with the latest RFCs and/or suggestions, and we do not get a local SRFLX candidate in the aforementioned network configuration when interacting with any Google STUN server.

- why does 2 get a local SRFLX candidate? And why does it hit what looks like a DNS-related error right at the start?

- if the local RTC subsystem can generate HOST candidates with the node's public IP address, what is the point of having a dedicated SRFLX ICE candidate?
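For reference on the "optimized away" part of the question: RFC 8445 (section 5.1.3, "Eliminating Redundant Candidates") says a candidate is redundant if its transport address and its base equal those of another candidate, and that the lower-priority one should be dropped. The sketch below applies that rule to plain objects. Whether Chromium follows exactly this path in my situation is an assumption on my part; the object shape and the function name are made up for illustration, and the addresses are documentation-range examples.

```javascript
// Drop candidates whose transport address and base both match another candidate,
// keeping the one with the higher priority (the rule from RFC 8445, section 5.1.3).
function eliminateRedundant(candidates) {
  const best = new Map();
  for (const c of candidates) {
    const key = `${c.protocol}|${c.address}:${c.port}|${c.base.address}:${c.base.port}`;
    const kept = best.get(key);
    if (!kept || c.priority > kept.priority) best.set(key, c);
  }
  return [...best.values()];
}

// No NAT: the STUN server reports the very address and port the host candidate
// already has, so the server-reflexive candidate is redundant.
const noNat = eliminateRedundant([
  { type: 'host', protocol: 'udp', address: '203.0.113.7', port: 52966,
    base: { address: '203.0.113.7', port: 52966 }, priority: 2122260223 },
  { type: 'srflx', protocol: 'udp', address: '203.0.113.7', port: 52966,
    base: { address: '203.0.113.7', port: 52966 }, priority: 1686052607 },
]);

// Behind a NAT: the reflexive address differs from the base, so both survive.
const behindNat = eliminateRedundant([
  { type: 'host', protocol: 'udp', address: '192.168.1.10', port: 52966,
    base: { address: '192.168.1.10', port: 52966 }, priority: 2122260223 },
  { type: 'srflx', protocol: 'udp', address: '198.51.100.4', port: 61000,
    base: { address: '192.168.1.10', port: 52966 }, priority: 1686052607 },
]);

console.log(noNat.map((c) => c.type), behindNat.map((c) => c.type));
```

Under this rule, a missing SRFLX candidate on a machine without a NAT would be expected rather than a fault, which would match what page 1 shows and what I see once I move behind a NAT. It would not explain why page 2 still reports one.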

UPDATE: Well, folks, I've spent a considerable amount of time digging deep into this. I went to the length of replicating or live-altering the code executed in the scope of 1 to mimic the code executed in the scope of 2, and vice versa (live-altering code in the Chrome DevTools console). Guess what? The results were not coherent. First I noticed that 2 was not passing credentials when querying the STUN service and thought to myself: BINGO! (Why would one require credentials just to query for an IP? Besides, I recall there used to be a bug around this years ago.) But I was wrong. No luck. I deliberately deleted these parameters from the final configuration passed into RTCPeerConnection:

    delete iceServers[0].username;
    delete iceServers[0].credential;

And still one implementation keeps receiving SRFLX candidates while the other is stuck with HOST candidates only.
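For anyone repeating the experiment, the same stripping can be done for every server entry without mutating the original array. This is just a sketch; `withoutCredentials` is a hypothetical helper name and the sample entry is illustrative.

```javascript
// Return copies of the iceServers entries with username and credential removed.
function withoutCredentials(iceServers) {
  return iceServers.map(({ username, credential, ...rest }) => ({ ...rest }));
}

// Illustrative entry with empty credential fields.
const original = [{ urls: 'stun:stun.l.google.com:19302', username: '', credential: '' }];
const stripped = withoutCredentials(original);
console.log(JSON.stringify(stripped));
```

The result can then be passed as `iceServers` to `new RTCPeerConnection(...)`, while `original` stays untouched for a second run with credentials.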

Seeing this, I am starting to entertain 'crazy' ideas such as Chrome treating different domains differently because, come on, what are the other options? There are just a few lines of code involved!

A recording of this can be seen on YouTube: https://youtu.be/6NHiFVhiQtk