I'm trying to understand why increasing the number of threads (after a certain number) increases the CPU time instead of decreasing it.

A summary of what the code does: I have a main which create a large vector based on a dimension. I fill it with indexes (0..dimension-1) and then shuffle it. Then, in order to divide-and-conquer, I partition this vector giving a slice to each thread. I prepare a vector of vector of solutions, to give each entry to the threads. Each thread calls a function on each element of its slice and passing the reference to its prepared solution. This function just change the value of the solution at the given index in input, and then it just increases all the elements in the solution (I put this to increasing a bit the comp. time). Here the code:

#include <algorithm>

#include <random>

#include <thread>

#include <iostream>

#include <chrono>

#include <vector>

#include <numeric>

using namespace std;

template<typename T>

inline double getMs(T start, T end) {

return double(

std::chrono::duration_cast<std::chrono::milliseconds>(end - start)

.count()) /

1000;

}

void fun(const std::vector<int>& slice, int dimension,

vector<int>& internal_sol) {

int size = dimension;

for (auto const& s : slice) {

internal_sol[s] = 45;

internal_sol[int(s/2)] = 45;

if (s != 0) {

internal_sol[s - 1] = 45;

}

if (s != internal_sol.size() - 1) {

internal_sol[s + 1] = 45;

}

for (auto& i_s : internal_sol)

i_s += 1;

}

}

int main(int) {

std::random_device rd;

std::mt19937 g(rd());

unsigned int n = std::thread::hardware_concurrency();

std::cout << n << " concurrent threads are supported.\n";

std::srand(unsigned(std::time(nullptr)));

int number_stops = 50000;

int number_transfers = 3;

int number_structures = 2;

auto dimension = number_stops * (number_transfers + 1) * number_structures;

std::vector<int> v(dimension);

std::iota(std::begin(v), std::end(v), 0);

std::shuffle(std::begin(v), std::end(v), g);

printf("stops:\t%d\ntransfers:\t%d\nstructures:\t%d\n\n", number_stops, number_transfers, number_structures);

for (size_t np = 2; np < 17; np++) {

size_t sz = dimension;

size_t part = sz / np;

auto start = std::chrono::steady_clock::now();

std::cout << np << " threads: ";

std::vector<std::thread> threads(np);

auto start_ext_sol = std::chrono::steady_clock::now();

vector<vector<int>> external_sol; //As TAUs from velociraptor

for (size_t i = 0; i < np; i++) {

external_sol.emplace_back(dimension, -1);

}

double elapsed_ext_sol = getMs(start_ext_sol, std::chrono::steady_clock::now());

auto paraTask = [&](size_t start, size_t end, vector<int>& ext_sol) {

for (size_t l = start; l < end; ++l)

fun({v[l]}, dimension, ext_sol);

for (int i = 0;i < ext_sol.size(); i++)

ext_sol[i] = -1;

};

for (size_t i = 0; i < np; i++) {

size_t start = i * part;

size_t length = (i + 1 == np) ? sz - i * part : part;

threads[i] =

std::thread(paraTask, start, start + length, std::ref(external_sol[i]));

}

// Join the threads

for (auto&& thread : threads) thread.join();

double elapsed = getMs(start, std::chrono::steady_clock::now());

printf("parallel completed: %.3f sec. preparing all vectors ext_sols: %.3f sec\n",

elapsed, elapsed_ext_sol);

}

return 0;

}

The result obtained is:

16 concurrent threads are supported.

stops: 50000

transfers: 3

structures: 2

2 threads: parallel completed: 6.776 sec. preparing all vectors ext_sols: 0.000 sec

3 threads: parallel completed: 5.711 sec. preparing all vectors ext_sols: 0.004 sec

4 threads: parallel completed: 5.285 sec. preparing all vectors ext_sols: 0.003 sec

5 threads: parallel completed: 4.892 sec. preparing all vectors ext_sols: 0.001 sec

6 threads: parallel completed: 4.614 sec. preparing all vectors ext_sols: 0.008 sec

7 threads: parallel completed: 4.726 sec. preparing all vectors ext_sols: 0.001 sec

8 threads: parallel completed: 4.281 sec. preparing all vectors ext_sols: 0.004 sec

9 threads: parallel completed: 4.815 sec. preparing all vectors ext_sols: 0.007 sec

10 threads: parallel completed: 4.791 sec. preparing all vectors ext_sols: 0.008 sec

11 threads: parallel completed: 5.594 sec. preparing all vectors ext_sols: 0.002 sec

12 threads: parallel completed: 5.411 sec. preparing all vectors ext_sols: 0.012 sec

13 threads: parallel completed: 5.213 sec. preparing all vectors ext_sols: 0.010 sec

14 threads: parallel completed: 6.159 sec. preparing all vectors ext_sols: 0.005 sec

15 threads: parallel completed: 8.353 sec. preparing all vectors ext_sols: 0.006 sec

16 threads: parallel completed: 6.769 sec. preparing all vectors ext_sols: 0.012 sec

As you can see the time increases. I don't see why this happens. The dimension here is 450.000 integers for each threads, therefore around 1.7 MB (counting 4 bytes for each integer). Thus, we don't go over the dimension of the L1 cache, in my understanding. What is happening?

Here some details about my machine:

- CPU - 11th Gen Intel(R) Core(TM) i7-11700KF @ 3.60GHz

- RAM - 16 GB DDR4

- Windows 11 Compiler - MS_VS 2022

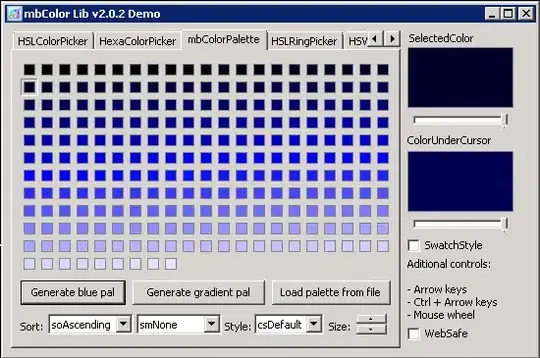

And here the memory hardware report from CPU-Z

PS: I also tried removing the lopp

for (auto& i_s : internal_sol)

i_s += 1

from the function, but the same happens (of course with lower timings)