I am using Parallel.For to start a large number of jobs (say 1000). This works well, but each job is also quite memory intensive, and from what I can tell Parallel.For starts a much higher number of parallel jobs than I would expect.

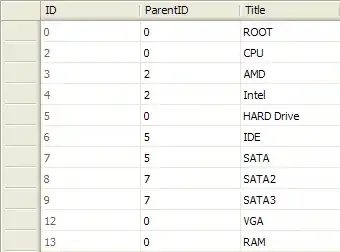

Running on my old home dev box with 4 cores, I see 400+ ongoing jobs, as shown in the image above.

This might be fine in itself, but because each of these jobs runs a relatively memory-intensive algorithm, the program's memory usage is high, and I suspect performance is now suffering due to memory swapping.

Currently I am not using any ParallelOptions, just running with the defaults. But I am wondering if I should be setting MaxDegreeOfParallelism to keep memory usage from exploding. Or am I overthinking this, and does Parallel already take something smarter into account?
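
For reference, this is roughly the change I have in mind. It is only a minimal sketch: `RunJob` is a hypothetical stand-in for my real memory-intensive work, `CappedParallelDemo` and `RunCapped` are names made up for this example, and capping at `Environment.ProcessorCount` is an assumption on my part, not a value I know to be right. The counters are there only so I can check how many jobs actually run at once.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

static class CappedParallelDemo
{
    // Hypothetical stand-in for one memory-intensive job.
    static void RunJob(int i)
    {
        Thread.Sleep(10);
    }

    // Runs `jobs` iterations with at most `maxParallel` running at once
    // and returns the highest number of jobs observed running together.
    public static int RunCapped(int jobs, int maxParallel)
    {
        var options = new ParallelOptions
        {
            MaxDegreeOfParallelism = maxParallel
        };

        int running = 0;
        int peak = 0;

        Parallel.For(0, jobs, options, i =>
        {
            int now = Interlocked.Increment(ref running);

            // Raise the recorded peak if this iteration pushed it higher.
            int seen;
            while (now > (seen = Volatile.Read(ref peak)))
            {
                Interlocked.CompareExchange(ref peak, now, seen);
            }

            RunJob(i);
            Interlocked.Decrement(ref running);
        });

        return peak;
    }

    static void Main()
    {
        // Assumption: one job per logical core is a sensible starting cap.
        int peak = RunCapped(1000, Environment.ProcessorCount);
        Console.WriteLine($"Peak concurrent jobs: {peak}");
    }
}
```

If memory rather than CPU is the real limit, I suppose the cap should instead come from available memory divided by the per-job footprint, but I have not measured that yet.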