I have a simple Spark application in Scala 2.12.

My App

find-retired-people-scala/project/build.properties

sbt.version=1.8.2

find-retired-people-scala/src/main/scala/com/hongbomiao/FindRetiredPeople.scala

package com.hongbomiao

import org.apache.spark.sql.{DataFrame, SparkSession}

object FindRetiredPeople {
  def main(args: Array[String]): Unit = {
    val people = Seq(
      (1, "Alice", 25),
      (2, "Bob", 30),
      (3, "Charlie", 80),
      (4, "Dave", 40),
      (5, "Eve", 45)
    )

    val spark: SparkSession = SparkSession.builder()
      .master("local[*]")
      .appName("find-retired-people-scala")
      .config("spark.ui.port", "4040")
      .getOrCreate()

    import spark.implicits._

    val df: DataFrame = people.toDF("id", "name", "age")
    df.createOrReplaceTempView("people")

    val retiredPeople: DataFrame = spark.sql("SELECT name, age FROM people WHERE age >= 67")
    retiredPeople.show()

    spark.stop()
  }
}

find-retired-people-scala/build.sbt

name := "FindRetiredPeople"

version := "1.0"

scalaVersion := "2.12.17"

libraryDependencies ++= Seq(

"org.apache.spark" %% "spark-core" % "3.4.0",

"org.apache.spark" %% "spark-sql" % "3.4.0",

)

find-retired-people-scala/.jvmopts (only add this file for Java 17; remove it for older Java versions)

--add-exports=java.base/sun.nio.ch=ALL-UNNAMED

Issue

When I run sbt run during local development for testing and debugging, the app runs successfully.

However, I still get a harmless error:

➜ sbt run

[info] welcome to sbt 1.8.2 (Homebrew Java 17.0.7)

# ...

+-------+---+

| name|age|

+-------+---+

|Charlie| 80|

+-------+---+

23/04/24 13:56:40 INFO SparkUI: Stopped Spark web UI at http://10.10.8.125:4040

23/04/24 13:56:40 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

23/04/24 13:56:40 INFO MemoryStore: MemoryStore cleared

23/04/24 13:56:40 INFO BlockManager: BlockManager stopped

23/04/24 13:56:40 INFO BlockManagerMaster: BlockManagerMaster stopped

23/04/24 13:56:40 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

23/04/24 13:56:40 INFO SparkContext: Successfully stopped SparkContext

[success] Total time: 8 s, completed Apr 24, 2023, 1:56:40 PM

Exception in thread "Thread-1" java.lang.RuntimeException: java.nio.file.NoSuchFileException: find-retired-people-scala/target/bg-jobs/sbt_e60ef8a3/target/3d275f27/dbc63e3b/hadoop-client-api-3.3.2.jar

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3089)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:3036)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2914)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2896)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1246)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1863)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1840)

at org.apache.hadoop.util.ShutdownHookManager.getShutdownTimeout(ShutdownHookManager.java:183)

at org.apache.hadoop.util.ShutdownHookManager.shutdownExecutor(ShutdownHookManager.java:145)

at org.apache.hadoop.util.ShutdownHookManager.access$300(ShutdownHookManager.java:65)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:102)

Caused by: java.nio.file.NoSuchFileException: find-retired-people-scala/target/bg-jobs/sbt_e60ef8a3/target/3d275f27/dbc63e3b/hadoop-client-api-3.3.2.jar

at java.base/sun.nio.fs.UnixException.translateToIOException(UnixException.java:92)

at java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:106)

at java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:111)

at java.base/sun.nio.fs.UnixFileAttributeViews$Basic.readAttributes(UnixFileAttributeViews.java:55)

at java.base/sun.nio.fs.UnixFileSystemProvider.readAttributes(UnixFileSystemProvider.java:148)

at java.base/java.nio.file.Files.readAttributes(Files.java:1851)

at java.base/java.util.zip.ZipFile$Source.get(ZipFile.java:1264)

at java.base/java.util.zip.ZipFile$CleanableResource.<init>(ZipFile.java:709)

at java.base/java.util.zip.ZipFile.<init>(ZipFile.java:243)

at java.base/java.util.zip.ZipFile.<init>(ZipFile.java:172)

at java.base/java.util.jar.JarFile.<init>(JarFile.java:347)

at java.base/sun.net.www.protocol.jar.URLJarFile.<init>(URLJarFile.java:103)

at java.base/sun.net.www.protocol.jar.URLJarFile.getJarFile(URLJarFile.java:72)

at java.base/sun.net.www.protocol.jar.JarFileFactory.get(JarFileFactory.java:168)

at java.base/sun.net.www.protocol.jar.JarFileFactory.getOrCreate(JarFileFactory.java:91)

at java.base/sun.net.www.protocol.jar.JarURLConnection.connect(JarURLConnection.java:132)

at java.base/sun.net.www.protocol.jar.JarURLConnection.getInputStream(JarURLConnection.java:175)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:3009)

at org.apache.hadoop.conf.Configuration.getStreamReader(Configuration.java:3105)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3063)

... 10 more

Process finished with exit code 0

My question

How can I hide this harmless error?

My attempt

Based on this, I tried to add hadoop-client by updating build.sbt:

name := "FindRetiredPeople"

version := "1.0"

scalaVersion := "2.12.17"

libraryDependencies ++= Seq(

"org.apache.spark" %% "spark-core" % "3.4.0",

"org.apache.spark" %% "spark-sql" % "3.4.0",

"org.apache.hadoop" %% "hadoop-client" % "3.3.4",

)

However, the error then becomes:

sbt run

[info] welcome to sbt 1.8.2 (Homebrew Java 17.0.6)

[info] loading project definition from hongbomiao.com/hm-spark/applications/find-retired-people-scala/project

| => find-retired-people-scala-build / Compile / compileIncremental 0s

[info] loading settings for project find-retired-people-scala from build.sbt ...

[info] set current project to FindRetiredPeople (in build file:hongbomiao.com/hm-spark/applications/find-retired-people-scala/)

| => find-retired-people-scala / update 0s

[info] Updating

| => find-retired-people-scala / update 0s

https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client_2.12/3.3.4/hadoo…

0.0% [ ] 0B (0B / s)

| => find-retired-people-scala / update 0s

https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client_2.12/3.3.4/hadoo…

0.0% [ ] 0B (0B / s)

[info] Resolved dependencies

| => find-retired-people-scala / update 0s

[warn]

| => find-retired-people-scala / update 0s

[warn] Note: Unresolved dependencies path:

| => find-retired-people-scala / update 0s

[error] sbt.librarymanagement.ResolveException: Error downloading org.apache.hadoop:hadoop-client_2.12:3.3.4

[error] Not found

[error] Not found

[error] not found: /Users/hongbo-miao/.ivy2/localorg.apache.hadoop/hadoop-client_2.12/3.3.4/ivys/ivy.xml

[error] not found: https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client_2.12/3.3.4/hadoop-client_2.12-3.3.4.pom

[error] at lmcoursier.CoursierDependencyResolution.unresolvedWarningOrThrow(CoursierDependencyResolution.scala:344)

[error] at lmcoursier.CoursierDependencyResolution.$anonfun$update$38(CoursierDependencyResolution.scala:313)

[error] at scala.util.Either$LeftProjection.map(Either.scala:573)

[error] at lmcoursier.CoursierDependencyResolution.update(CoursierDependencyResolution.scala:313)

[error] at sbt.librarymanagement.DependencyResolution.update(DependencyResolution.scala:60)

[error] at sbt.internal.LibraryManagement$.resolve$1(LibraryManagement.scala:59)

[error] at sbt.internal.LibraryManagement$.$anonfun$cachedUpdate$12(LibraryManagement.scala:133)

[error] at sbt.util.Tracked$.$anonfun$lastOutput$1(Tracked.scala:73)

[error] at sbt.internal.LibraryManagement$.$anonfun$cachedUpdate$20(LibraryManagement.scala:146)

[error] at scala.util.control.Exception$Catch.apply(Exception.scala:228)

[error] at sbt.internal.LibraryManagement$.$anonfun$cachedUpdate$11(LibraryManagement.scala:146)

[error] at sbt.internal.LibraryManagement$.$anonfun$cachedUpdate$11$adapted(LibraryManagement.scala:127)

[error] at sbt.util.Tracked$.$anonfun$inputChangedW$1(Tracked.scala:219)

[error] at sbt.internal.LibraryManagement$.cachedUpdate(LibraryManagement.scala:160)

[error] at sbt.Classpaths$.$anonfun$updateTask0$1(Defaults.scala:3687)

[error] at scala.Function1.$anonfun$compose$1(Function1.scala:49)

[error] at sbt.internal.util.$tilde$greater.$anonfun$$u2219$1(TypeFunctions.scala:62)

[error] at sbt.std.Transform$$anon$4.work(Transform.scala:68)

[error] at sbt.Execute.$anonfun$submit$2(Execute.scala:282)

[error] at sbt.internal.util.ErrorHandling$.wideConvert(ErrorHandling.scala:23)

[error] at sbt.Execute.work(Execute.scala:291)

[error] at sbt.Execute.$anonfun$submit$1(Execute.scala:282)

[error] at sbt.ConcurrentRestrictions$$anon$4.$anonfun$submitValid$1(ConcurrentRestrictions.scala:265)

[error] at sbt.CompletionService$$anon$2.call(CompletionService.scala:64)

[error] at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

[error] at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:539)

[error] at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

[error] at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

[error] at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

[error] at java.base/java.lang.Thread.run(Thread.java:833)

[error] (update) sbt.librarymanagement.ResolveException: Error downloading org.apache.hadoop:hadoop-client_2.12:3.3.4

[error] Not found

[error] Not found

[error] not found: /Users/hongbo-miao/.ivy2/localorg.apache.hadoop/hadoop-client_2.12/3.3.4/ivys/ivy.xml

[error] not found: https://repo1.maven.org/maven2/org/apache/hadoop/hadoop-client_2.12/3.3.4/hadoop-client_2.12-3.3.4.pom

[error] Total time: 2 s, completed Apr 19, 2023, 4:52:07 PM

make: *** [sbt-run] Error 1

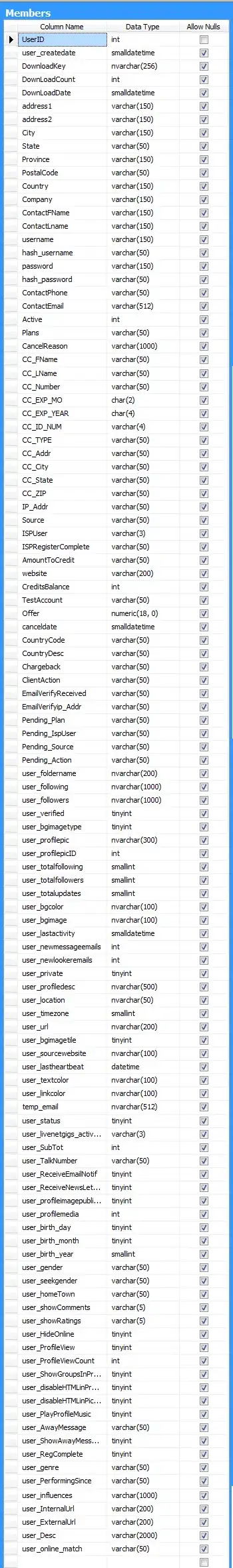

Note that the log expects hadoop-client_2.12-3.3.4.pom. I think the 2.12 suffix means Scala 2.12. However, hadoop-client is not published with a Scala 2.12 suffix.
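The naming difference described above can be sketched in build.sbt. This is only an illustration that reuses the versions from this post and assumes scalaVersion is 2.12.x: the %% operator appends the Scala binary version to the artifact name, while a single % uses the name exactly as written.

```scala
// build.sbt (sketch): how the two operators resolve artifact names
// Assumes scalaVersion := "2.12.17", as in this project.
libraryDependencies ++= Seq(
  // %% appends the Scala binary version, so this resolves spark-sql_2.12
  "org.apache.spark" %% "spark-sql" % "3.4.0",
  // % keeps the artifact name as written, so this resolves hadoop-client
  "org.apache.hadoop" % "hadoop-client" % "3.3.4"
)
```

With %% on hadoop-client, sbt would look for hadoop-client_2.12, which matches the "not found" URL in the log.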

For comparison, this is how it looks for libraries that do have a Scala version, such as spark-sql:

Is there anything I can do to resolve the issue? Thanks!

UPDATE 1 (4/19/2023):

Based on Dmytro Mitin's answer, I changed "org.apache.hadoop" %% "hadoop-client" % "3.3.4" to "org.apache.hadoop" % "hadoop-client" % "3.3.4" in build.sbt, so it now looks like:

name := "FindRetiredPeople"

version := "1.0"

scalaVersion := "2.12.17"

libraryDependencies ++= Seq(

"org.apache.spark" %% "spark-core" % "3.4.0",

"org.apache.spark" %% "spark-sql" % "3.4.0",

"org.apache.hadoop" % "hadoop-client" % "3.3.4"

)

Now the error becomes:

➜ sbt run

[info] welcome to sbt 1.8.2 (Amazon.com Inc. Java 17.0.6)

[info] loading project definition from find-retired-people-scala/project

[info] loading settings for project find-retired-people-scala from build.sbt ...

[info] set current project to FindRetiredPeople (in build file:find-retired-people-scala/)

[info] running com.hongbomiao.FindRetiredPeople

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

23/04/19 19:28:46 INFO SparkContext: Running Spark version 3.4.0

23/04/19 19:28:46 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

# ...

+-------+---+

| name|age|

+-------+---+

|Charlie| 80|

+-------+---+

23/04/19 19:28:48 INFO SparkContext: SparkContext is stopping with exitCode 0.

23/04/19 19:28:48 INFO SparkUI: Stopped Spark web UI at http://10.0.0.135:4040

23/04/19 19:28:48 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

23/04/19 19:28:48 INFO MemoryStore: MemoryStore cleared

23/04/19 19:28:48 INFO BlockManager: BlockManager stopped

23/04/19 19:28:48 INFO BlockManagerMaster: BlockManagerMaster stopped

23/04/19 19:28:48 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

23/04/19 19:28:48 INFO SparkContext: Successfully stopped SparkContext

[success] Total time: 7 s, completed Apr 19, 2023, 7:28:48 PM

23/04/19 19:28:49 INFO ShutdownHookManager: Shutdown hook called

23/04/19 19:28:49 INFO ShutdownHookManager: Deleting directory /private/var/folders/22/ntjwd5dx691gvkktkspl0f_00000gq/T/spark-6cd93a01-3109-4ecd-aca2-a21a9921ecf8

23/04/19 19:28:49 ERROR Configuration: error parsing conf core-default.xml

java.nio.file.NoSuchFileException: find-retired-people-scala/target/bg-jobs/sbt_20affd86/target/f5c922ec/359669fc/hadoop-client-api-3.3.4.jar

at java.base/sun.nio.fs.UnixException.translateToIOException(UnixException.java:92)

at java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:106)

at java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:111)

at java.base/sun.nio.fs.UnixFileAttributeViews$Basic.readAttributes(UnixFileAttributeViews.java:55)

at java.base/sun.nio.fs.UnixFileSystemProvider.readAttributes(UnixFileSystemProvider.java:148)

at java.base/java.nio.file.Files.readAttributes(Files.java:1851)

at java.base/java.util.zip.ZipFile$Source.get(ZipFile.java:1264)

at java.base/java.util.zip.ZipFile$CleanableResource.<init>(ZipFile.java:709)

at java.base/java.util.zip.ZipFile.<init>(ZipFile.java:243)

at java.base/java.util.zip.ZipFile.<init>(ZipFile.java:172)

at java.base/java.util.jar.JarFile.<init>(JarFile.java:347)

at java.base/sun.net.www.protocol.jar.URLJarFile.<init>(URLJarFile.java:103)

at java.base/sun.net.www.protocol.jar.URLJarFile.getJarFile(URLJarFile.java:72)

at java.base/sun.net.www.protocol.jar.JarFileFactory.get(JarFileFactory.java:168)

at java.base/sun.net.www.protocol.jar.JarFileFactory.getOrCreate(JarFileFactory.java:91)

at java.base/sun.net.www.protocol.jar.JarURLConnection.connect(JarURLConnection.java:132)

at java.base/sun.net.www.protocol.jar.JarURLConnection.getInputStream(JarURLConnection.java:175)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:3009)

at org.apache.hadoop.conf.Configuration.getStreamReader(Configuration.java:3105)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3063)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:3036)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2914)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2896)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1246)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1863)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1840)

at org.apache.hadoop.util.ShutdownHookManager.getShutdownTimeout(ShutdownHookManager.java:183)

at org.apache.hadoop.util.ShutdownHookManager.shutdownExecutor(ShutdownHookManager.java:145)

at org.apache.hadoop.util.ShutdownHookManager.access$300(ShutdownHookManager.java:65)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:102)

Exception in thread "Thread-1" java.lang.RuntimeException: java.nio.file.NoSuchFileException: find-retired-people-scala/target/bg-jobs/sbt_20affd86/target/f5c922ec/359669fc/hadoop-client-api-3.3.4.jar

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3089)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:3036)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2914)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2896)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1246)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1863)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1840)

at org.apache.hadoop.util.ShutdownHookManager.getShutdownTimeout(ShutdownHookManager.java:183)

at org.apache.hadoop.util.ShutdownHookManager.shutdownExecutor(ShutdownHookManager.java:145)

at org.apache.hadoop.util.ShutdownHookManager.access$300(ShutdownHookManager.java:65)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:102)

Caused by: java.nio.file.NoSuchFileException: find-retired-people-scala/target/bg-jobs/sbt_20affd86/target/f5c922ec/359669fc/hadoop-client-api-3.3.4.jar

at java.base/sun.nio.fs.UnixException.translateToIOException(UnixException.java:92)

at java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:106)

at java.base/sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:111)

at java.base/sun.nio.fs.UnixFileAttributeViews$Basic.readAttributes(UnixFileAttributeViews.java:55)

at java.base/sun.nio.fs.UnixFileSystemProvider.readAttributes(UnixFileSystemProvider.java:148)

at java.base/java.nio.file.Files.readAttributes(Files.java:1851)

at java.base/java.util.zip.ZipFile$Source.get(ZipFile.java:1264)

at java.base/java.util.zip.ZipFile$CleanableResource.<init>(ZipFile.java:709)

at java.base/java.util.zip.ZipFile.<init>(ZipFile.java:243)

at java.base/java.util.zip.ZipFile.<init>(ZipFile.java:172)

at java.base/java.util.jar.JarFile.<init>(JarFile.java:347)

at java.base/sun.net.www.protocol.jar.URLJarFile.<init>(URLJarFile.java:103)

at java.base/sun.net.www.protocol.jar.URLJarFile.getJarFile(URLJarFile.java:72)

at java.base/sun.net.www.protocol.jar.JarFileFactory.get(JarFileFactory.java:168)

at java.base/sun.net.www.protocol.jar.JarFileFactory.getOrCreate(JarFileFactory.java:91)

at java.base/sun.net.www.protocol.jar.JarURLConnection.connect(JarURLConnection.java:132)

at java.base/sun.net.www.protocol.jar.JarURLConnection.getInputStream(JarURLConnection.java:175)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:3009)

at org.apache.hadoop.conf.Configuration.getStreamReader(Configuration.java:3105)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3063)

... 10 more

And my /target/bg-jobs folder is actually empty.
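A rough way to check whether the jar named in the stack trace is really gone by the time shutdown hooks run could look like the sketch below. It is a hypothetical diagnostic, not part of the app: the object name JarLocationCheck and the helper jarLocation are made up for illustration, and it assumes the Hadoop class is loaded from a jar on the local file system.

```scala
import java.nio.file.{Files, Path, Paths}
import scala.util.Try

// Hypothetical diagnostic helper; the names here are illustrative only.
object JarLocationCheck {
  // Returns the jar or directory a class was loaded from, if the JVM exposes it.
  // Classes from the bootstrap loader (e.g. java.lang.String) have no code source.
  def jarLocation(cls: Class[_]): Option[Path] =
    for {
      domain <- Option(cls.getProtectionDomain)
      source <- Option(domain.getCodeSource)
      url    <- Option(source.getLocation)
      path   <- Try(Paths.get(url.toURI)).toOption
    } yield path

  def main(args: Array[String]): Unit = {
    // Look the Hadoop class up by name so this file compiles without Hadoop.
    val hadoopJar: Option[Path] =
      Try(Class.forName("org.apache.hadoop.conf.Configuration")).toOption
        .flatMap(jarLocation)

    println(s"Hadoop Configuration loaded from: $hadoopJar")

    // Report whether that jar is still on disk when the JVM shuts down.
    sys.addShutdownHook {
      hadoopJar.foreach(p => println(s"$p exists at shutdown: ${Files.exists(p)}"))
    }
  }
}
```

If the hook reports that the path no longer exists, that would be consistent with the NoSuchFileException above and with the empty bg-jobs folder, though the ordering of shutdown hooks is not guaranteed.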

UPDATE 2 (4/19/2023):

I tried Java 8 (Java 11 behaves similarly), but it still shows the same error:

➜ sbt run

[info] welcome to sbt 1.8.2 (Amazon.com Inc. Java 1.8.0_372)

[info] loading project definition from find-retired-people-scala/project

[info] loading settings for project find-retired-people-scala from build.sbt ...

[info] set current project to FindRetiredPeople (in build file:find-retired-people-scala/)

[info] compiling 1 Scala source to find-retired-people-scala/target/scala-2.12/classes ...

[info] running com.hongbomiao.FindRetiredPeople

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

23/04/19 19:26:25 INFO SparkContext: Running Spark version 3.4.0

23/04/19 19:26:25 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

# ...

+-------+---+

| name|age|

+-------+---+

|Charlie| 80|

+-------+---+

23/04/19 19:26:28 INFO SparkContext: SparkContext is stopping with exitCode 0.

23/04/19 19:26:28 INFO SparkUI: Stopped Spark web UI at http://10.0.0.135:4040

23/04/19 19:26:28 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

23/04/19 19:26:28 INFO MemoryStore: MemoryStore cleared

23/04/19 19:26:28 INFO BlockManager: BlockManager stopped

23/04/19 19:26:28 INFO BlockManagerMaster: BlockManagerMaster stopped

23/04/19 19:26:28 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

23/04/19 19:26:28 INFO SparkContext: Successfully stopped SparkContext

[success] Total time: 12 s, completed Apr 19, 2023 7:26:28 PM

23/04/19 19:26:29 INFO ShutdownHookManager: Shutdown hook called

23/04/19 19:26:29 INFO ShutdownHookManager: Deleting directory /private/var/folders/22/ntjwd5dx691gvkktkspl0f_00000gq/T/spark-9d00ad16-6f44-495b-b561-4d7bba1f7918

23/04/19 19:26:29 ERROR Configuration: error parsing conf core-default.xml

java.io.FileNotFoundException: find-retired-people-scala/target/bg-jobs/sbt_e72285b9/target/f5c922ec/359669fc/hadoop-client-api-3.3.4.jar (No such file or directory)

at java.util.zip.ZipFile.open(Native Method)

at java.util.zip.ZipFile.<init>(ZipFile.java:231)

at java.util.zip.ZipFile.<init>(ZipFile.java:157)

at java.util.jar.JarFile.<init>(JarFile.java:171)

at java.util.jar.JarFile.<init>(JarFile.java:108)

at sun.net.www.protocol.jar.URLJarFile.<init>(URLJarFile.java:93)

at sun.net.www.protocol.jar.URLJarFile.getJarFile(URLJarFile.java:69)

at sun.net.www.protocol.jar.JarFileFactory.get(JarFileFactory.java:99)

at sun.net.www.protocol.jar.JarURLConnection.connect(JarURLConnection.java:122)

at sun.net.www.protocol.jar.JarURLConnection.getInputStream(JarURLConnection.java:152)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:3009)

at org.apache.hadoop.conf.Configuration.getStreamReader(Configuration.java:3105)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3063)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:3036)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2914)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2896)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1246)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1863)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1840)

at org.apache.hadoop.util.ShutdownHookManager.getShutdownTimeout(ShutdownHookManager.java:183)

at org.apache.hadoop.util.ShutdownHookManager.shutdownExecutor(ShutdownHookManager.java:145)

at org.apache.hadoop.util.ShutdownHookManager.access$300(ShutdownHookManager.java:65)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:102)

Exception in thread "Thread-3" java.lang.RuntimeException: java.io.FileNotFoundException: find-retired-people-scala/target/bg-jobs/sbt_e72285b9/target/f5c922ec/359669fc/hadoop-client-api-3.3.4.jar (No such file or directory)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3089)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:3036)

at org.apache.hadoop.conf.Configuration.loadProps(Configuration.java:2914)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2896)

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1246)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1863)

at org.apache.hadoop.conf.Configuration.getTimeDuration(Configuration.java:1840)

at org.apache.hadoop.util.ShutdownHookManager.getShutdownTimeout(ShutdownHookManager.java:183)

at org.apache.hadoop.util.ShutdownHookManager.shutdownExecutor(ShutdownHookManager.java:145)

at org.apache.hadoop.util.ShutdownHookManager.access$300(ShutdownHookManager.java:65)

at org.apache.hadoop.util.ShutdownHookManager$1.run(ShutdownHookManager.java:102)

Caused by: java.io.FileNotFoundException: find-retired-people-scala/target/bg-jobs/sbt_e72285b9/target/f5c922ec/359669fc/hadoop-client-api-3.3.4.jar (No such file or directory)

at java.util.zip.ZipFile.open(Native Method)

at java.util.zip.ZipFile.<init>(ZipFile.java:231)

at java.util.zip.ZipFile.<init>(ZipFile.java:157)

at java.util.jar.JarFile.<init>(JarFile.java:171)

at java.util.jar.JarFile.<init>(JarFile.java:108)

at sun.net.www.protocol.jar.URLJarFile.<init>(URLJarFile.java:93)

at sun.net.www.protocol.jar.URLJarFile.getJarFile(URLJarFile.java:69)

at sun.net.www.protocol.jar.JarFileFactory.get(JarFileFactory.java:99)

at sun.net.www.protocol.jar.JarURLConnection.connect(JarURLConnection.java:122)

at sun.net.www.protocol.jar.JarURLConnection.getInputStream(JarURLConnection.java:152)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:3009)

at org.apache.hadoop.conf.Configuration.getStreamReader(Configuration.java:3105)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3063)

... 10 more

UPDATE 3 (4/24/2023):

I tried adding hadoop-common and hadoop-client-api, but no luck; the same error still occurs:

name := "FindRetiredPeople"

version := "1.0"

scalaVersion := "2.12.17"

libraryDependencies ++= Seq(

"org.apache.spark" %% "spark-core" % "3.4.0",

"org.apache.spark" %% "spark-sql" % "3.4.0",

"org.apache.hadoop" % "hadoop-client" % "3.3.4",

"org.apache.hadoop" % "hadoop-client-api" % "3.3.4",

"org.apache.hadoop" % "hadoop-common" % "3.3.4"

)