I am using an internal load balancer in GCP to route traffic between two memory-sensitive VMs.

For testing, I am currently using only one VM, so all traffic is routed to that single server.

On the client I use JavaScript (axios) to POST queries to the load balancer, for example:

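```javascript
const axios = require("axios");

// Enclosing async function shown for context; the snippet uses await and return
async function postQuery(LoadBalancerURL, data) {
  try {
    // Intended: give up if the server has not responded within 100 seconds
    let response = await axios.post(LoadBalancerURL, data, { timeout: 100 * 1000 });

    // process the response

    return;
  } catch (err) {
    // err must be bound here, otherwise this line throws a ReferenceError
    console.log("error in query : ", err);
  }
}
```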

For every query I set a client-side timeout, so that if the server does not respond within 100 seconds, the connection is terminated.

However, in this case the timeout never fires: the request to the load balancer waits indefinitely and crashes the application.
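The client-side timeout may not behave as a hard deadline. In Node.js, axios appears to implement `timeout` through the request's socket timeout, which (as far as I know) measures socket inactivity rather than total elapsed time. Below is a minimal sketch of an explicit wall-clock deadline, assuming Node.js 15+ (global `AbortController`) and an axios version that accepts the `signal` request option; `withDeadline` is a hypothetical helper, not part of axios.

```javascript
// Hypothetical helper: enforces a wall-clock deadline on an async task and
// aborts the task through an AbortSignal once the deadline passes.
async function withDeadline(task, ms) {
  const controller = new AbortController();
  let timer;
  const deadline = new Promise((_, reject) => {
    timer = setTimeout(() => {
      // Reject first so the race settles with the deadline error,
      // then abort the underlying request.
      reject(new Error(`Deadline of ${ms} ms exceeded`));
      controller.abort();
    }, ms);
  });
  try {
    // The task receives the signal so it can cancel its own I/O.
    return await Promise.race([task(controller.signal), deadline]);
  } finally {
    // Always clear the timer so it cannot fire after the task settled.
    clearTimeout(timer);
  }
}

// Usage with axios (assumes LoadBalancerURL and data from the snippet above):
// const response = await withDeadline(
//   (signal) => axios.post(LoadBalancerURL, data, { signal }),
//   100 * 1000
// );
```

Because the timer lives in the caller rather than on the socket, this bounds the total time even if bytes keep trickling in; the rejection can be handled in the same `catch (err)` block as before.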

When I call the backend VMs directly instead, I do not see this issue.

I would appreciate help finding the root cause of this issue and a solution.

Update 1

Updating the question with more details:

The code that sends the POST request runs in a GCP Cloud Run service.

I am still testing with only one backend server behind the load balancer. I also tried sending the query while that server was down and, as expected, received an error response immediately.

The load balancer settings are:

- Backend type: Instance group
- Protocol: HTTP
- Timeout: 200 seconds
- Rate limit: I do not see an option for this in GCP.

Update 2

I have done some more testing; here are the findings.

The screenshot below is from the load balancer logs. It shows that the load balancer responded to the client (the Cloud Run service) after 4.106 seconds.

However, that response never shows up in the axios call.
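To compare the client's view with the 4.106 s latency in the load balancer logs, it can help to timestamp the call on the Cloud Run side. A minimal sketch; `timed` is a hypothetical diagnostic helper, and logging a `started` line makes it obvious when the promise never settles.

```javascript
// Hypothetical diagnostic helper: logs when an async call starts and how long
// it takes to settle, so client timings can be compared with the LB logs.
async function timed(label, fn) {
  const start = Date.now();
  console.log(`${label}: started at ${new Date(start).toISOString()}`);
  try {
    const result = await fn();
    const elapsedMs = Date.now() - start;
    console.log(`${label}: resolved after ${elapsedMs} ms`);
    return { ok: true, result, elapsedMs };
  } catch (error) {
    const elapsedMs = Date.now() - start;
    console.log(`${label}: rejected after ${elapsedMs} ms`, error);
    return { ok: false, error, elapsedMs };
  }
}

// Usage (assumes axios, LoadBalancerURL and data from the snippet above):
// const outcome = await timed("LB query", () =>
//   axios.post(LoadBalancerURL, data, { timeout: 100 * 1000 })
// );
```

If the log shows a `started` line with no matching `resolved` or `rejected` line while the load balancer log records a response, the promise never settled on the client side.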

Update 3

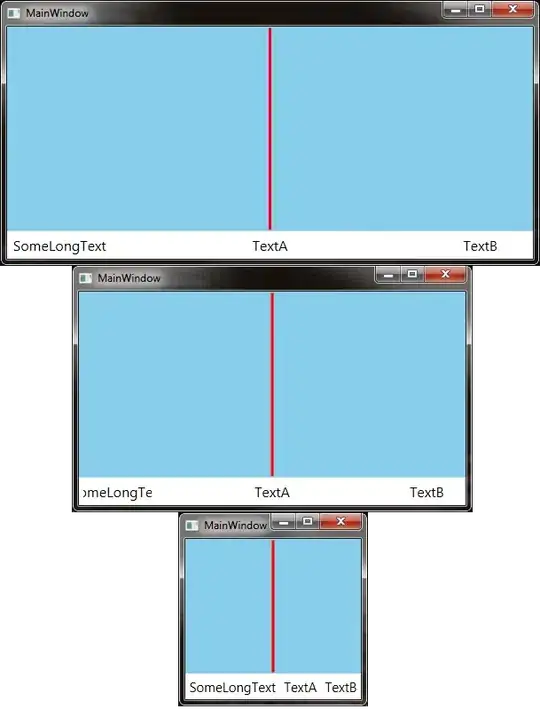

The load balancer backend configuration is as follows:

Balancing mode is set to Utilization with the following values:

- Maximum backend utilization: 80%
- Maximum RPS: 2
- Scope: per instance
- Capacity: 100%
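As I understand GCP's capacity settings (an assumption worth checking against the backend service documentation), the group's RPS target is the per-instance maximum RPS multiplied by the number of instances and the capacity setting, so with the single test VM and the values above:

$$
\text{target RPS} = N_{\text{instances}} \times \text{max RPS per instance} \times \text{capacity} = 1 \times 2 \times 1.00 = 2
$$

With both VMs in the group, the same calculation would give $2 \times 2 \times 1.00 = 4$ RPS.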

Thank you,

KK